Are S3 signed URLs secure?

The potential security problems with file distribution based on signed URLs

Signed URLs are a mechanism for distributing files when streaming them through the backend is not an option. This is especially the case in a Function-as-a-Service model, such as AWS Lambda. They work by separating the access control from the actual download and moving the latter to a dedicated service: in AWS's case, S3.

URL signing is primarily a security feature: it allows targeted distribution of private files, so any flaw in it is likely to have serious consequences. This is why it's important to know what can go wrong and what impact an improper implementation can have on the whole system.

Let's see how the signing algorithm works and what can impact its security!

Algorithm

Signing a URL works by using the Secret Access Key to calculate a signature for a request. On AWS's side, the same key is used to recompute the signature and check that the two match. The key itself never travels over the network, and in this sense it's not like a password.

One potential problem with this scheme is if an attacker can recover the secret key from a signed URL. Whether this is possible depends on the algorithm used in the protocol. In AWS's case, it is HMAC-SHA256, a keyed construction over the SHA-256 hash function that is considered strong, and the secret keys are long and random enough. Recovering the secret key from the signature is not feasible. Likewise, an attacker cannot modify a portion of the URL, such as the filename, and fabricate a matching signature.
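To make the sign-and-verify idea concrete, here is a minimal sketch using Node's built-in crypto module. It is deliberately simplified and is not the real AWS Signature Version 4 format (which signs a canonical request covering the method, path, headers, and query parameters); the `signUrl` and `verifyUrl` helpers, the `expires`/`signature` parameter names, and the string being signed are all hypothetical. It shows why a signature computed for one filename does not validate for another.

```javascript
const crypto = require("crypto");

// Hypothetical, simplified scheme (NOT the real SigV4 canonical request):
// the signed string covers the object path and the expiry (Unix seconds).
function signUrl(secretKey, path, expiresAt) {
  const signature = crypto
    .createHmac("sha256", secretKey)
    .update(`${path}\n${expiresAt}`)
    .digest("hex");
  return `${path}?expires=${expiresAt}&signature=${signature}`;
}

// The verifier holds the same secret, recomputes the HMAC, and compares.
function verifyUrl(secretKey, url, now) {
  const [path, query] = url.split("?");
  const params = new URLSearchParams(query);
  const expiresAt = Number(params.get("expires"));
  const given = Buffer.from(params.get("signature") || "", "hex");
  const expected = crypto
    .createHmac("sha256", secretKey)
    .update(`${path}\n${expiresAt}`)
    .digest();
  // timingSafeEqual throws on a length mismatch, so compare lengths first.
  const signatureOk =
    given.length === expected.length && crypto.timingSafeEqual(given, expected);
  return signatureOk && now < expiresAt;
}

const secret = "example-secret"; // placeholder, not a real AWS key
const url = signUrl(secret, "/bucket/ebook.pdf", 1700000900);
console.log(verifyUrl(secret, url, 1700000000)); // true
console.log(verifyUrl(secret, url.replace("ebook.pdf", "otherbook.pdf"), 1700000000)); // false
```

Changing the filename changes the string the verifier hashes, and without the secret there is no way to compute the matching signature for the new string.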

The algorithm is secure.

If you follow the news, you know that AWS is in the process of deprecating Signature Version 2 in favor of version 4. This might suggest that the old version has problems that warrant the deprecation. But v2 also uses HMAC-SHA256, along with HMAC-SHA1, and there is no known practical attack against either of them as used here.

The benefit of the change is that version 4 provides better compartmentalization inside AWS by deriving a signing key from the Secret Access Key for each day, region, and service. With this, AWS does not need to distribute the Secret Access Keys to each region, only the signing keys. If a region is somehow compromised, the Secret Access Key is still secure.
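The derivation is a chain of HMAC-SHA256 operations, as described in the AWS Signature Version 4 documentation: the secret is prefixed with `AWS4`, then the date (`YYYYMMDD`), the region, the service, and the fixed string `aws4_request` are folded in one after another. A sketch with Node's crypto module; the secret and the dates are placeholders.

```javascript
const crypto = require("crypto");

const hmac = (key, data) => crypto.createHmac("sha256", key).update(data).digest();

// Derives the SigV4 signing key. Verifying a signature only needs this
// derived key, which is scoped to a single day, region, and service.
function deriveSigningKey(secretAccessKey, dateStamp, region, service) {
  const kDate = hmac(`AWS4${secretAccessKey}`, dateStamp); // dateStamp: "YYYYMMDD"
  const kRegion = hmac(kDate, region);
  const kService = hmac(kRegion, service);
  return hmac(kService, "aws4_request");
}

const secret = "example-secret-access-key"; // placeholder, not a real credential
const frankfurt = deriveSigningKey(secret, "20240101", "eu-central-1", "s3");
const virginia = deriveSigningKey(secret, "20240101", "us-east-1", "s3");
console.log(frankfurt.equals(virginia)); // false: each region gets its own key
```

Because HMAC is one-way, a leaked regional signing key does not reveal the Secret Access Key, and it stops being useful after its day ends.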

This change brings no additional security to the end-user.

What can go wrong

The algorithm used in the signing process is secure, but that does not mean that using signed URLs magically makes everything better. Several things can go wrong, and especially when multiple services work together, even a small misconfiguration can result in security problems.

Let's see the most common and impactful problems and how to fix them!

Insufficient access control

Rather than a flaw in the algorithm, the most likely source of a security incident is an attacker obtaining a valid signed URL from your backend. In this case, AWS won't detect any wrongdoing and will happily send the file.

The good news is that this is entirely under your control, as you define the rules.

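```javascript
const AWS = require("aws-sdk"); // AWS SDK for JavaScript v2
const s3 = new AWS.S3();

module.exports.handler = async (event, context) => {
  // here is where the magic happens:
  // checkAccess is your own authorization logic and params ({ Bucket, Key, Expires })
  // identifies the object to sign; both are defined elsewhere (not shown)
  if (checkAccess(event)) {
    const signedUrl = await s3.getSignedUrlPromise("getObject", params);
    return {
      statusCode: 200,
      body: signedUrl,
    };
  } else {
    return {
      statusCode: 403,
      body: "Forbidden",
    };
  }
};
```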

Signing a URL means allowing the download of a file, so it's especially important to implement proper access checking. Treat a signed URL as the file itself and check access thoroughly.

For example, a serious vulnerability is not checking the file at all but signing anything that the client requests, such as /sign?path=ebook.pdf.

If the UI offers no way to alter this call, the problem is not immediately obvious, but if an attacker changes it to /sign?path=otherbook.pdf and gets a valid signed URL in response, the access check has failed.
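A minimal sketch of checking the requested file against what the user is entitled to, instead of signing whatever arrives in `path`. The in-memory `purchases` map, the user IDs, and the `canDownload` helper are hypothetical; in a real system the entitlements would come from your database and the user ID from the verified session.

```javascript
// Hypothetical entitlement store: user ID -> files the user may download.
const purchases = new Map([
  ["user-1", new Set(["ebook.pdf"])],
  ["user-2", new Set(["otherbook.pdf"])],
]);

// Sign only when the authenticated user is entitled to the requested file.
// userId must come from the verified session, never from the request itself.
function canDownload(userId, requestedPath) {
  const owned = purchases.get(userId);
  return owned !== undefined && owned.has(requestedPath);
}

console.log(canDownload("user-1", "ebook.pdf")); // true
console.log(canDownload("user-1", "otherbook.pdf")); // false
```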

An even worse scenario is when the backend can be tricked into signing a URL for a file outside the set of files users can download. For example, imagine two directories in a bucket: logs and ebooks. Users can download ebooks, but they should not have access to the logs.

But because there might be only one signer entity, usually a Lambda execution role, it might have access to both directories. If the backend can be tricked into signing a URL outside the ebooks directory, users can get access to the logs and the confidential information in them.
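Besides the entitlement check, the backend can refuse to build an object key outside the downloadable prefix at all. A sketch, assuming downloadable files live under an `ebooks/` prefix and clients only ever send a bare filename; `toObjectKey` and the allowed character set are illustrative choices, not a fixed rule.

```javascript
// Assumption: downloadable objects live under this prefix, and clients send
// only a bare filename, never a path.
const DOWNLOAD_PREFIX = "ebooks/";
// Allow-list: letters, digits, ".", "_", "-", starting with a letter or digit,
// so path separators and ".." segments cannot appear.
const SAFE_FILENAME = /^[A-Za-z0-9][A-Za-z0-9._-]*$/;

// Returns the S3 key to sign, or null if the request could escape the prefix.
function toObjectKey(requested) {
  if (typeof requested !== "string" || !SAFE_FILENAME.test(requested)) {
    return null;
  }
  return DOWNLOAD_PREFIX + requested;
}

console.log(toObjectKey("ebook.pdf")); // ebooks/ebook.pdf
console.log(toObjectKey("../logs/app.log")); // null
```

An allow-list is easier to get right than trying to detect every traversal trick in a path.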

A solution to this problem is to use a dedicated role that only has access to the files that users can download and nothing else. This separates the permissions of the signer entity from the execution role. See this post on how to implement this.
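For illustration, the permission policy of such a dedicated signer role could allow `s3:GetObject` only under the downloadable prefix. The sketch builds a standard IAM policy document as a JavaScript object; the bucket name and prefix are placeholders, and creating the role, attaching the policy, and making the Lambda sign with that role's credentials are not shown.

```javascript
// Builds an IAM policy document that allows reading objects under one prefix
// only. When a URL signed for any other key is used, S3's access check fails.
function signerPolicy(bucket, prefix) {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: "s3:GetObject",
        Resource: `arn:aws:s3:::${bucket}/${prefix}*`,
      },
    ],
  };
}

console.log(JSON.stringify(signerPolicy("my-bucket", "ebooks/"), null, 2));
```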

Long expiration time

One of the first security mistakes people make with signed URLs is using a long expiration time. This turns them into capability URLs, usually without any consideration of the consequences. Short expiration times ensure that a compromised client can leak only its currently valid signed URLs, not the ones signed in the past.

Most cloud providers limit the maximum validity of signed URLs, usually to 7 days. This pushes people toward more secure solutions instead of signing URLs valid for years, a practice that was not uncommon before a short maximum was enforced.

Using long expiration times is not just insecure but also unnecessary. Signed URLs should be used right after signing, which makes the default of 15 minutes more than enough.
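With SigV4, the validity window is visible in the URL itself: `X-Amz-Date` is the signing time in the `YYYYMMDDTHHMMSSZ` format and `X-Amz-Expires` is the lifetime in seconds, capped at 604800 (7 days). A sketch that computes when a presigned URL stops working; the helper name and the example URL are hypothetical, and the URL omits the other signature parameters.

```javascript
// Computes the expiry time of a SigV4 presigned URL from its query string.
function presignedUrlExpiry(presignedUrl) {
  const params = new URL(presignedUrl).searchParams;
  const amzDate = params.get("X-Amz-Date") || ""; // e.g. "20240101T120000Z"
  const expires = params.get("X-Amz-Expires") || ""; // lifetime in seconds
  const m = /^(\d{4})(\d{2})(\d{2})T(\d{2})(\d{2})(\d{2})Z$/.exec(amzDate);
  if (!m || !/^\d+$/.test(expires)) {
    throw new Error("not a SigV4 presigned URL");
  }
  const signedAt = Date.UTC(+m[1], +m[2] - 1, +m[3], +m[4], +m[5], +m[6]);
  return new Date(signedAt + Number(expires) * 1000);
}

const url =
  "https://s3.eu-central-1.amazonaws.com/bucket/file.pdf" +
  "?X-Amz-Date=20240101T120000Z&X-Amz-Expires=900";
console.log(presignedUrlExpiry(url).toISOString()); // 2024-01-01T12:15:00.000Z
```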

The proper implementation does not sign a URL for the user to use later; it makes sure the signing happens just before use. A possible solution is to send an HTTP redirect from the signing endpoint, which automatically forwards the browser to the file. See this post for implementation details.
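A minimal sketch of the redirect approach, using the same Lambda proxy response shape as the handler earlier in this post. To keep it self-contained, `checkAccess` and `sign` are injected as functions (a hypothetical structure; in practice `sign` would wrap something like `s3.getSignedUrlPromise`). The `Cache-Control: no-store` header is an added precaution so the redirect itself is not cached and replayed after the URL expires.

```javascript
// Builds a handler that signs just before use and redirects the browser to S3.
function makeRedirectHandler({ checkAccess, sign }) {
  return async (event) => {
    if (!(await checkAccess(event))) {
      return { statusCode: 403, body: "Forbidden" };
    }
    const signedUrl = await sign(event); // signed now, followed immediately
    return {
      statusCode: 302,
      headers: { Location: signedUrl, "Cache-Control": "no-store" },
    };
  };
}
```

Because the browser follows the `Location` header right away, the signed URL is used seconds after it is created and never needs to be stored or shown to the user.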

Improper URL security

A signed URL by itself grants the right to download a file; there is no additional access check against the user. In this sense, the URL is equivalent to the file itself, as with the former you can download the latter. It is important to make sure only the intended recipient has the URL.

First, URLs can be compromised in flight, especially when they are transferred via HTTP instead of HTTPS. The web is already moving towards HTTPS everywhere, which protects signed URLs in transit to the user. Make sure you use HTTPS between the users and the backend.

Second, they can show up in the browser's history, or the user can intentionally copy and save them, so a later compromise may leak the URLs as well. The expiration mechanism of signed URLs helps here, as they become useless after a certain time has passed.

Using a short expiration time makes sure a security breach does not compromise old URLs as they are already expired and useless. Make sure to use expiration times as short as possible.

Misconfigured caching

Since signed URLs are time-sensitive (they expire), caching can be a problem in some cases. Caching can happen in two places: on the client side and in a proxy.

Client-side caching of signed URLs means that somebody who has already downloaded a file might be able to download it again even after the URL has expired. Browsers use memory and disk caches that can still hold the file and return it without contacting S3 to check validity.

This is usually not a problem as the user already has the file.

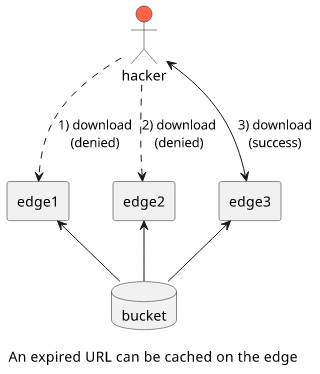

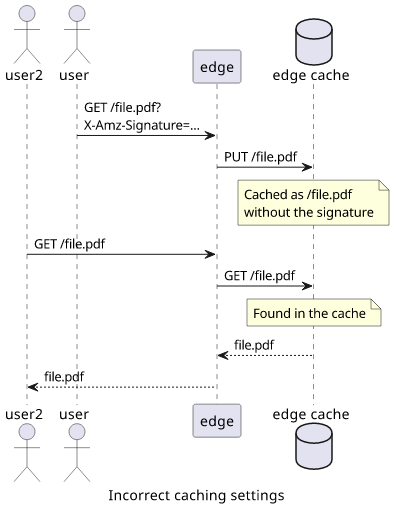

Proxy caching

Misconfigured proxy caching, on the other hand, is a serious problem.

Let's say you use CloudFront as a single point of contact and utilize edge caching to speed up access for users. This provides a ton of benefits, like HTTP/2 support, no additional TLS handshake, simplified CSP, no CORS problems, and caching. When a user downloads a file, the next user does not need to go all the way to the bucket but can get it from a nearby edge cache.

But what if a URL has expired? If the file is found in the cache, the request won't go all the way to S3, so nothing checks the signature or the expiration. As a result, if somebody accesses a signed URL while it is valid, the file stays available through that edge location to anyone who requests the same URL, even after it expires. An attacker who gains access to an expired signed URL can try to find an edge where the file is still cached.

The solution is to set the proxy cache TTLs carefully. In CloudFront's case, you can set the min, the max, and the default TTLs. With these three, you can specify how long a given file will be cached on the edges.

The safest option is to disable proxy caching altogether by setting all TTLs to 0:

```hcl
default_cache_behavior {
  # ...

  # Disable caching
  default_ttl = 0
  min_ttl     = 0
  max_ttl     = 0
}
```

If you want edge caching, you need to limit the cache duration to an acceptable value. But keep in mind that the proxy caching time is added to the expiration time of the signed URL: an object cached just before the URL expires stays available for the full cache duration. If you use the default 15 minutes for URL expiration and set up 30 minutes of caching on CloudFront, the file can remain available for up to 45 minutes after signing.
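How long the file actually stays reachable depends on when the first request populates the cache. A sketch of that arithmetic, in seconds; the function and field names are illustrative, and it assumes the edge keeps the object for the full TTL, which is an upper bound rather than a guarantee.

```javascript
// Last moment the file can be fetched with a given signed URL: directly from
// S3 until the URL expires, or from the edge until the cached copy's TTL
// runs out, whichever is later. All values are in seconds.
function lastAvailableAt({ signedAt, urlExpires, firstHitAt, cacheTtl }) {
  const urlExpiresAt = signedAt + urlExpires;
  if (firstHitAt > urlExpiresAt) return urlExpiresAt; // S3 rejected it, nothing cached
  return Math.max(urlExpiresAt, firstHitAt + cacheTtl);
}

// 15-minute URL, 30-minute cache TTL.
console.log(lastAvailableAt({ signedAt: 0, urlExpires: 900, firstHitAt: 0, cacheTtl: 1800 })); // 1800
console.log(lastAvailableAt({ signedAt: 0, urlExpires: 900, firstHitAt: 899, cacheTtl: 1800 })); // 2699
```

A first download right after signing keeps the file available for 30 minutes; a first download just before expiry stretches it to almost 45.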

See this post for how to implement proper caching for signed URLs.

But a proxy can be misconfigured in an even worse way than just extending expiration. If the cache key does not include all query parameters, then once somebody has accessed a signed URL, everybody can get the file, even without a valid signature!

If you use proxy caching, make sure that it does not strip any query parameters. In CloudFront, this is the Query String Forwarding and Caching setting, and the dangerous option is Forward all, cache based on whitelist. Make sure you forward all query parameters and cache based on all of them.
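To see why the whitelist option is dangerous, here is a simplified model of a cache key (hypothetical: CloudFront's real cache key also includes other components, such as the host). When only whitelisted query parameters are part of the key, a request with a forged signature maps to the same cache entry as the legitimate one. The domain and signature values are placeholders.

```javascript
// Simplified cache key: path plus the query parameters selected for caching.
// allowlist === null means "include all query parameters".
function cacheKey(rawUrl, allowlist = null) {
  const url = new URL(rawUrl);
  const kept = [...url.searchParams]
    .filter(([name]) => allowlist === null || allowlist.includes(name))
    .sort(([a], [b]) => (a < b ? -1 : a > b ? 1 : 0));
  return url.pathname + "?" + new URLSearchParams(kept).toString();
}

const valid = "https://cdn.example.com/file.pdf?X-Amz-Expires=900&X-Amz-Signature=abc123";
const forged = "https://cdn.example.com/file.pdf?X-Amz-Expires=900&X-Amz-Signature=forged";

// Caching on X-Amz-Expires only: both requests hit the same cache entry.
console.log(cacheKey(valid, ["X-Amz-Expires"]) === cacheKey(forged, ["X-Amz-Expires"])); // true
// Including every parameter keeps them apart.
console.log(cacheKey(valid) === cacheKey(forged)); // false
```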

Tradeoffs

Since signed URLs work differently than sending the file from the backend, they come with certain problems. These are things you cannot do much about, but being aware of them helps you assess the security of your services.

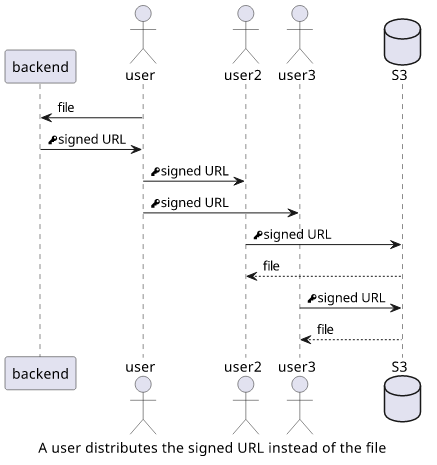

Bandwidth control

While you control access to the file via the signing procedure, you don't control bandwidth. Once you sign a URL, you can't specify how many times it will be used and by whom. Note that this is not an information leak, as the user could send the file itself after downloading it. The problem is one of cost.

Especially with large files, a user might want to share a file with others, but instead of using their own bandwidth, they figure they can send the signed URL and let the others download directly from S3. Sounds reasonable, but you, the service provider, foot the bill.

Short expiration helps in this case. If the URL is valid for only 15 minutes, this kind of sharing is still possible in theory, but a lot less likely.

Why is this a problem with signed URLs but not with the traditional solution where files are flowing through the backend? Two reasons.

First, in that case the access control check and the download are not separated. For a user to download a file available only to another user, they would have to share login credentials, which is a lot less likely.

Second, with all the traffic going through the backend, you can monitor how many times a file is downloaded and by whom: which user, and from what IP address. If you see suspicious behavior, you can deny the download.

Implementation disclosure

While the Secret Access Key is not recoverable from the URL, the Access Key ID is part of the query parameters. This is not a problem in itself, as the Access Key ID is not considered a secret, but it still reveals some information about how the backend is implemented.

Observe the differences in the URL when signing with a role and with an IAM user.

Using a role to sign a URL:

```
https://s3.eu-central-1.amazonaws.com/bucket/file.pdf
?X-Amz-Algorithm=AWS4-HMAC-SHA256
&X-Amz-Credential=ASIA...
...
```

While signing with an IAM user:

```
https://s3.eu-central-1.amazonaws.com/bucket/file.pdf
?X-Amz-Algorithm=AWS4-HMAC-SHA256
&X-Amz-Credential=AKIA...
...
```

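Notice the X-Amz-Credential parameter, which starts with the Access Key ID. When signing with a role, the key ID starts with ASIA (temporary credentials issued by STS), while for an IAM user it starts with AKIA (a long-term access key).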
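The X-Amz-Credential value has the form `<AccessKeyId>/<date>/<region>/<service>/aws4_request`, so besides the key type it also discloses the signing date and region. A sketch that extracts these parts and classifies the key by its prefix; the helper name is hypothetical and the key ID in the example is a placeholder.

```javascript
// Splits the X-Amz-Credential parameter of a SigV4 presigned URL.
function describeCredential(presignedUrl) {
  const credential = new URL(presignedUrl).searchParams.get("X-Amz-Credential");
  if (!credential) throw new Error("no X-Amz-Credential parameter");
  const [accessKeyId, date, region, service] = credential.split("/");
  const keyType = accessKeyId.startsWith("ASIA")
    ? "temporary (STS, e.g. a role session)"
    : accessKeyId.startsWith("AKIA")
      ? "long-term (e.g. an IAM user)"
      : "unknown";
  return { accessKeyId, keyType, date, region, service };
}

// In real presigned URLs the slashes are percent-encoded as %2F.
const example =
  "https://s3.eu-central-1.amazonaws.com/bucket/file.pdf" +
  "?X-Amz-Credential=AKIAIOSFODNN7EXAMPLE%2F20240101%2Feu-central-1%2Fs3%2Faws4_request";
console.log(describeCredential(example));
```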

Why is this a problem?

The best practice is to use roles instead of users for anything automated, and signing a URL on the backend is definitely in this category. Signing with an IAM user can indicate problems in the signing process, and this is observable to users.

Also, by comparing the Access Key IDs used over a period of time, an attacker might learn the key rotation policy, which is also an implementation detail.

Revocation

There is no way to revoke individual URLs. S3 does perform an access check, but it only verifies whether the signer entity is allowed to get the file. You can remove that permission, but doing so invalidates all signed URLs.

Using short expiration times helps shorten the resulting downtime: revoke the permission, wait 15 minutes, then grant it again. This way all previously signed URLs have expired and can no longer be used to download any files. Keep in mind that if you use proxy caching, files might still be available there.

But ultimately there is little you can do to prevent a misissued signed URL from being used.

Conclusion

Configured properly, signed URLs are secure. Four areas need care:

- Access control checking on the backend

- Expiration time setting

- URL security

- Proxy configuration

But if each of them gets the necessary attention, distributing files via URL signing is a safe solution.