Log review guided by tests

My second rant about the importance of the stuff in the logs.

This is a follow up of the undesired log output testing post. The issue I would like to invite you to think about is not just to check for unexpected events, but to ensure that the important information gets to the log file.

In case of an error the people responsible for the operation of a service have to take actions mostly based on the information provided in these files, which is why it's imperative to reduce the noise in the log by recording the relevant information with the relevant log level.

Checking log statements with dedicated tests seems cumbersome and unpractical to maintain. To control the contents of the log, I think it's a much better approach is the occasional review of the output, and that can be aided by the automatic testing infrastructure in many ways.

Generating reports to review

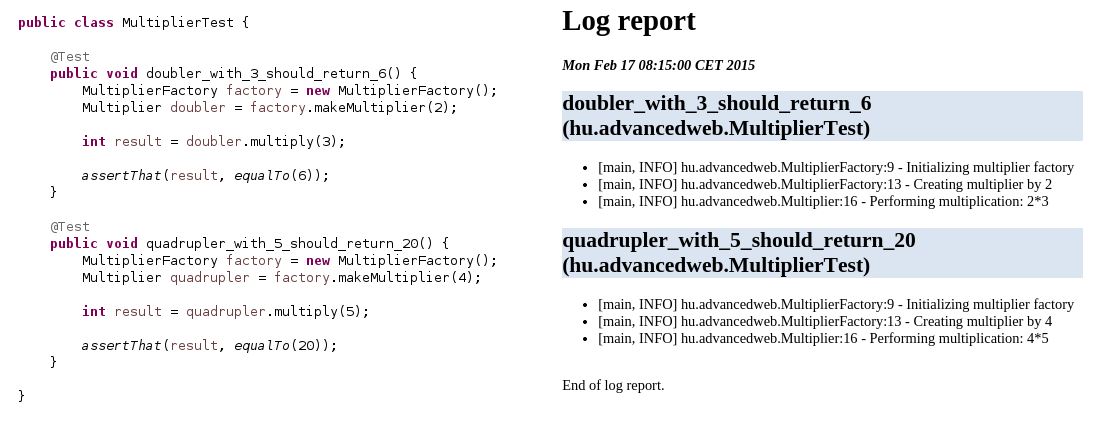

One is to record the log output for every feature test case to see what lines will be present in the log file after a particular scenario is executed. The generated reports can be checked periodically for problems. The report might contain some noise, but it can provide an opportunity to spot if something is really missing.

As discussed in the previous post, JUnit provides mechanism to intercept certain phases of the test execution, for example to execute custom assertions or reporting.

By creating and registering a similar log event listener as before, we can generate a report based on the log events occurred during the execution of the feature test suite.

import org.junit.runner.Description;

import org.junit.runner.Result;

import org.junit.runner.notification.RunListener;

...

public class LogEventListener extends RunListener {

private IntegrationTestAppender logger;

@Override

public void testRunStarted(Description description) throws Exception {

logger = new IntegrationTestAppender();

logger.setThreshold(Level.INFO);

Logger.getRootLogger().addAppender(logger);

writeToReport(renderHeader());

}

@Override

public void testStarted(Description description) throws Exception {

logger.clear();

}

@Override

public void testFinished(Description description) throws Exception {

writeToReport(renderTestExcerpt(description, logger.getEvents()));

}

@Override

public void testRunFinished(Result result) throws Exception {

writeToReport(renderFooter());

}

private String renderHeader() {

return new StringBuilder()

.append("# Log report\n")

.append("***" + new Date() + "***\n\n")

.toString();

}

private String renderTestExcerpt(Description description, List<LoggingEvent> events) {

StringBuilder sb = new StringBuilder();

sb.append("##" + description.getDisplayName() + "\n");

for (LoggingEvent event : events)

sb.append(" - " + format(event) + "\n");

return sb.toString();

}

private String format(LoggingEvent event) {

return new StringBuilder()

.append("[")

.append(event.getThreadName())

.append(", ")

.append(event.getLevel().toString())

.append("] ")

.append(event.getLocationInformation().getClassName())

.append(":")

.append(event.getLocationInformation().getLineNumber())

.append(" - ")

.append(event.getRenderedMessage())

.toString();

}

private String renderFooter() {

return new StringBuilder()

.append("End of log report.\n")

.toString();

}

private void writeToReport(String str) {

System.out.println(str); // preferably write to file

}

}During the operation of a software usually all log events with INFO or higher level are relevant, so the listener in this example registers the log appender accordingly before the test starts. It produces markdown output that can be easily converted into HTML.

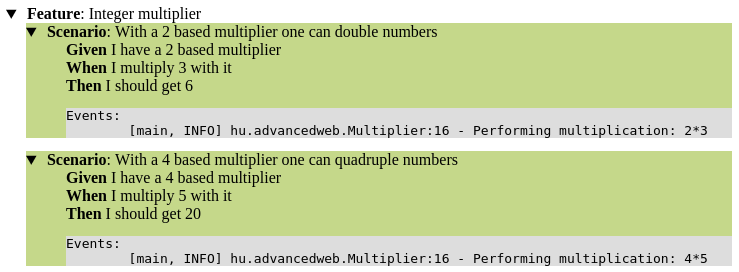

One problem with this approach is that the report will contain every log statement occurred during the whole test case, including those that are related to the necessary setup work. (Of course unit tests with mocks are less affected, but integration and feature tests inevitably contains such parts.) With a test structured like the example above, there might be not much that we can do about this, but with differently organized tests there is an opportunity to ignore certain phases of a scenario. For example with Cucumber a typical test looks like the following (with the necessary glue code):

Feature: Integer multiplier

Scenario: With a 2 based multiplier one can double numbers

Given I have a 2 based multiplier

When I multiply 3 with it

Then I should get 6

Scenario: With a 4 based multiplier one can quadruple numbers

Given I have a 4 based multiplier

When I multiply 5 with it

Then I should get 20Multiplier multiplier;

int result;

@Given("^I have a (\\d+) based multiplier$")

public void I_have_a_based_multiplier(int base) throws Throwable {

MultiplierFactory factory = new MultiplierFactory();

multiplier = factory.makeMultiplier(base);

}

@When("^I multiply (\\d+) with it$")

public void I_multiply_with_it(int number) throws Throwable {

result = multiplier.multiply(number);

}

@Then("^I should get (\\d+)$")

public void I_should_get(int expectedValue) throws Throwable {

assertThat(result, equalTo(expectedValue));

}This test is organized into multiple steps in a given-when-then (GWT) fashion, where the "when" part describes something that can happen in a typical real life situation, and the "given" and "then" parts are responsible for documenting the appropriate pre and expected post conditions. So usually, while the former one is what the scenario is really about, the log information related to the latter two can be ignored.

Cucumber provides Hooks to enable users to execute custom code before and after every test scenario, but executing additional code before or after the steps is more convenient with the JUnit listeners, as logically every step in a Cucumber scenario is a separate test for the JUnit. So, with a slightly modified version of the LogEventListener we can filter the relevant log events by keeping the ones generated for "when" parts of the scenarios.

To put the icing on the cake, Cucumber itself is able to present the test results in a nice looking HTML report. Powered with the adequate information collected by the LogEventListener, this report can be enhanced to contain additional information for every scenario.

Creating nice, digestible reports opens the opportunity for manual inspection. For example, during a routine checkup the reviewer can load the report from the previous inspection and the current one to a suitable text comparison tool, and be able to oversee differences.

As mentioned in the earlier post, there can be difficulties when the test suite runs in a different process. In this case, some additional work has to be done to create the report with all the necessary information. For example, the system under test might be able to generate the report if the identifiers of each test are provided by the testing application.

Further enhancements with automatic checks

A stricter approach is to programmatically compare the generated log output to a baseline. Based on the comparison various actions can be taken, like generate reports or send emails with the differences or even break the build. If the generated log output is strictly contrasted to a standard, it might cause a lot of false positive alerts, because of the slight differences in the individual log outputs, like seemingly random events from background tasks or insignificant ordering issues of the events.

So for practical reasons there is some leniency that needs to be used when dealing with the automatic inspection of the logs, as well as careful planning on designing how and what aspects of the output should be compared. One way to do it might be to create a log norm in a convenient format, that describes the important parts that the tests should emit during the execution. At the end of the test suite, this norm is the baseline to compare the actual log output. It's also important to decide how the actual check should work. It raises many questions specific to the task at hand, like how to handle

- when a test case logs more than the norm,

- the accidental noise,

- if the emitted log is a bit different, but contains the important information.

Another way might be to store the generated log reports for every build and provide comprehensive information about the change of the report over time, such as log size variation, or automatically generate diff reports that can be easily checked. This can be aided with the continuous integration and inspection infrastructure, that already keeps track many metrics of the software.

I think it's worth thinking about the more automated solutions, but generally the manual review is more feasible, as the requirements about the logs are usually not exactly specified. If a scenario has strong requirements about logging, it might be another option to turn the log checks into real tests, that has documentation value as well.