Detecting possibly duplicate Strings

This is a blog post about the algorithm of detecting possible duplicates from a set of Strings

Background

A few days ago I was collecting content for an app of mine, and after a while I had quite a lot. I wanted to filter out lines I had found multiple times from different sources, but the data set was beyond the size to do it manually. I've googled for a while for some Google Docs extensions that would do that, but none of them was working. I've some previous experience with text similarity, so I wrote a simple script to analyze the data set for me.

The problem

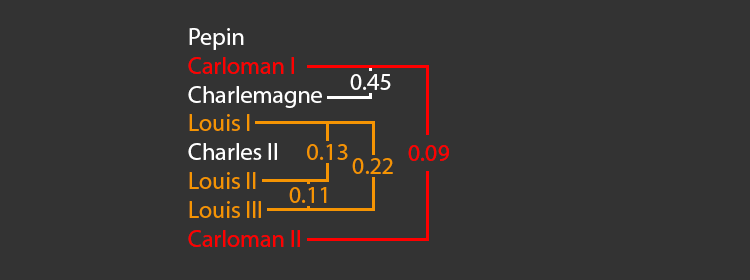

So the problem is: I have a text file with many lines and I want to know the most similar lines in it. Sadly this can not be fully automatized. For example, the world the and teh are very different, although they are just a mistype away, while French King Louis II and French King Louis VII seem very similar, they are actually 258 years away from each other. So an algorithm can only provide possible duplicates.

Finding similarities

Levenshtein distance

The central part of the algorithm is the Levenshtein distance. It basically calculates a distance of two texts, where every character deleted, inserted or modified means 1 step. So if the distance from 2 strings are small, there is only a few changes needed to make one from the other, whereas if the distance is large, there are many changes needed. This wiki page has a comprehensive set of languages the algorithm is implemented in. As I'm working with a Node.js script, I used the Javascript one:

// Compute the edit distance between the two given strings

function getEditDistance(a, b) {

if(a.length === 0) return b.length;

if(b.length === 0) return a.length;

var matrix = [];

// increment along the first column of each row

var i;

for(i = 0; i <= b.length; i++){

matrix[i] = [i];

}

// increment each column in the first row

var j;

for(j = 0; j <= a.length; j++){

matrix[0][j] = j;

}

// Fill in the rest of the matrix

for(i = 1; i <= b.length; i++){

for(j = 1; j <= a.length; j++){

if(b.charAt(i-1) == a.charAt(j-1)){

matrix[i][j] = matrix[i-1][j-1];

} else {

matrix[i][j] = Math.min(matrix[i-1][j-1] + 1, // substitution

Math.min(matrix[i][j-1] + 1, // insertion

matrix[i-1][j] + 1)); // deletion

}

}

}

return matrix[b.length][a.length];

};

Finding the closest pairs

Note: I'm using underscore.js when working with collections, as it makes it much clearer and easier.

Let's assume we have read the lines to a variable called lines, and it is an array and contains one element per line. First, we need to construct all the pairs from the texts. For this, we need to map the elements to return an array of the pairs, keeping in mind that we don't want to include the same pair in a reverse order too. This is done with the following snippet:

_.chain(lines)

.map(function(line,idx,list){

return _.chain(list)

.rest(idx+1)

.map(function(other){return [line,other]})

.value()

})

Note the map function's arguments: the first is the actual element, the second is the actual index, and the third is the whole list. These all are needed here. Also, we can not compare the strings in order to avoid the reverse pairs to end up in the result, as there can be duplicates. So we need to use the index here. This produces the following:

['a','b','c'] => [[['a','b'],['a','c']],[['b','c']],[]]

In order to get the pairs in an array, we need to flatten the array 1 level:

.flatten(true)

This gives us:

['a','b','c'] => [['a','b'],['a','c'],['b','c']]

Next, calculate the distance between all pairs and order them in a decreasing way:

.map(function(pair){

return [pair[0],pair[1],getEditDistance(pair[0],pair[1])];

})

.sortBy(function(qs){

return qs[2];

})

This gives us:

['a','b','c'] => [['a','b',1],['a','c',1],['b','c',1]]

So far so good, it's almost done. But let's look at a corner case! Consider the following lines:

this is a very long line

this is a very very long line

egg

ham

bee

cup

This gives us the 3 letter words first, and only then the longer lines, although they are very different and the longer lines are very similar. To overcome this, we need to normalize the distance to be proportional to the length of the lines (the longer one in the pair actually). To do this, simply divide the distance with the longer text:

return [pair[0],pair[1],getEditDistance(pair[0],pair[1]) / Math.max(pair[0].length,pair[1].length)];

This way we get the correct result:

[ [ 'this is a very long line', 'this is a very very long line', 0.1724137931034483 ],

[ 'this is a very long line', 'egg', 0.9166666666666666 ],

...

]

Performance

The running time is clearly O(n^2) as we need to calculate all pairs because we need to order them. For a few hundred lines it ran in a few seconds, and I think it should be alright for a few thousands at least.