Secure tempfiles in NodeJS without dependencies

Handling temporary files and directories the right way

Tempfile use cases

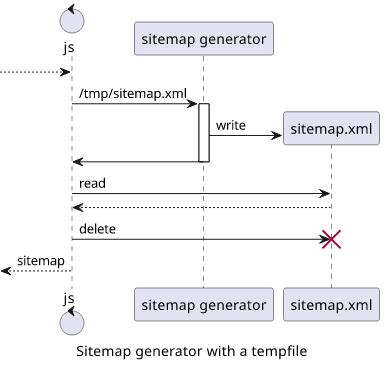

Recently, I've been working on a static site generator, and I needed to implement how the sitemap.xml is generated. The whole project was a Node HTTP server that generated the whole site on the fly, with no files written to disk. To generate a sitemap, I decided to use a crawler that maps the site and returns the XML. When there is a request to /sitemap.xml, the crawler does its thing, then the HTTP server sends back the XML. This fits nicely into the functional architecture.

One library to crawl the site and generate the sitemap is the sitemap generator package. But this requires a file to save the generated sitemap, and I could not find an easy way to extract the result otherwise.

// what should be the filepath?
const generator = sitemapGenerator(url, {
  filepath: file,
});

Well, just put the sitemap somewhere, then delete it later. From the outside, it looks like a functional solution.

// tmpFile is a random filename in /tmp
const generator = sitemapGenerator(url, {
  filepath: tmpFile,
});

// ...generate the sitemap.xml

// read the results
const result = await fsPromises.readFile(tmpFile, "utf8");

// delete the temporary file
await fsPromises.unlink(tmpFile);

// tmpFile is gone

Some time later, I worked on a PDF generator and needed to convert the pages of a PDF to images. Luckily, there is Ghostscript, which is the de facto tool for anything related to PDFs.

Its documentation is rather lengthy, and it discusses how Ghostscript can be used with pipes instead of files for input/output. But the problem is that the PDF -> image generation produces multiple files and there is no mention of how to handle that with pipes. This left me with a solution similar to the one above, just with a temp directory:

// tmpDir/1.png, tmpDir/2.png
const child = spawn("gs",
  ["-q", "-sDEVICE=png16m", "-o", `${tmpDir}/%d.png`, "-r300", "-"],
  {stdio: ["pipe", "pipe", "pipe"]}
);

The solution is the same as above: create a temporary directory, make Ghostscript put the files there, read the files, then delete them.

So it all boils down to one step: creating a temporary directory. Sounds simple, right? os.tmpdir() returns the directory where programs can put their stuff that will be cleaned up after some time, and from there it's just a matter of generating a sufficiently random filename.

When I started working on this post my plan was to introduce the Tempy library and how easily it solves this problem.

Then I started reading.

And man, tempfiles are a mess.

Solution

Let's jump into the solution first, then discuss why it works this way in the following sections.

I wanted a solution that:

- Is secure and promotes secure use

- Cleans up after itself

- Uses as few dependencies as possible

And here is the result, with exactly zero dependencies:

// fs is promises!
const fs = require("fs").promises;
const os = require("os");
const path = require("path");

const withTempFile = (fn) => withTempDir((dir) => fn(path.join(dir, "file")));

const withTempDir = async (fn) => {
  const dir = await fs.mkdtemp(await fs.realpath(os.tmpdir()) + path.sep);
  try {
    return await fn(dir);
  } finally {
    fs.rmdir(dir, {recursive: true});
  }
};

To use it, copy-paste it into your project and use the two functions, depending on whether you need a file or a directory.

The sitemap generator works like this:

const sitemap = await withTempFile(async (file) => {
  // file is the temp file location
  await new Promise((res, rej) => {
    const generator = sitemapGenerator("http://localhost:3000/", {
      filepath: file,
    });
    generator.on("done", res);
    generator.on("error", rej);
    generator.start();
  });
  return await fs.readFile(file, "utf8");
});

// the sitemap file is gone

And with Ghostscript:

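// spawn comes from: const {spawn} = require("child_process");
const images = await withTempDir(async (tmpDir) => {
  // tmpDir is created
  await new Promise((res, rej) => {
    const child = spawn("gs",
      ["-q", "-sDEVICE=png16m", "-o", `${tmpDir}/%d.png`, "-r300", "-"],
      {stdio: ["pipe", "pipe", "pipe"]}
    );
    child.stdin.write(pdf);
    child.stdin.end();
    // collect stderr instead of rejecting on the first chunk,
    // so that warnings alone do not fail the conversion
    let stderr = "";
    child.stderr.on("data", (data) => {
      stderr += data;
    });
    // spawn failures (for example, gs is not installed) arrive as "error"
    child.on("error", rej);
    // decide success or failure based on the exit code
    child.on("exit", (code) => {
      if (code === 0) {
        res();
      } else {
        rej(new Error(`gs exited with code ${code}: ${stderr}`));
      }
    });
  });
  const files = await fs.readdir(tmpDir);
  return Promise.all(
    files
      .sort(new Intl.Collator(undefined, {numeric: true}).compare)
      .map((filename) => fs.readFile(`${tmpDir}/${filename}`))
  );
});

// tmpDir is gone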

Why tempfiles are hard

So, the above code looks simple. Why do I say tempfiles are hard?

Well, this piece of code has a very high thinking/characters ratio, that's for sure. And while there are libraries out there that provide more or less the same functionality (tempy and tmp are the two main ones), I've found they don't promote secure usage, and they are a bit heavyweight, as most of their dependencies are now built into Node itself.

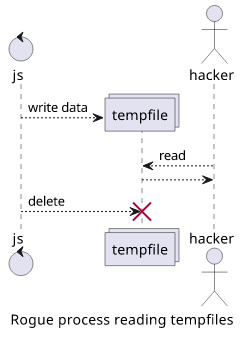

Problem #1: Information leakage

This mostly boils down to file permissions, which are the primary problem with tempfiles. And it's surprisingly hard to set them up right.

There are two kinds of problems with software: one that affects functionality and one that does not. Since something is considered "broken" when it does not work, the first category gets way more attention. Even the most rudimentary solution to tempfiles works just fine: it will pass QA and will be marked as "Done".

But the other category of problems is way more interesting and encompasses most security problems. The tempfile solution works just fine, but do you know that no rogue process is watching for newly created files in /tmp and siphoning off anything that gets written there? The PDF with the sensitive info that a user has just uploaded, for example.

And it is especially hard to see this opportunity exists with short-lived tempfiles that are deleted a few milliseconds later.

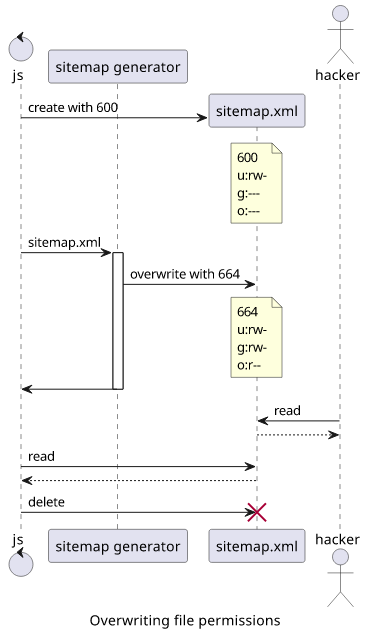

Tempfile permissions

The tmp library goes to great lengths to set restrictive permissions on tempfiles so that only the owner has access to them. But unfortunately, it's not enough.

I noticed that the sitemap generator library just overwrites the file with more permissive access:

// https://www.martin-brennan.com/nodejs-file-permissions-fstat/
const getPermissions = async (path) => {
  const stats = await fs.stat(path);
  return "0" + (stats.mode & parseInt("777", 8)).toString(8);
};

// Step 1: create a file with restrictive permissions
await (await fs.open(file, "w", 0o600)).close();
console.log(await getPermissions(file));
// 0600, all is good

// Step 2: use the tempfile
await new Promise((res, rej) => {
  const generator = sitemapGenerator("http://localhost:3000/", {
    filepath: file,
  });
  generator.on("done", res);
  generator.on("error", rej);
  generator.start();
});

// Step 3: check the permissions
console.log(await getPermissions(file));
// 0664, ouch

So it's not enough to create a file that is locked down as something down the line can just overwrite it with a different permission set. And you can't assume libraries will just do the "right thing".

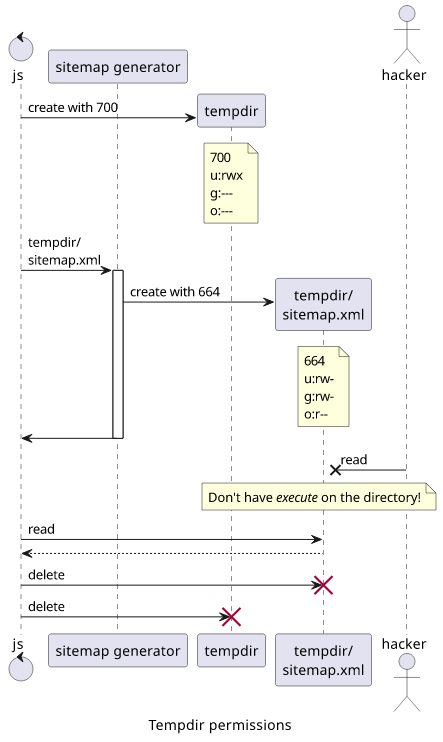

Solution: Restricted directories

The solution is to use the execute permission of a parent directory. Lacking this permission prevents the users from doing anything inside, no matter what permissions the files have.

$ mkdir securedir
$ echo "secret" > securedir/secret.txt
$ cat securedir/secret.txt
secret

# without execute permission
$ chmod -x securedir
$ cat securedir/secret.txt
cat: securedir/secret.txt: Permission denied

This yields a solution to the tempfile problem: instead of a file, create a directory that is locked down and put the file there. No matter how that file is used down the line and what permissions it gets, it stays secure.
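The same idea can be checked from Node. The following is a minimal sketch, assuming a POSIX system and Node 14.14 or later (for fs.rm); the "demo-" prefix and the helper names permissionsOf and inspectRestrictedDir are made up for illustration. It creates a directory with fs.mkdtemp, puts a deliberately world-readable file inside, and reports the permission bits of both.

```javascript
const fs = require("fs").promises;
const os = require("os");
const path = require("path");

// Returns the permission bits of a path as an octal string, e.g. "700"
const permissionsOf = async (p) =>
  ((await fs.stat(p)).mode & 0o777).toString(8);

// Creates a private temp directory, writes a file with lax permissions
// into it, and reports both permission sets before cleaning up.
// Hypothetical helper, only meant to illustrate the idea.
const inspectRestrictedDir = async () => {
  const dir = await fs.mkdtemp(path.join(os.tmpdir(), "demo-"));
  try {
    const file = path.join(dir, "secret.txt");
    await fs.writeFile(file, "secret");
    // Simulate a library that loosens the file permissions
    await fs.chmod(file, 0o644);
    return {dir: await permissionsOf(dir), file: await permissionsOf(file)};
  } finally {
    await fs.rm(dir, {recursive: true, force: true});
  }
};
```

On POSIX systems the directory should report 700 while the file reports 644: the file itself is readable by everyone, but other users cannot get past the directory to reach it.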

Problem #2: Temp directory location

Putting files in /tmp works in most cases, but is it universal?

No, for example, Windows uses a different place, and also systems might want to designate a different directory. So don't assume /tmp is the place to put tempfiles.

os.tmpdir() is better, as it picks up the temp location in a configurable way. But there are problems with it too, as it can be a symlink in some cases.

Fortunately, Node provides a utility to solve the symlink problem, so combining the two parts, the reliable solution to find out the tempdir location is:

fs.realpath(os.tmpdir())
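As a small sketch of how this could be wrapped for reuse (the name resolveTmpDir is an assumption, not something from a library):

```javascript
const fs = require("fs").promises;
const os = require("os");

// Resolves the configured temp directory to its canonical path,
// following any symlinks along the way.
const resolveTmpDir = () => fs.realpath(os.tmpdir());
```

Calling realpath on the result again should return the same path, since all symlinks are already resolved.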

Problem #3: Unique filenames

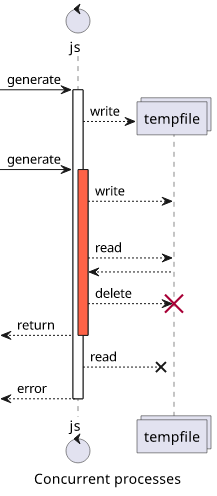

This one is more of a functional than a security problem: if the same code runs in parallel, it can overwrite previously created files. Even though NodeJS is single-threaded, if you use async fs functions (which you should), the net effect is parallel execution. Because of this, you need to make sure to include randomness in the filename.

But even with random filenames, insufficient randomness can affect security in two ways.

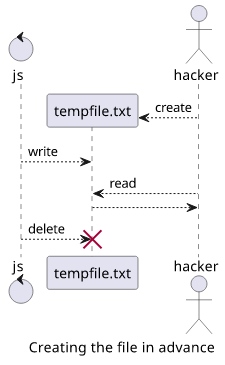

First, if an attacker can know the filename in advance, they can create the file in advance with lax permissions or some predetermined content that can corrupt something downstream.

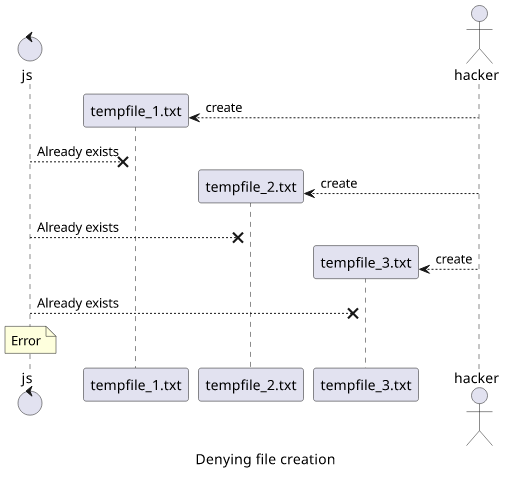

Second, they might repeatedly create the file first, denying the application the chance to create it and leading to errors in the application itself.

Tempfile libraries go to great lengths to provide randomness for the file path, usually relying on crypto. This is random enough, but it is not needed.

It is a solved problem already: the NodeJS function fs.mkdtemp not only takes care of randomness and uniqueness but also of setting the necessary permissions. Just make sure to use path.sep at the end for platform independence.

const dir = await fs.mkdtemp(await fs.realpath(os.tmpdir()) + path.sep);

Tempy takes care of this, but tmp does not.
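To see the uniqueness in action, here is a small sketch that creates several directories concurrently from the same prefix. The helper name createTempDirs is hypothetical.

```javascript
const fs = require("fs").promises;
const os = require("os");
const path = require("path");

// Creates `count` temp directories concurrently, all from the same prefix.
// fs.mkdtemp appends random characters to the prefix, so the results differ.
const createTempDirs = async (count) => {
  const prefix = (await fs.realpath(os.tmpdir())) + path.sep;
  return Promise.all(Array.from({length: count}, () => fs.mkdtemp(prefix)));
};
```

Each call gets its own directory even though they all start at the same time, so parallel invocations do not step on each other's files.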

Problem #4: Cleanup

The temp folder is handled by the operating system, which makes sure it will be cleaned up eventually. Combined with the unique filenames discussed above, this means the application won't run out of space or run into errors. But it still means that clutter accumulates, even though with an upper limit. So it seems there is no need to dispose of the files when the application no longer needs them. This is the route Tempy takes.

But there are two problems with this approach.

The first one is practical. The OS cleans up eventually, but when? The temp directory might have a lot of files, which slows down everything that operates on the directory level, such as ls. And when the OS does clean it up, it has no way of knowing when something is really not needed, and there is a slight chance it will remove something it shouldn't. Just by deleting the files it no longer needs, the application can be a good citizen that cleans up after itself.

The second problem is related to security. If tempfiles contain sensitive information, then you want to get rid of them as soon as possible, even when using the correct permissions. If something goes wrong, you don't want to expose a long list of sensitive files. With auto-cleanup, at least you limit the potential fallout.

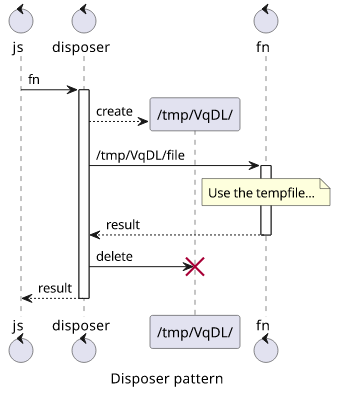

Disposer pattern

And this auto-cleanup does not have to be complicated either, and it can be made the default. If you are familiar with Java, try-with-resources is a similar construct.

It works by passing a function and wrapping the call to that function in a try-finally construct. The finally makes sure that no matter how the function invocation ends, the cleanup is called:

const disposerFn = async (fn) => {
  // setup param
  try {
    return await fn(param);
  } finally {
    // cleanup param
  }
};

const result = await disposerFn(async (param) => {
  // use param
});

This makes auto-cleanup the default without thinking about it. And since it's an async function, it supports async/await and Promises too.
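To make the ordering concrete, here is a runnable sketch of the pattern with a fake resource that only records what happens to it. The names withResource, withLogged, events, and demo are made up for this illustration.

```javascript
// Generic disposer: `setup` creates the resource, `cleanup` releases it
const withResource = (setup, cleanup) => async (fn) => {
  const resource = await setup();
  try {
    return await fn(resource);
  } finally {
    await cleanup(resource);
  }
};

// A fake resource that only records the order of events
const events = [];
const withLogged = withResource(
  async () => { events.push("setup"); return "resource"; },
  async () => { events.push("cleanup"); }
);

const demo = async () => {
  // Normal completion: setup, use, cleanup
  await withLogged(async () => { events.push("use"); });
  // Failure: cleanup still runs before the error reaches the caller
  await withLogged(async () => { throw new Error("failed"); })
    .catch(() => events.push("caught"));
  return events;
};
```

In the failing call, "cleanup" is recorded before "caught", which is the guarantee the finally block provides.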

The tmp library goes to great lengths to also delete the files when the process exits. This closes a rare case where shutting down the app leaves tempfiles behind, as the finally does not run then. But it's still not a bulletproof solution, as a killed app can't clean up.
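For illustration, exit-time cleanup could be approached along these lines. This is a sketch under assumptions, not how tmp implements it: it needs Node 14.14 or later for fs.rmSync and fs.promises.rm, and the names liveDirs, removeLiveDirs, and withTrackedTempDir are hypothetical.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Temp directories that currently exist and still need cleanup
const liveDirs = new Set();

// "exit" listeners must be synchronous, hence rmSync
const removeLiveDirs = () => {
  for (const dir of liveDirs) {
    try {
      fs.rmSync(dir, {recursive: true, force: true});
    } catch (e) {
      // best effort: nothing useful can be done during shutdown
    }
  }
  liveDirs.clear();
};
process.on("exit", removeLiveDirs);

// Like withTempDir, but the directory is also registered for
// removal at process exit while it is in use
const withTrackedTempDir = async (fn) => {
  const dir = await fs.promises.mkdtemp(
    (await fs.promises.realpath(os.tmpdir())) + path.sep
  );
  liveDirs.add(dir);
  try {
    return await fn(dir);
  } finally {
    await fs.promises.rm(dir, {recursive: true, force: true});
    // Unregister only after the removal succeeded
    liveDirs.delete(dir);
  }
};
```

This covers a call to process.exit() while a temp directory is in use, but a process that is killed outright never gets to run the listener.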

How to delete directories

The rimraf library is the de facto standard to recursively and reliably delete a directory. It does not seem to do much apart from calling rm -rf, but there are surprisingly many edge cases it needs to handle.

But starting from Node 12, it is built into the platform as the fs.rmdir(path, {recursive: true}) function. Interestingly, it's rimraf simply copied.

fs.rmdir(dir, {recursive: true});
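Newer Node versions (14.14 and later) also provide fs.rm, and the recursive option of fs.rmdir has since been deprecated in its favor. A small sketch of a helper that prefers fs.rm when it exists and falls back otherwise; the name removeDir is an assumption:

```javascript
const fs = require("fs").promises;

// Removes a directory tree, preferring fs.rm where it is available
// and falling back to the recursive fs.rmdir on older Node versions
const removeDir = (dir) =>
  typeof fs.rm === "function"
    ? fs.rm(dir, {recursive: true, force: true})
    : fs.rmdir(dir, {recursive: true});
```

With force: true, fs.rm does not fail when the directory is already gone, which is convenient for cleanup code.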

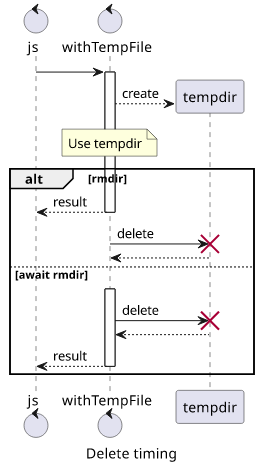

To await or not to await

Libraries that do cleanup usually use await to make sure the files are deleted before returning. I believe there is no need to wait for the cleanup; it's enough to make sure it will be done eventually.

The more significant difference is whether a cleanup error should make the call unsuccessful or be treated as an uncaught Promise rejection.
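The two options can be put side by side. This is a sketch assuming Node 14.14 or later for fs.rm; the names makeTempDir, withTempDirStrict, and withTempDirBackground are hypothetical. The background variant attaches a catch handler, because on recent Node versions an unhandled rejection terminates the process by default.

```javascript
const fs = require("fs").promises;
const os = require("os");
const path = require("path");

const makeTempDir = async () =>
  fs.mkdtemp((await fs.realpath(os.tmpdir())) + path.sep);

// Variant 1: wait for the cleanup; a failed cleanup rejects the call
const withTempDirStrict = async (fn) => {
  const dir = await makeTempDir();
  try {
    return await fn(dir);
  } finally {
    await fs.rm(dir, {recursive: true, force: true});
  }
};

// Variant 2: start the cleanup in the background and only log failures
const withTempDirBackground = async (fn) => {
  const dir = await makeTempDir();
  try {
    return await fn(dir);
  } finally {
    fs.rm(dir, {recursive: true, force: true})
      .catch((e) => console.error(`Failed to remove ${dir}`, e));
  }
};
```

The strict variant guarantees the directory is gone when the call returns, at the cost of some latency and of cleanup errors surfacing to the caller. The background variant returns sooner and keeps cleanup problems out of the caller's way.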

Conclusion

Using tempfiles should be a last resort, and it's better to use pipes in most cases. But sometimes they are inevitable, and in that case, make sure you take into account all the nuances, especially when potentially sensitive data is involved.