How to use the AWS Lambda streaming response type

It enables faster responses as well as a higher limit on the response body size

Lambda response type

Lambda recently added support for streaming responses, which let functions send data to clients while they are still running. Originally, the function's response was sent to the client only after it was fully available. This is called the "buffered" mode, as the Lambda runtime buffers the response.

Buffered response

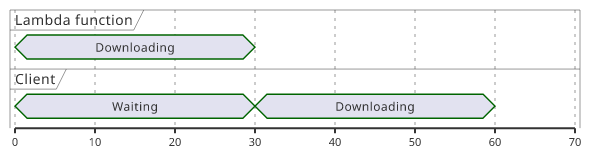

To see how buffering can increase latency, consider a function that reads an object stored in S3 and returns it. In the buffered case, the function needs to read the whole object, return it to the Lambda runtime, and only then can the client start receiving the bytes.

```js
import { S3Client, GetObjectCommand } from "@aws-sdk/client-s3";
import { buffer } from "node:stream/consumers";

export const handler = async (event) => {
  // Hypothetical: the object key is read from a "file" query parameter
  const file = event.queryStringParameters?.file;

  const client = new S3Client();
  const res = await client.send(new GetObjectCommand({
    Bucket: process.env.Bucket,
    Key: file,
  }));

  // The whole object is read into memory before anything is returned
  return {
    statusCode: 200,
    headers: {
      "Content-Type": res.ContentType,
    },
    body: (await buffer(res.Body)).toString("base64"),
    isBase64Encoded: true,
  };
};
```

Streaming response

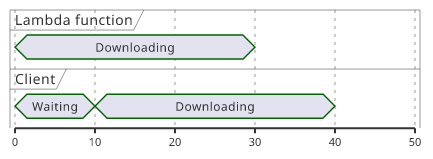

A streaming response is different: the client gets the response as soon as it becomes available to the function. In the S3 example above, the function can push the first bytes as soon as it receives them, without waiting for the whole file.

```js
import { S3Client, GetObjectCommand } from "@aws-sdk/client-s3";
import { pipeline } from "node:stream/promises";

export const handler = awslambda.streamifyResponse(async (event, responseStream) => {
  // Hypothetical: the object key is read from a "file" query parameter
  const file = event.queryStringParameters?.file;

  const client = new S3Client();
  const res = await client.send(new GetObjectCommand({
    Bucket: process.env.Bucket,
    Key: file,
  }));

  // Pipe the object body to the client chunk by chunk as it arrives from S3
  await pipeline(res.Body, awslambda.HttpResponseStream.from(responseStream, {
    statusCode: 200,
    headers: {
      "Content-Type": res.ContentType,
    },
  }));
});
```
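The difference in when the client sees its first byte can be reproduced without Lambda or S3. The following is a minimal local simulation for Node.js 18 or later, assuming a hypothetical slowSource generator as a stand-in for the S3 object body (five 1 KiB chunks, one every 50 ms). deliverBuffered and deliverStreamed are illustrative names, not Lambda APIs: the first hands the body over only once it is complete, the second forwards every chunk as soon as it is read.

```js
// Hypothetical slow source standing in for the S3 object body:
// yields `count` chunks of 1 KiB, one every `delayMs` milliseconds.
async function* slowSource(count, delayMs) {
  for (let i = 0; i < count; i++) {
    await new Promise((resolve) => setTimeout(resolve, delayMs));
    yield Buffer.alloc(1024, i);
  }
}

// Buffered: read the whole body first, then deliver it in one piece.
async function deliverBuffered(source, send) {
  const chunks = [];
  for await (const chunk of source) chunks.push(chunk);
  send(Buffer.concat(chunks));
}

// Streamed: deliver every chunk as soon as it is read.
async function deliverStreamed(source, send) {
  for await (const chunk of source) send(chunk);
}

// Record when the simulated client receives its first byte and when it is done.
async function measure(deliver) {
  const start = performance.now();
  let firstByteMs = null;
  let bytes = 0;
  await deliver(slowSource(5, 50), (chunk) => {
    if (firstByteMs === null) firstByteMs = performance.now() - start;
    bytes += chunk.length;
  });
  return { firstByteMs, totalMs: performance.now() - start, bytes };
}
```

Running Promise.all([measure(deliverBuffered), measure(deliverStreamed)]).then(console.log) should show the buffered first byte arriving after roughly 250 ms and the streamed one after roughly 50 ms. Delivery is instantaneous in this model, so only the time to first byte differs; it does not capture the upload to the client overlapping with the download from S3.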

Streaming vs buffering

This setup provides multiple benefits over the buffered approach.

First, with streaming the whole file does not need to fit into the memory of the Lambda function. As bytes arrive from the GetObject operation, they are pushed to the response stream and can then be discarded by the function.

Second, reading and writing are interleaved in time, which shortens response times. Instead of the file first downloading fully to the Lambda function and only then to the client, the two transfers happen in parallel.

Third, the client gets the first bytes sooner. This is especially important when it can do something useful with partial responses. For example, an image might be shown in a lower resolution while the file is still downloading, which is a better user experience.

And finally, the Lambda runtime provides a higher limit for the response size in this mode. While a buffered response can only go up to 6 MB, streaming supports up to 20 MB.
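To see why a 4.4 MB file can still be returned in buffered mode while a 13.8 MB one cannot, note that the buffered handler above returns the body base64-encoded, which grows it by about a third. A rough sketch of that check, assuming the limits mean 6 MiB and 20 MiB and ignoring the rest of the JSON response, which also counts toward the buffered payload; base64Length and fitsIn are hypothetical helper names:

```js
// Limits from the article, assumed here to be MiB (1 MiB = 1024 * 1024 bytes).
const BUFFERED_LIMIT_BYTES = 6 * 1024 * 1024;
const STREAMING_LIMIT_BYTES = 20 * 1024 * 1024;

// Base64 encodes every started group of 3 bytes as 4 characters.
function base64Length(bytes) {
  return 4 * Math.ceil(bytes / 3);
}

// Rough check of which response mode can return a file of `bytes` bytes.
// The buffered handler returns the body base64-encoded; the streaming one
// pipes the raw bytes. The rest of the JSON response is ignored here.
function fitsIn(bytes) {
  return {
    buffered: base64Length(bytes) <= BUFFERED_LIMIT_BYTES,
    streaming: bytes <= STREAMING_LIMIT_BYTES,
  };
}
```

Under these assumptions, the 4.4 MB file becomes about 5.87 MiB after encoding, just under the buffered limit, while the 13.8 MB file becomes about 18.4 MiB and only fits the streaming limit.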

Implementation

To use streaming responses, wrap the handler function with awslambda.streamifyResponse, which is available as a global in the Node.js runtime. The wrapped handler receives the responseStream as its second argument:

export const handler = awslambda.streamifyResponse(async (event, responseStream) => {

// ...

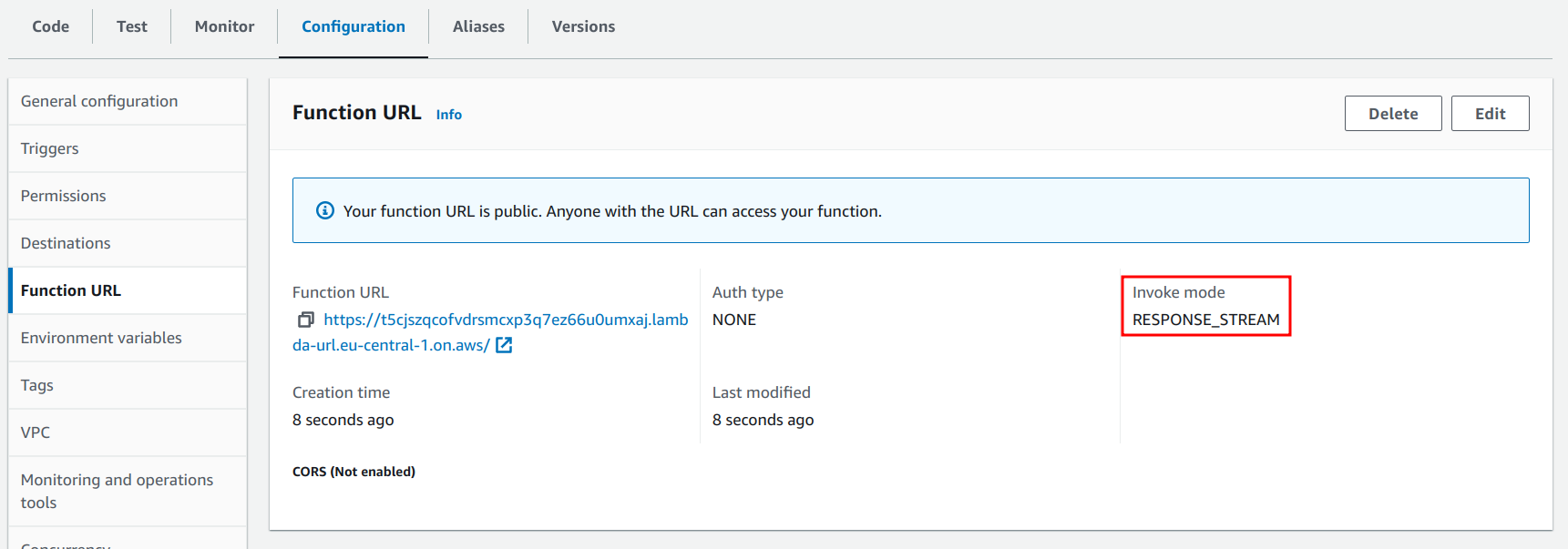

});Next, the function URL needs to know in which mode to call the function:

resource "aws_lambda_function_url" "streaming" {

function_name = aws_lambda_function.streaming.function_name

authorization_type = "NONE"

invoke_mode = "RESPONSE_STREAM"

}
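The S3 examples pipe an existing stream, but a handler can also write to responseStream directly. A minimal sketch, assuming text generated inside the function; the streamify helper is hypothetical and only falls back to the unwrapped function when the awslambda global is missing, for example when calling the handler from plain Node.js for a quick local test. In the deployed module, the handler would be exported as in the examples above.

```js
// Inside the Lambda Node.js runtime `awslambda` is a global. Outside it the
// global is missing, so this hypothetical helper returns the plain function.
const streamify = (fn) =>
  globalThis.awslambda ? globalThis.awslambda.streamifyResponse(fn) : fn;

const pause = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Deployed as `export const handler = ...` in an ES module.
const handler = streamify(async (event, responseStream) => {
  for (let i = 1; i <= 3; i++) {
    // Each chunk is written before the next one is produced.
    responseStream.write(`chunk ${i}\n`);
    await pause(100);
  }
  // Ending the stream completes the response.
  responseStream.end();
});
```

Locally, calling the handler with any object that has write and end methods shows the first chunk being written before the function has finished producing the rest.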

Headers and cookies

The buffered response type supports returning arbitrary headers and cookies. Streaming responses support them as well, through another utility function, awslambda.HttpResponseStream.from(responseStream, metadata):

```js
await pipeline(res.Body, awslambda.HttpResponseStream.from(responseStream, {
  statusCode: 200,
  headers: {
    "Cache-Control": "no-store",
    "Content-Type": res.ContentType,
  },
  cookies: ["testcookie=value"],
}));
```

awslambda.HttpResponseStream.from returns another writable stream, and that stream, not the original responseStream, must be used for writing the body.

The above code provides a generic structure for defining the response: res.Body is a readable stream that is piped into the response stream, and the status code, headers, and cookies are defined in the metadata parameter.

Tests

Let's run a couple of tests to see what speedup streaming provides over buffering!

For this, I'll use 3 test files:

- small: 0.2 MB

- medium: 4.4 MB

- large: 13.8 MB

For each of these files, I'll measure the total response time using the autocannon library and the time to first byte using ttfb.
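For reference, a rough sketch of what the two measurements mean, using the global fetch in Node.js 18 or later: the time until the first body byte arrives, and the time until the body is fully read. This is an illustration only, not how autocannon or ttfb measure internally; timeResponse is a hypothetical helper name.

```js
// Fetch `url` once and record, relative to the request start, when the
// headers arrived, when the first body byte arrived, and when the body ended.
async function timeResponse(url) {
  const start = performance.now();
  const res = await fetch(url);
  // fetch resolves once the status line and headers have arrived.
  const headersMs = performance.now() - start;
  let firstByteMs = null;
  let bytes = 0;
  if (res.body) {
    const reader = res.body.getReader();
    for (;;) {
      const { done, value } = await reader.read();
      if (done) break;
      if (value.byteLength === 0) continue;
      if (firstByteMs === null) firstByteMs = performance.now() - start;
      bytes += value.byteLength;
    }
  }
  return { status: res.status, headersMs, firstByteMs, totalMs: performance.now() - start, bytes };
}
```

For example, timeResponse(url).then(console.log) with one of the function URLs from the Terraform outputs prints both timings for a single request.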

Small (0.2 MB)

Buffered:

```
npx autocannon -c 1 $(terraform output -raw small_buffered)
┌─────────┬────────┬────────┬────────┬────────┬───────────┬───────────┬────────┐
│ Stat    │ 2.5%   │ 50%    │ 97.5%  │ 99%    │ Avg       │ Stdev     │ Max    │
├─────────┼────────┼────────┼────────┼────────┼───────────┼───────────┼────────┤
│ Latency │ 228 ms │ 302 ms │ 649 ms │ 649 ms │ 343.21 ms │ 102.41 ms │ 649 ms │
└─────────┴────────┴────────┴────────┴────────┴───────────┴───────────┴────────┘
```

Streaming:

```
npx autocannon -c 1 $(terraform output -raw small_streaming)
┌─────────┬────────┬────────┬─────────┬─────────┬───────────┬───────────┬─────────┐
│ Stat    │ 2.5%   │ 50%    │ 97.5%   │ 99%     │ Avg       │ Stdev     │ Max     │
├─────────┼────────┼────────┼─────────┼─────────┼───────────┼───────────┼─────────┤
│ Latency │ 196 ms │ 238 ms │ 1331 ms │ 1331 ms │ 299.25 ms │ 217.79 ms │ 1331 ms │
└─────────┴────────┴────────┴─────────┴─────────┴───────────┴───────────┴─────────┘
```

A second run showed less deviation:

```
┌─────────┬────────┬────────┬────────┬────────┬───────────┬──────────┬────────┐
│ Stat    │ 2.5%   │ 50%    │ 97.5%  │ 99%    │ Avg       │ Stdev    │ Max    │
├─────────┼────────┼────────┼────────┼────────┼───────────┼──────────┼────────┤
│ Latency │ 168 ms │ 205 ms │ 387 ms │ 415 ms │ 218.83 ms │ 52.07 ms │ 415 ms │
└─────────┴────────┴────────┴────────┴────────┴───────────┴──────────┴────────┘
```

The streaming numbers are slightly lower than the buffered ones, but I did not find much difference between the two.

TTFB:

```
npx ttfb -n 10 $(terraform output -raw small_buffered) $(terraform output -raw small_streaming)
... fastest .156445 slowest .303719 median .206308
... fastest .118235 slowest .179110 median .151772
```

Again, the numbers show a slight improvement over the buffered case, but nothing substantial.

Medium (4.4 MB)

Buffered:

```
npx autocannon -c 1 $(terraform output -raw medium_buffered)
┌─────────┬─────────┬─────────┬─────────┬─────────┬─────────┬───────┬─────────┐
│ Stat    │ 2.5%    │ 50%     │ 97.5%   │ 99%     │ Avg     │ Stdev │ Max     │
├─────────┼─────────┼─────────┼─────────┼─────────┼─────────┼───────┼─────────┤
│ Latency │ 3890 ms │ 3890 ms │ 4036 ms │ 4036 ms │ 3963 ms │ 73 ms │ 4036 ms │
└─────────┴─────────┴─────────┴─────────┴─────────┴─────────┴───────┴─────────┘
```

Streaming:

```
npx autocannon -c 1 $(terraform output -raw medium_streaming)
┌─────────┬─────────┬─────────┬─────────┬─────────┬───────────┬──────────┬─────────┐
│ Stat    │ 2.5%    │ 50%     │ 97.5%   │ 99%     │ Avg       │ Stdev    │ Max     │
├─────────┼─────────┼─────────┼─────────┼─────────┼───────────┼──────────┼─────────┤
│ Latency │ 1658 ms │ 1766 ms │ 2600 ms │ 2600 ms │ 2039.5 ms │ 368.6 ms │ 2600 ms │
└─────────┴─────────┴─────────┴─────────┴─────────┴───────────┴──────────┴─────────┘
```

This time the difference is very noticeable: even the fastest buffered request is slower than the slowest streaming one.

TTFB:

```
npx ttfb -n 10 $(terraform output -raw medium_buffered) $(terraform output -raw medium_streaming)
... fastest 2.09754 slowest 4.14310 median 2.490555
... fastest .132218 slowest .687806 median .145763
```

As expected, the TTFB did not increase with the file size in the streaming case. In the buffered case, the delay is very noticeable.

Large (13.8 MB)

Since a buffered response cannot return a file this large (it exceeds the 6 MB limit), there is no point comparing the response times, so I only ran the TTFB test.

```
npx ttfb -n 10 $(terraform output -raw large_streaming)
fastest .122149 slowest .183837 median .160531
```

Slow downloads

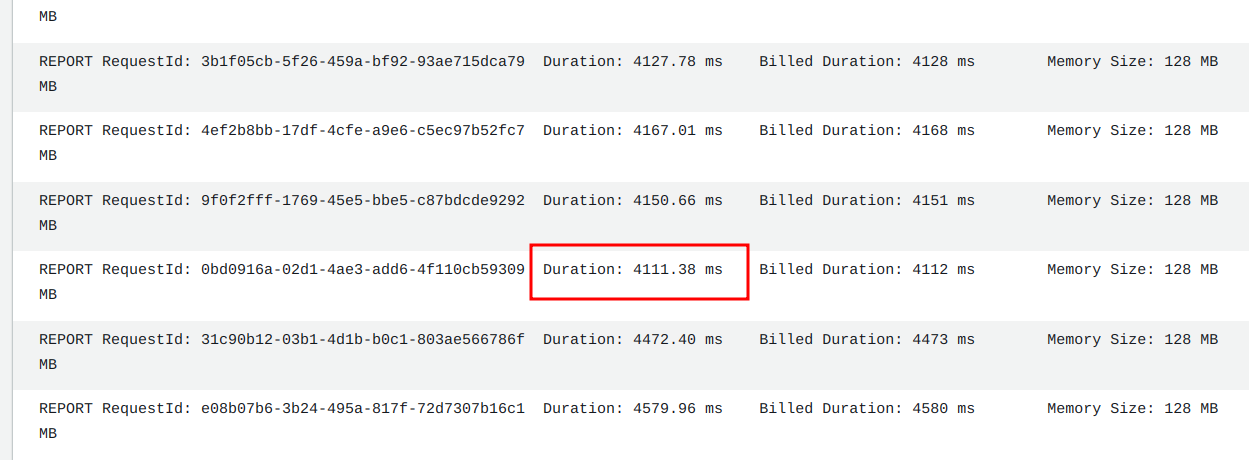

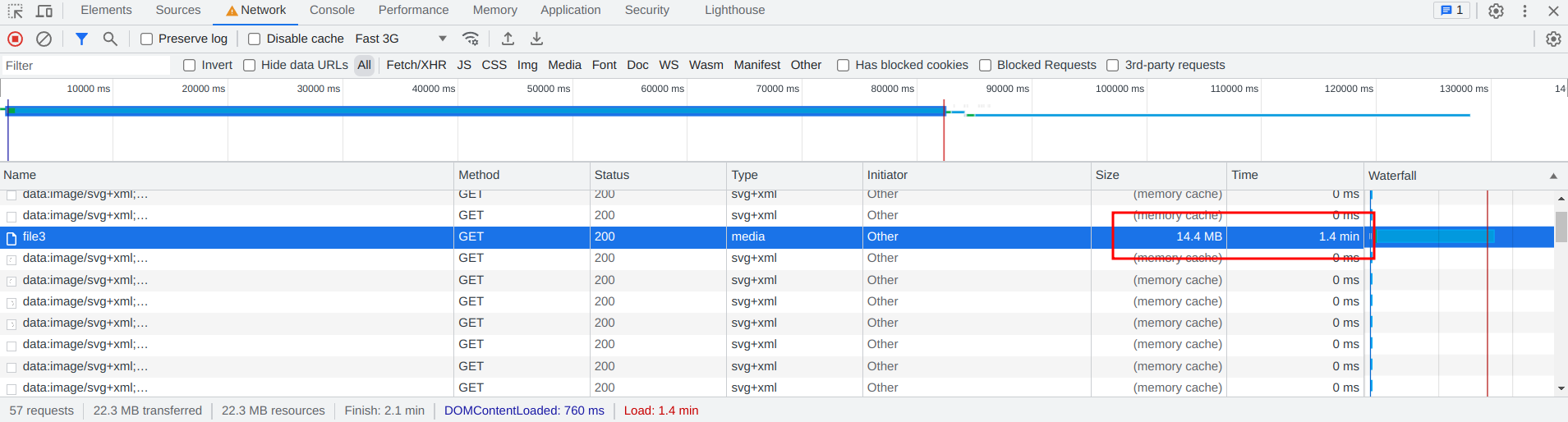

A Lambda function is sensitive to runtime both in terms of hitting a timeout and that a slow function is more expensive than quick ones. This prompted the question: what happens if the client that is consuming the response is slow? Would the function be stuck waiting for the output be consumed by the client?

Fortunately, no. The Lambda runtime does buffering in this case as well and the transfer time of the client does not affect the function execution.

In this case the download took more than a minute and still finished successfully, even though the function execution timeout was set to 30 seconds:

And the function's execution did not take longer than in other cases:
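The behavior described above can be illustrated with a toy model, which is not Lambda's actual implementation: an unbounded in-memory buffer (the hypothetical createUnboundedBuffer) sits between a producer standing in for the function and a slow consumer standing in for the client. The producer finishes almost immediately, while the consumer needs about half a second to work through five chunks at 100 ms each.

```js
// Toy model only: an unbounded queue between a producer and a consumer.
function createUnboundedBuffer() {
  const queue = [];
  let ended = false;
  let wake = null; // resolves a reader that is waiting for data
  const notify = () => {
    if (wake) {
      wake();
      wake = null;
    }
  };
  return {
    // Never blocks: the producer hands off its chunk and moves on.
    write(chunk) {
      queue.push(chunk);
      notify();
    },
    end() {
      ended = true;
      notify();
    },
    // The consumer pulls chunks at its own pace.
    async *read() {
      for (;;) {
        if (queue.length > 0) yield queue.shift();
        else if (ended) return;
        else await new Promise((resolve) => { wake = resolve; });
      }
    },
  };
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function simulateSlowClient() {
  const start = performance.now();
  const buf = createUnboundedBuffer();

  // "Function": produces five chunks right away, then finishes.
  const producer = (async () => {
    for (let i = 1; i <= 5; i++) buf.write(`chunk ${i}`);
    buf.end();
    return performance.now() - start;
  })();

  // "Client": needs 100 ms to process each chunk.
  const consumer = (async () => {
    const received = [];
    for await (const chunk of buf.read()) {
      received.push(chunk);
      await sleep(100);
    }
    return { ms: performance.now() - start, received };
  })();

  const [functionMs, client] = await Promise.all([producer, consumer]);
  return { functionMs, clientMs: client.ms, received: client.received };
}
```

The unbounded buffer is what decouples the two sides in this model; with a bounded buffer and backpressure, the producer would instead be held back by the slow consumer.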