How to use async functions with Array.map in JavaScript

Return Promises in map and wait for the results

In the first article, we covered how async/await helps with async commands, but it offers little help when it comes to asynchronously processing collections. In this post, we'll look into the map function, which is the most commonly used collection function, as it transforms data from one form to another.

The map function

The map function is the simplest and most common collection function. It runs each element through an iteratee function and returns an array with the results.

The synchronous version that adds one to each element:

const arr = [1, 2, 3];

const syncRes = arr.map((i) => {

return i + 1;

});

console.log(syncRes);

// 2,3,4

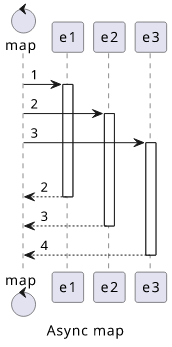

An async version needs to do two things. First, it needs to map every item to a Promise with the new value, which is what adding async before the function does.

Second, it needs to wait for all the Promises and then collect the results in an Array. Fortunately, the Promise.all built-in is exactly what we need for step 2.

This makes the general pattern of an async map Promise.all(arr.map(async (...) => ...)).

An async implementation doing the same as the sync one:

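// sleep is not defined in the article; a minimal setTimeout-based helper is assumed here

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

const arr = [1, 2, 3];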

const asyncRes = await Promise.all(arr.map(async (i) => {

await sleep(10);

return i + 1;

}));

console.log(asyncRes);

// 2,3,4
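
To see why the second step matters, the sketch below compares what map returns on its own with what Promise.all makes of it. The sleep helper and the function names (addOneAsync, mapWithoutAll, mapWithAll) are assumptions made for illustration, not part of the original example.

```javascript
// Assumed helper: resolves after roughly `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// The async iteratee from the example above, given a name.
const addOneAsync = async (i) => {
  await sleep(10);
  return i + 1;
};

// Without Promise.all, map returns an array of pending Promises, not values.
const mapWithoutAll = (arr) => arr.map(addOneAsync);

// With Promise.all, the Promises are collected into a single Promise
// that resolves to the results in the original order.
const mapWithAll = (arr) => Promise.all(arr.map(addOneAsync));

mapWithAll([1, 2, 3]).then((res) => console.log(res)); // [ 2, 3, 4 ]
```

Forgetting Promise.all is a common slip here, because the code still runs and only the shape of the result changes.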

Concurrency

The above implementation starts the iteratee function for every element of the array at once, so all of them run concurrently. This is usually fine, but in some cases it might consume too many resources. This can happen when the async function hits an API or uses so much RAM that it's not feasible to run too many at once.

While an async map is easy to write, adding concurrency controls is more involved. In the next few examples, we'll look into different solutions.
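
To make the problem concrete, the following sketch counts how many iteratee calls overlap when the plain async map is used. The measurePeak function and the sleep helper are hypothetical names introduced only for this illustration.

```javascript
// Assumed helper: resolves after roughly `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Runs the plain async map and reports the highest number of
// iteratee calls that were in flight at the same time.
const measurePeak = async (arr) => {
  let running = 0;
  let peak = 0;
  const results = await Promise.all(arr.map(async (v) => {
    running += 1;
    peak = Math.max(peak, running);
    await sleep(v);
    running -= 1;
    return v + 1;
  }));
  return { results, peak };
};

measurePeak([30, 10, 20, 20, 15, 20, 10]).then(({ peak }) => {
  console.log(peak); // 7: every element is started before any of them finishes
});
```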

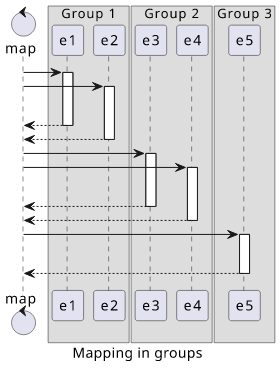

Batch processing

The easiest way is to group elements and process the groups one by one. This gives you control of the maximum number of parallel tasks that can run at once. But since one group has to finish before the next one starts, the slowest element in each group becomes the limiting factor.

To make groups, the example below uses the groupBy implementation from Underscore.js. Many libraries provide an implementation and they are mostly interchangeable. The exception is Lodash, as its groupBy does not pass the index of the item.

If you are not familiar with groupBy, it runs each element through an iteratee function and returns an object with the keys as the results and the values as lists of the elements that produced that value.

To make groups of at most n elements, an iteratee Math.floor(i / n), where i is the index of the element, will do. As an example, a group size of 3 will map the element indices to groups like this:

0 => 0

1 => 0

2 => 0

3 => 1

4 => 1

5 => 1

6 => 2

...

In JavaScript:

const arr = [30, 10, 20, 20, 15, 20, 10];

console.log(

_.groupBy(arr, (_v, i) => Math.floor(i / 3))

);

// {

// 0: [30, 10, 20],

// 1: [20, 15, 20],

// 2: [10]

// }

The last group might be smaller than the others, but all groups are guaranteed not to exceed the maximum group size.
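
If adding a library only for grouping is not desirable, the same split can be sketched in plain JavaScript. The chunk helper below is a hypothetical stand-in, not Underscore's groupBy; it returns an array of groups instead of an object, so Object.values is not needed afterwards.

```javascript
// Hypothetical helper (not part of Underscore.js): splits an array into
// consecutive groups of at most `size` elements.
const chunk = (arr, size) => {
  const groups = [];
  for (let i = 0; i < arr.length; i += size) {
    groups.push(arr.slice(i, i + size));
  }
  return groups;
};

console.log(chunk([30, 10, 20, 20, 15, 20, 10], 3));
// [ [ 30, 10, 20 ], [ 20, 15, 20 ], [ 10 ] ]
```

The sketch assumes size is a positive integer; a size of 0 would make the loop run forever.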

To map one group, the usual Promise.all(group.map(...)) construct is fine.

To map the groups sequentially, we need a reduce that concatenates the previous results (memo) with the results of the current group:

return Object.values(groups)

.reduce(async (memo, group) => [

...(await memo),

...(await Promise.all(group.map(iteratee)))

], []);

This implementation relies on the fact that await memo, which waits for the previous result, completes before the current group is started.
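
The ordering can be checked with a small trace. In the sketch below, the reduce has the same shape but is written out with an event log; traceReduce, the event names, and the sleep helper are assumptions added for illustration. reduce calls every callback right away, yet each callback pauses at await memo until the earlier groups are done.

```javascript
// Assumed helper: resolves after roughly `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Same reduce shape as above, with an event log to show the ordering.
const traceReduce = async (groups) => {
  const events = [];
  const results = await groups.reduce(async (memo, group, idx) => {
    events.push(`enter ${idx}`); // runs immediately for every group
    const previous = await memo; // pauses until earlier groups are done
    events.push(`start ${idx}`);
    const current = await Promise.all(group.map(async (v) => {
      await sleep(v);
      return v + 1;
    }));
    events.push(`done ${idx}`);
    return [...previous, ...current];
  }, []);
  return { events, results };
};

traceReduce([[30, 10, 20], [20, 15, 20], [10]]).then(({ events }) => {
  console.log(events.join(", "));
  // enter 0, enter 1, enter 2, start 0, done 0, start 1, done 1, start 2, done 2
});
```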

The full implementation of batching:

const arr = [30, 10, 20, 20, 15, 20, 10];

const mapInGroups = (arr, iteratee, groupSize) => {

const groups = _.groupBy(arr, (_v, i) => Math.floor(i / groupSize));

return Object.values(groups)

.reduce(async (memo, group) => [

...(await memo),

...(await Promise.all(group.map(iteratee)))

], []);

};

const res = await mapInGroups(arr, async (v) => {

console.log(`S ${v}`);

await sleep(v);

console.log(`F ${v}`);

return v + 1;

}, 3);

// -- first batch --

// S 30

// S 10

// S 20

// F 10

// F 20

// F 30

// -- second batch --

// S 20

// S 15

// S 20

// F 15

// F 20

// F 20

// -- third batch --

// S 10

// F 10

console.log(res);

// 31,11,21,21,16,21,11

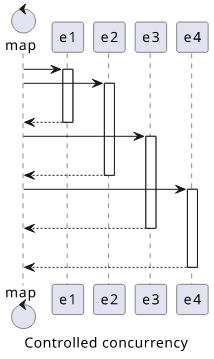

Parallel processing

Another type of concurrency control is to run at most n tasks in parallel, and start a new one whenever one is finished.

I couldn't come up with a simple implementation for this, but fortunately, the Bluebird library provides one out of the box. This makes it straightforward: just import the library and use the Promise.map function, which supports a concurrency option.

In the example below the concurrency is limited to 2, which means 2 tasks are started immediately, then whenever one is finished, a new one is started until there are none left:

const arr = [30, 10, 20, 20, 15, 20, 10];

// Bluebird promise

const res = await Promise.map(arr, async (v) => {

console.log(`S ${v}`);

await sleep(v);

console.log(`F ${v}`);

return v + 1;

}, {concurrency: 2});

// S 30

// S 10

// F 10

// S 10

// F 30

// S 20

// F 10

// S 15

// F 20

// S 20

// F 15

// S 20

// F 20

// F 20

console.log(res);

// 31,11,21,21,16,21,11
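
For readers who would rather avoid the dependency, one possible sketch without Bluebird is a small pool of workers that each pull the next index until none are left. This is not Bluebird's implementation; mapWithConcurrency and the sleep helper are hypothetical names, and the sketch assumes concurrency is at least 1.

```javascript
// Assumed helper: resolves after roughly `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper (not Bluebird's implementation): maps `arr` through
// `iteratee`, keeping at most `concurrency` calls in flight.
const mapWithConcurrency = async (arr, iteratee, concurrency) => {
  const results = new Array(arr.length);
  let next = 0;
  // Each worker repeatedly claims the next unprocessed index. Claiming is
  // safe because there is no await between reading and incrementing `next`.
  const worker = async () => {
    while (next < arr.length) {
      const i = next;
      next += 1;
      results[i] = await iteratee(arr[i], i);
    }
  };
  const workerCount = Math.min(concurrency, arr.length);
  await Promise.all(Array.from({ length: workerCount }, worker));
  return results;
};

mapWithConcurrency([30, 10, 20, 20, 15, 20, 10], async (v) => {
  await sleep(v);
  return v + 1;
}, 2).then((res) => console.log(res.join(","))); // 31,11,21,21,16,21,11
```

Results are stored by index, so the output keeps the input order even though tasks finish out of order. If an iteratee rejects, the returned Promise rejects, but the other workers keep going until they run out of elements; a production version would likely want to stop them.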

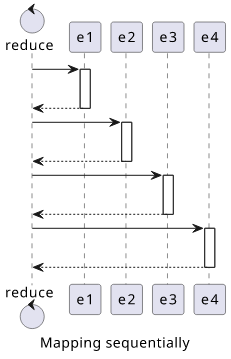

Sequential processing

Sometimes any concurrency is too much and the elements should be processed one after the other.

A trivial implementation is to use Bluebird's Promise.map with a concurrency of 1. But this case does not warrant including a library, as a simple reduce would do the job:

const arr = [1, 2, 3];

const res = await arr.reduce(async (memo, v) => {

const results = await memo;

console.log(`S ${v}`);

await sleep(10);

console.log(`F ${v}`);

return [...results, v + 1];

}, []);

// S 1

// F 1

// S 2

// F 2

// S 3

// F 3

console.log(res);

// 2,3,4

Make sure to await the memo before awaiting anything else; otherwise the iterations will still run concurrently!
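
The difference is easy to miss because both orderings return the same array. The sketch below counts overlapping calls for each ordering; makeCounter, sequential, accidentallyConcurrent, and the sleep helper are hypothetical names used only for this comparison.

```javascript
// Assumed helper: resolves after roughly `ms` milliseconds.
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Builds an iteratee that records how many of its calls overlap.
const makeCounter = () => {
  const stats = { running: 0, peak: 0 };
  const work = async (v) => {
    stats.running += 1;
    stats.peak = Math.max(stats.peak, stats.running);
    await sleep(10);
    stats.running -= 1;
    return v + 1;
  };
  return { stats, work };
};

// Correct: wait for the previous results before starting any work.
const sequential = async (arr) => {
  const { stats, work } = makeCounter();
  const results = await arr.reduce(async (memo, v) => {
    const previous = await memo;
    return [...previous, await work(v)];
  }, []);
  return { results, peak: stats.peak };
};

// Incorrect: the work starts before the memo is awaited, so every
// callback begins its work as soon as reduce calls it.
const accidentallyConcurrent = async (arr) => {
  const { stats, work } = makeCounter();
  const results = await arr.reduce(async (memo, v) => {
    const value = await work(v);
    return [...(await memo), value];
  }, []);
  return { results, peak: stats.peak };
};

sequential([1, 2, 3]).then(({ peak }) => console.log(peak)); // 1
accidentallyConcurrent([1, 2, 3]).then(({ peak }) => console.log(peak)); // 3
```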

Conclusion

The map function is easy to convert to async as the Promise.all built-in does the heavy lifting. But controlling concurrency requires some planning.

An example is available on GistRun
