How to define Lambda code with Terraform

Terraform offers multiple ways to upload code to Lambda. Which one should you choose?

Background

Terraform's archive_file is a versatile data source that can create a zip file to feed into Lambda. It offers several ways to define the files inside the archive and several attributes for consuming it.

Its API is relatively simple. You define the data source with the zip type and an output filename that is usually a temporary file:

```hcl
# define a zip archive
data "archive_file" "lambda_zip" {
  type        = "zip"
  output_path = "/tmp/lambda.zip"

  # ... define the source
}
```

Then you can use the output_path for the file itself and the output_base64sha256 (or one of the similar attributes) to detect changes to the contents:

```hcl
# use the outputs
resource "..." {
  filename = data.archive_file.lambda_zip.output_path
  hash     = data.archive_file.lambda_zip.output_base64sha256
}
```

Let's see a few examples of how to define the contents of the archive and how to attach it to a Lambda function!

Attach directly

First, let's see the cases where the archive is attached as a deployment package to the aws_lambda_function's filename attribute.

Add individual files

These examples add a few individual files to the archive, which is useful for small functions.

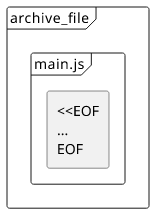

Inline source

If you have only a few files to upload and they are small, you can include them directly in the Terraform module.

This works by including one or more source blocks and using the heredoc syntax to define the code. It is convenient for functions that are only a couple of lines long.

```hcl
data "archive_file" "lambda_zip_inline" {
  type        = "zip"
  output_path = "/tmp/lambda_zip_inline.zip"

  source {
    content  = <<EOF
module.exports.handler = async (event) => {
  const what = "world";
  const response = `Hello $${what}!`;
  return response;
};
EOF
    filename = "main.js"
  }
}

resource "aws_lambda_function" "lambda_zip_inline" {
  filename         = data.archive_file.lambda_zip_inline.output_path
  source_code_hash = data.archive_file.lambda_zip_inline.output_base64sha256

  # ...
}
```

Make sure that the output_path is unique inside the module. If multiple archive_file data sources write to the same path, they can overwrite each other.

Terraform interpolation works inside the heredoc, and it uses the same ${...} syntax as JavaScript template literals. To pass a ${...} through to JavaScript untouched, escape it on the Terraform side as $${...}.

The Lambda function needs the source_code_hash argument to detect when the zip file has changed. Terraform does not check the contents of the file passed in filename, which is why a separate argument is needed.
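The examples in this article elide the function's other required arguments with # .... For illustration only, here is one way to complete the inline example. It assumes a Node.js runtime, and the execution role and function name are hypothetical. Pick a runtime that Lambda currently supports.

```hcl
# Hypothetical execution role that the Lambda service is allowed to assume
resource "aws_iam_role" "lambda_exec" {
  name = "lambda-zip-inline-exec" # hypothetical name

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "lambda.amazonaws.com" }
    }]
  })
}

# The inline example's function with the elided arguments filled in
resource "aws_lambda_function" "lambda_zip_inline" {
  function_name = "lambda-zip-inline" # hypothetical name
  role          = aws_iam_role.lambda_exec.arn
  runtime       = "nodejs20.x"   # assumption: any supported Node.js runtime
  handler       = "main.handler" # main.js, exported "handler" function

  filename         = data.archive_file.lambda_zip_inline.output_path
  source_code_hash = data.archive_file.lambda_zip_inline.output_base64sha256
}
```

This role grants no permissions, so the function runs but cannot write CloudWatch logs unless you also attach a policy such as AWSLambdaBasicExecutionRole.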

You can add multiple files with multiple source blocks.
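Here is a sketch with two source blocks, where main.js requires a helper module from a second file in the same archive. The greet.js file and its greet function are hypothetical.

```hcl
data "archive_file" "lambda_zip_multi" {
  type        = "zip"
  output_path = "/tmp/lambda_zip_multi.zip"

  # Entry point: the handler delegates to the helper in greet.js
  source {
    content  = <<EOF
const { greet } = require("./greet");

module.exports.handler = async (event) => greet("world");
EOF
    filename = "main.js"
  }

  # Second file; note the escaped $${what} in the JavaScript template literal
  source {
    content  = <<EOF
module.exports.greet = (what) => `Hello $${what}!`;
EOF
    filename = "greet.js"
  }
}
```

Both files end up at the root of the zip, so the handler is still main.handler.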

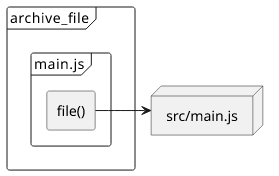

file() interpolation

When you have longer functions, embedding them directly in Terraform code becomes unwieldy. If you still only have a few files, you can use the file() function, which reads a file's contents from the filesystem.

```hcl
data "archive_file" "lambda_zip_file_int" {
  type        = "zip"
  output_path = "/tmp/lambda_zip_file_int.zip"

  source {
    # path.module resolves relative to this module, not the working directory
    content  = file("${path.module}/src/main.js")
    filename = "main.js"
  }
}

resource "aws_lambda_function" "lambda_file_int" {
  filename         = data.archive_file.lambda_zip_file_int.output_path
  source_code_hash = data.archive_file.lambda_zip_file_int.output_base64sha256

  # ...
}
```

This way there is no need to escape ${...}: file() returns the contents verbatim and does not evaluate any interpolation sequences in them.

You might be tempted to use the templatefile() function to inject configuration values into the code, but don't. Use environment variables for that.
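Here is a minimal sketch of that approach that reuses the archive from the file() example. The value is set in the function's environment block, and the code reads it at runtime through process.env. The variable name GREETING_TARGET is hypothetical.

```hcl
resource "aws_lambda_function" "lambda_env" {
  filename         = data.archive_file.lambda_zip_file_int.output_path
  source_code_hash = data.archive_file.lambda_zip_file_int.output_base64sha256

  # Values live in the function configuration, not in the zipped code.
  # src/main.js would read it with: const what = process.env.GREETING_TARGET;
  environment {
    variables = {
      GREETING_TARGET = "world" # hypothetical variable
    }
  }

  # ...
}
```

Changing the value then updates only the function configuration. The archive and its hash stay the same.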

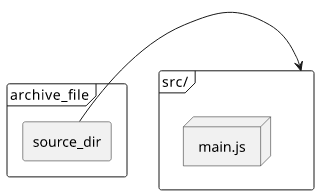

Add directories

With a more complex function, listing individual files stops being practical, especially once you use NPM and it generates a sizeable node_modules directory. To include a whole directory, use the source_dir argument:

```hcl
data "archive_file" "lambda_zip_dir" {
  type        = "zip"
  output_path = "/tmp/lambda_zip_dir.zip"
  source_dir  = "${path.module}/src"
}

resource "aws_lambda_function" "lambda_zip_dir" {
  filename         = data.archive_file.lambda_zip_dir.output_path
  source_code_hash = data.archive_file.lambda_zip_dir.output_base64sha256

  # ...
}
```

This works great for most projects, but the configuration options are limited. You cannot include more than one directory in an archive, and you also can't exclude subfolders or files (newer releases of the archive provider add an excludes argument for the latter).

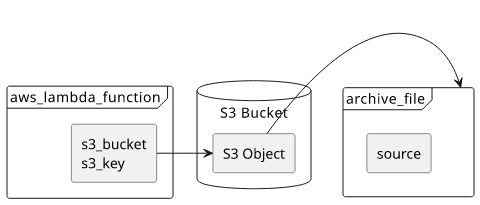

Use S3

Another possibility is to store the code in an S3 object instead of attaching it directly to the function. On the Lambda side, use the s3_bucket and s3_key arguments to reference the archive.

```hcl
# Random suffix for the object key (requires the hashicorp/random provider)
resource "random_id" "id" {
  byte_length = 8
}

resource "aws_s3_bucket" "bucket" {
  force_destroy = true
}

# Deprecated in favor of aws_s3_object in AWS provider v4 and later
resource "aws_s3_bucket_object" "lambda_code" {
  key    = "${random_id.id.hex}-object"
  bucket = aws_s3_bucket.bucket.id
  source = data.archive_file.lambda_zip_dir.output_path
  # S3 reports an MD5-based ETag (single-part uploads without KMS),
  # so compare it with the archive's MD5
  etag   = data.archive_file.lambda_zip_dir.output_md5
}

resource "aws_lambda_function" "lambda_s3" {
  s3_bucket        = aws_s3_bucket.bucket.id
  s3_key           = aws_s3_bucket_object.lambda_code.id
  source_code_hash = data.archive_file.lambda_zip_dir.output_base64sha256

  # ...
}
```

Notice that you need to add the archive hash in two places. First, Terraform needs to update the S3 object (etag), and then it needs to update the Lambda function (source_code_hash). If you omit either of them, the old code keeps running after an update.

Conclusion

After CloudFormation's awful package step, Terraform's archive_file is a blessing. No matter which approach you use, you'll end up with a speedy update process and, with just a bit of planning, no stale code.