Tips to debug NodeJS inside Docker

How to efficiently develop Dockerized NodeJS apps

When building a NodeJS app there are a lot of tools available to help with the development process. A single line can restart the code when anything is changed, files created by the app are easy to inspect, and there is a full-blown debugger baked into Chrome that can be attached to a running instance. With just a minimal amount of setup, when I add a console.log I see the result in a few seconds, or add a breakpoint and observe the internal state.

But every time I make the transition and move the app into Docker, it seems like all my tools are broken. Even simple things like using Ctrl-C to stop the app do not work, not to mention that the whole thing becomes opaque.

I usually start the development using only the host machine, without any further abstractions. But sooner or later I need a dependency that might not be installed, and I don't want to clutter the system I use daily. Or I want to fix the versions to make sure the setup is reproducible on different machines.

Dockerizing the app improves it architecturally, but I often feel that it's a giant step backwards in terms of developer productivity.

This article describes a few tips that bring the developer experience of a non-Dockerized app to one that runs inside a container.

Restart on change

The first thing every development environment needs is an easy way to restart the system under development to see the changes. With a NodeJS app running without any further abstractions this is provided out of the box: just press Ctrl-C, up arrow, then enter. This takes care of killing the app and restarting it.

But when it's running inside Docker this simple practice does not work anymore.

Let's see the causes and how to remedy them!

Build and run

While running a NodeJS app does not require a separate build step thanks to the interpreted nature of the language, Docker needs to build the image before it can be run. As a result, you need to use two commands instead of one. What's worse is that without a tag Docker identifies the newly built image only by a generated ID, and it's surprisingly hard to extract that and use it in the next command.

Fortunately, Docker supports image tags, which let you define a fixed name for the image that can be reused in the docker run command.

Using image tagging, this builds and runs the code in one command:

docker build -t test . && docker run ... test

Ctrl-C

Now that the container is running, it's time to stop it. Unfortunately, Ctrl-C does not work anymore. The first instinct I have in this situation is to open a new terminal window, use docker ps to find out the container ID, then use docker kill <id> or docker stop <id> to stop it. But this kills not only the container but all the productivity I had without Docker.

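The root cause is that NodeJS runs as PID 1 inside the container, and Linux does not apply the default signal actions to that process. Unless the app registers its own handlers, SIGINT (sent by Ctrl-C) and SIGTERM are ignored instead of shutting it down.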

Fortunately, the signals are available inside the app and it can handle them by shutting down. To restore the original behavior, capture the SIGINT and SIGTERM signals and exit the process:

["SIGINT", "SIGTERM"].forEach((signal) => process.on(signal, () => process.exit(0)));

For this to work, run the container with the -i flag. You might also want to remove this code in production.
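If the app holds resources such as an HTTP server, the same handlers can close them before exiting. The following is a minimal sketch, assuming a server object with a close(callback) method like the one http.createServer returns. The helper name exitOnSignals and the port in the usage comment are hypothetical, not part of NodeJS or the original setup.

```javascript
// Hypothetical helper: on SIGINT or SIGTERM, stop the server first,
// then exit the process with status 0.
function exitOnSignals(server, proc = process) {
  ["SIGINT", "SIGTERM"].forEach((signal) =>
    proc.on(signal, () => {
      // close() stops accepting new connections and calls back when done.
      server.close(() => proc.exit(0));
    })
  );
}

// Usage in the app's entry point (port 3000 is an assumption):
// const http = require("http");
// const server = http.createServer((req, res) => res.end("ok")).listen(3000);
// exitOnSignals(server);
```

Note that open connections can delay the close callback, so for a quick development restart the one-line version that exits immediately may still be preferable.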

Npm install

With the above tricks we are almost back to the restarting experience of the pre-Docker setup. But depending on how the Docker build is structured, the build process is possibly a lot slower than before. This happens when npm install runs for every build, even when no dependencies are changed.

A typical first iteration of a Dockerfile is to set up the environment, then add the code, then initialize it. In the case of a NodeJS app, the last step is usually to run npm ci.

COPY . .

RUN npm ci

While this script works and it faithfully reproduces how a non-Dockerized build runs, it is not optimal in terms of rebuilding.

Docker utilizes a build cache that stores the result of each step when it builds the Dockerfile. When the state before running a step is the same as when it was cached, Docker skips the step and uses the cached result. But when the COPY moves all the files into the image, the state before the RUN npm ci depends on all of the files. Even if you modify a single line in index.js, which has nothing to do with npm, Docker won't use the cache.

And the result is that rebuilding the image is painfully long.

The solution is to separate the package.json and the package-lock.json from the other parts of the codebase, as suggested in the docs. This allows Docker to use the cached result of RUN npm ci unless one of these two files has changed.

COPY package*.json ./

RUN npm ci

COPY . .

Also create a .dockerignore file and list node_modules in it. This makes sure that the dependencies installed on the host are not copied into the image.

node_modules

With this setup, npm ci will only run when needed and won't slow down the build process unnecessarily. This brings down the incremental build time to a few seconds, which is acceptable for development.
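To make the caching argument concrete, here is a toy model of it. It is not Docker's implementation, only a sketch under the assumption that a step's cache key depends on the previous step's key, the instruction text, and the contents of any copied files. The function cacheKeys and the file contents are made up for illustration.

```javascript
const crypto = require("crypto");

const sha = (text) => crypto.createHash("sha256").update(text).digest("hex");

// Toy model: each step's key is derived from the previous key, the
// instruction, and (for COPY steps) the contents of the copied files.
function cacheKeys(steps, files) {
  let previous = "base-image";
  return steps.map((step) => {
    const copied = (step.copies || [])
      .map((name) => `${name}:${files[name]}`)
      .join("|");
    previous = sha([previous, step.instruction, copied].join("\n"));
    return previous;
  });
}

const all = ["package.json", "package-lock.json", "index.js"];
const naive = [
  { instruction: "COPY . .", copies: all },
  { instruction: "RUN npm ci" },
];
const split = [
  { instruction: "COPY package*.json ./", copies: ["package.json", "package-lock.json"] },
  { instruction: "RUN npm ci" },
  { instruction: "COPY . .", copies: all },
];

// Only index.js changes between the two builds.
const before = { "package.json": "{}", "package-lock.json": "{}", "index.js": "v1" };
const after = { ...before, "index.js": "v2" };

// RUN npm ci is the step at index 1 in both layouts.
const naiveReused = cacheKeys(naive, before)[1] === cacheKeys(naive, after)[1];
const splitReused = cacheKeys(split, before)[1] === cacheKeys(split, after)[1];
console.log({ naiveReused, splitReused }); // { naiveReused: false, splitReused: true }
```

In the naive layout the change to index.js alters the key of the COPY step and therefore of everything after it, while in the split layout the npm ci step only sees the two package files.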

Auto-restarting

One of my favorite development tools is nodemon, as it saves me tons of time in 2-second increments. It watches the files and restarts the app when one of them changes. It does not sound groundbreaking, but those manual restarts that take 2 seconds each quickly add up.

It was designed to work with NodeJS projects, but it can be configured to support any setup. And it can also be used with npx so no prior installation is needed besides a recent npm.

To restart the container whenever a file is changed, use:

npx nodemon --signal SIGTERM -e "js,html" --exec 'docker build -t test . && docker run -i test'

The --signal flag defines that SIGTERM will be used to stop the app. Together with the code snippet that catches the signal and exits the process, this terminates the app.

The -e lists the file extensions to watch. Since nodemon has defaults with a NodeJS app in mind, you can use this to support other scenarios.

Then the --exec flag defines the command to run. In this case it builds and starts the container.

When using this command, every change results in a restart automatically. No more Ctrl-C, up arrow, enter needed.

Expose files

Another feature of Docker that hinders development is that it uses a separate filesystem. In a particular case the app I worked on used a lot of intermediary files in the /tmp directory. When the app was running on the host machine it was easy to inspect these files. With Docker, it's a bit more complicated.

Fortunately, there are multiple ways to regain visibility of the files.

Open a shell

The easiest way is to get a shell inside the container and use the standard Linux tools to inspect the files. Docker supports this natively.

First, use docker ps to get the container ID, then open a bash shell inside the container:

docker exec -it <container ID> bash

Copy the files to the host machine

While Linux tools are great to inspect files, they are insufficient in some cases. For example, when the file I'm interested in is a video then terminal-based tools are not enough. In this case, I need to transfer the files to the host in some way.

The easiest way is to mount a debug directory that is shared by the two systems. Any file copied there inside the container becomes available on the host.

This command creates a debug directory on the host and mounts it to /tmp/debug:

mkdir -p debug && \
npx nodemon --signal SIGTERM -e "js,html" --exec 'docker build -t test . && docker run -v $(pwd)/debug:/tmp/debug -i test'

With this setup, use cp in a shell inside the container to copy files there, or export them programmatically with fs.copyFile(<src>, path.join("/tmp", "debug", <filename>)).
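The programmatic export can be wrapped in a small helper. This is a sketch, assuming the /tmp/debug mount point from the command above. The function name exportForDebug and the DEBUG_DIR environment variable are hypothetical names introduced here for illustration.

```javascript
const fs = require("fs");
const path = require("path");

// Defaults to the mount point used in the docker run command.
// DEBUG_DIR is an assumed, optional override.
const DEBUG_DIR = process.env.DEBUG_DIR || path.join("/tmp", "debug");

// Hypothetical helper: copies one file into the shared debug directory
// under its original file name and returns the destination path.
async function exportForDebug(src, debugDir = DEBUG_DIR) {
  await fs.promises.mkdir(debugDir, { recursive: true });
  const dest = path.join(debugDir, path.basename(src));
  await fs.promises.copyFile(src, dest);
  return dest;
}

// Usage inside the app (the source path is made up):
// await exportForDebug("/tmp/intermediate/frame-001.png");
```

Since it keeps only the base name, two files with the same name from different directories would overwrite each other, which is usually acceptable for ad hoc debugging.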

Attach a debugger

It's also possible to get serious and attach a debugger, such as Chrome's built-in DevTools. For this to work, start the app with the --inspect flag:

CMD [ "node", "--inspect=0.0.0.0:9229", "index.js" ]

Using 0.0.0.0 makes the debugger listen on every network interface inside the container. By default it listens only on 127.0.0.1, which Docker's port forwarding cannot reach, so this is most likely needed because of Docker and not only because my development setup requires multiple port forwardings.

Make sure you understand the consequence: once the port is exposed, everyone who can connect to the host machine can attach to the debugger.

The --inspect instructs NodeJS to listen on the 9229 port for debuggers, but we also need to expose that from the container:

docker run -p 9229:9229 ...

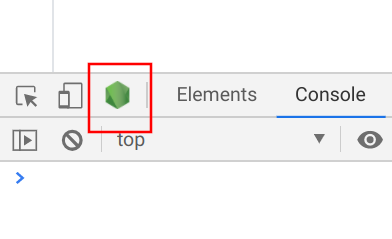

With these two configs, open Chrome and connect to the app. You can do this either by using the Node logo on the top left:

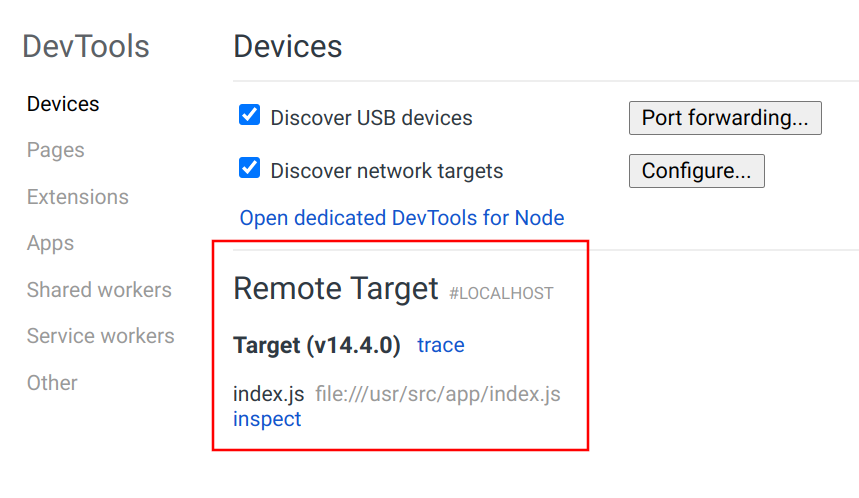

Or open chrome://inspect and select the app:

When the debugger is attached, you can set breakpoints, inspect runtime state, and do pretty much everything that you can for local apps running inside Chrome. I've found that stopping the execution at some point and then opening a shell inside the container to inspect the files is a powerful way to debug apps.
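As an alternative to changing the CMD, the debugger can also be opened from inside the app using NodeJS's built-in inspector module. The following is only a sketch of that different approach, not the setup described above. The APP_INSPECT environment variable and the maybeOpenInspector helper are hypothetical names, and the same warning about 0.0.0.0 applies.

```javascript
const inspector = require("inspector");

// Hypothetical helper: opens the inspector only when APP_INSPECT=1,
// so the same image can run with or without the debugger.
function maybeOpenInspector(env = process.env, insp = inspector) {
  if (env.APP_INSPECT !== "1") return false;
  // url() is undefined while no inspector is active, for example when
  // the process was started without --inspect.
  if (!insp.url()) {
    // Listen on all interfaces so Docker's port forwarding can reach it.
    insp.open(9229, "0.0.0.0");
  }
  return true;
}

// Call once at startup; does nothing unless APP_INSPECT=1 is set.
maybeOpenInspector();
```

With this in place the variable could be passed at run time, for example with docker run -e APP_INSPECT=1 -p 9229:9229 ..., while leaving the Dockerfile untouched.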

Conclusion

With some preparations, you can have the best of both worlds: the isolation and reproducibility of a Dockerized application, and the ease of development of one that is running natively.