Practical measurement of the Android Backup Manager

This post looks at some important practical aspects of the Android Backup Manager.

Android Backup Manager

The Android Backup Manager offers an easy way to add backup capabilities to an Android app. It has extensive documentation on how to do a backup and a restore, and it offers helpers for backing up SharedPreferences and files. Because it is integrated into the platform, a developer does not need to know the underlying architecture. The app also does not need any special permissions to be backed up; only a quick (and automatically approved) registration is needed.

The downside is that the process is a black box: there is no insight into how it works, and there are only a few guarantees. The documentation says that when the app requests a backup, it will run at an appropriate time, but there is no indication of when that will be. There is also no information about the maximum amount of data that can be stored. These gaps make the Backup Manager somewhat unreliable: it might work well most of the time, but it's nearly impossible to figure out what's wrong when it breaks.

Frequency of backups

One of the most important unknowns is the frequency of backups. Let's test it! Fortunately, the Backup Agent has access to the app's Context, so it can read and write the SharedPreferences, which makes two-way communication between the backup process and the app possible. Also, if we call dataChanged() from the onBackup() method, it gets registered just as if it had been called from the app.

So we just need to trigger dataChanged() from the app, then also call it from the Agent, and write a log entry with the current time on every call to onBackup().

I ran it for a while, and here are the results:

2014-12-05T14:43:17.344+0100:Backup called

2014-12-05T15:47:38.326+0100:Backup called

2014-12-05T16:47:13.920+0100:Backup called

2014-12-05T17:46:42.494+0100:Backup called

2014-12-05T18:47:57.966+0100:Backup called

2014-12-05T19:47:36.153+0100:Backup called

2014-12-05T20:47:13.981+0100:Backup called

2014-12-05T21:47:45.010+0100:Backup called

Based on these logs, the backup runs roughly every hour. I also saw that it does not matter whether the device is on WiFi or mobile data; the backup runs either way. If updating is turned off for the device, the backups stop too. Also, after I called dataChanged(), the first backup did not run immediately.
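The "roughly every hour" figure can be checked by computing the gaps between the logged timestamps. The following is a minimal sketch in plain Java, not part of the tester app; the class and method names (BackupIntervals, intervals, average) are made up for illustration, and the timestamps are copied from the log above.

```java
import java.time.Duration;
import java.time.OffsetDateTime;
import java.time.format.DateTimeFormatter;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical helper: measures the time between consecutive onBackup() log entries.
class BackupIntervals {
    // Matches the log format used above, e.g. 2014-12-05T14:43:17.344+0100
    static final DateTimeFormatter LOG_FORMAT =
            DateTimeFormatter.ofPattern("yyyy-MM-dd'T'HH:mm:ss.SSSZ");

    // Timestamps copied from the log above.
    static final List<String> LOGGED = Arrays.asList(
            "2014-12-05T14:43:17.344+0100",
            "2014-12-05T15:47:38.326+0100",
            "2014-12-05T16:47:13.920+0100",
            "2014-12-05T17:46:42.494+0100",
            "2014-12-05T18:47:57.966+0100",
            "2014-12-05T19:47:36.153+0100",
            "2014-12-05T20:47:13.981+0100",
            "2014-12-05T21:47:45.010+0100");

    // Returns the gap between each pair of consecutive timestamps.
    static List<Duration> intervals(List<String> timestamps) {
        List<Duration> gaps = new ArrayList<>();
        for (int i = 1; i < timestamps.size(); i++) {
            OffsetDateTime prev = OffsetDateTime.parse(timestamps.get(i - 1), LOG_FORMAT);
            OffsetDateTime curr = OffsetDateTime.parse(timestamps.get(i), LOG_FORMAT);
            gaps.add(Duration.between(prev, curr));
        }
        return gaps;
    }

    // Returns the mean of the given gaps (the list must not be empty).
    static Duration average(List<Duration> gaps) {
        Duration total = Duration.ZERO;
        for (Duration gap : gaps) {
            total = total.plus(gap);
        }
        return total.dividedBy(gaps.size());
    }

    public static void main(String[] args) {
        List<Duration> gaps = intervals(LOGGED);
        for (Duration gap : gaps) {
            System.out.println(gap.toMinutes() + " min " + (gap.getSeconds() % 60) + " s");
        }
        System.out.println("average: " + average(gaps).toMinutes() + " min");
    }
}
```

For this log, every gap falls between about 59 and 65 minutes, which is consistent with an hourly schedule plus some jitter.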

Maximum size of a backup

The other important factor is the number of bytes we can reliably store in a backup. If an app has a moderately sized database, it is important to know whether it can use the built-in option or must use an external solution.

The first thing I noticed is that there is absolutely no error reporting. An app can write any amount of data to the BackupDataOutput; the only symptom of an error is that the next restore does not get the updated data. There is no Exception and no logging of any kind. This is a major drawback: to check whether a backup was successful, you need to request a restore and inspect the data manually.

The next surprising thing is that the previous data is not erased by a backup. If there is a key key1 with some data, and the next backup writes only key2, then both will be available during the next restore. If you need to remove a key, you can send -1 as its size. Also, since the BackupDataOutput does not expose the keys available in the remote backup, you need to know which keys you want to delete.
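The merge-and-delete behaviour described above can be pictured with a toy in-memory model. This is only an illustration of what I observed, not the Android API: the class BackupStoreModel and its methods are hypothetical, and writeEntity(key, size) merely stands in for writing an entity header with that size (on Android this would be BackupDataOutput.writeEntityHeader()).

```java
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

// Hypothetical toy model of the remote backup store; not part of the Android SDK.
class BackupStoreModel {
    // key -> size in bytes of the entity currently held "remotely"
    private final TreeMap<String, Integer> remote = new TreeMap<>();

    // A negative size deletes the key; any other size creates or overwrites it.
    // Keys that are not mentioned in a backup pass are left untouched.
    void writeEntity(String key, int size) {
        if (size < 0) {
            remote.remove(key);
        } else {
            remote.put(key, size);
        }
    }

    // The keys a restore would see after the backups so far.
    Set<String> restoreKeys() {
        return new TreeSet<>(remote.keySet());
    }

    public static void main(String[] args) {
        BackupStoreModel store = new BackupStoreModel();
        store.writeEntity("key1", 100);          // first backup writes key1
        store.writeEntity("key2", 100);          // second backup writes only key2
        System.out.println(store.restoreKeys()); // both keys are still there
        store.writeEntity("key1", -1);           // explicit delete with size -1
        System.out.println(store.restoreKeys()); // only key2 remains
    }
}
```

The practical consequence is that the app has to keep its own record of which keys it has ever written, for example in SharedPreferences, so it can delete the stale ones explicitly.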

Third, the BackupDataInput does not preserve the ordering of the keys. When I added some data in a for loop, I got this back:

2014-12-04T12:05:09.411+0100:Restore data contained [data:0(100000),data:1(100000),data:10(100000),data:11(100000),data:12(100000),data:13(100000),data:14(100000),data:15(100000),data:16(100000),data:17(100000),data:18(100000),data:19(100000),data:2(100000),data:20(100000),data:3(100000),data:4(100000),data:5(100000),data:6(100000),data:7(100000),data:8(100000),data:9(100000)]

You can see the keys are ordered lexicographically, and not in the order I put them in.
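The observed order is exactly what a plain string sort of the keys produces. The sketch below reproduces it in plain Java and shows one possible workaround, zero-padding the index so that string order and numeric order agree. The names (KeyOrdering, keys, sorted) are hypothetical, and the workaround assumes the restore order really is lexicographic, which is something I only observed and is not documented.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Locale;

// Hypothetical helper that mimics the key order seen in the restore log.
class KeyOrdering {
    // Builds keys data:0 .. data:(count-1), optionally zero-padded to three digits.
    static List<String> keys(int count, boolean zeroPad) {
        List<String> keys = new ArrayList<>();
        for (int i = 0; i < count; i++) {
            keys.add(zeroPad ? String.format(Locale.ROOT, "data:%03d", i) : "data:" + i);
        }
        return keys;
    }

    // Returns a lexicographically sorted copy, leaving the input untouched.
    static List<String> sorted(List<String> keys) {
        List<String> copy = new ArrayList<>(keys);
        Collections.sort(copy);
        return copy;
    }

    public static void main(String[] args) {
        // Unpadded: data:10 sorts before data:2, as in the restore log.
        System.out.println(sorted(keys(21, false)));
        // Zero-padded: sorted order equals insertion order.
        System.out.println(sorted(keys(21, true)));
    }
}
```

If the order of the entities matters, a safer choice is not to rely on it at all and to store an explicit index inside the data instead.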

The good thing is that there seems to be no difference between writing many entities and writing a few, as long as the overall size is the same. So in practice a whole database dump can fit into a single entity, and there is no need to fragment it.

Regarding the size, it seems that both the data being written and the data already backed up count toward the limit. I could store 3MB of data, but then another 3MB failed, while 1MB succeeded. After that, another 3MB was fine. In my tests, 2MB could be stored consistently.

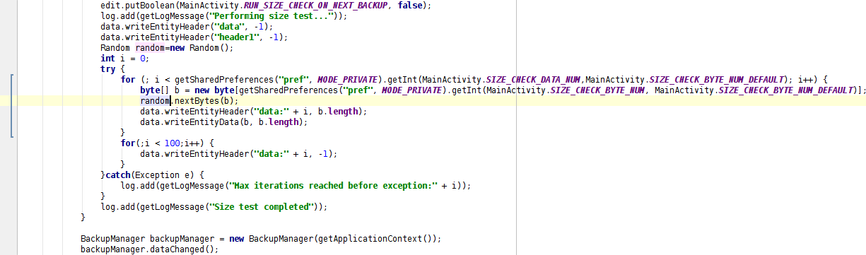

The tester app

You can find the tester app on GitHub with the link below. The usage is simple:

- Clear log button: Self-explanatory

- Call datachanged: Calls dataChanged() the first time to trigger the backups

- Will run size check on next backup: If on, then the next backup will try to store data. The backup process resets it, so it will only run once.

- Data# and Byte#: Controls how many data items will be stored and how big they will be

- Request restore: Calls onRestore() to see what's currently backed up

To force a backup, just call:

adb shell bmgr run

Conclusion

Based on the above observations, the Backup Manager can be a good way to provide an easy-to-implement backup for your apps. If you have only a small amount of data, it runs reliably and results in happy users. If you need to store more data, or to provide historical or named backups, it falls short on features and reliability.