Optimized SPA deployment CloudFormation template

Speed up your web application with optimized deployment using cache headers and a CDN

A typical SPA deployment consists of two types of assets: dynamic and static. The former, usually the index.html, can change its content when a new version is deployed. The latter, files like 7a5467.js, cannot change without being renamed. Modern build tools support this use case with a technique called revving, which renames each file, and every reference to it, to include a hash of its contents.
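Here is a minimal Python sketch of the renaming step. It does not reproduce how any particular build tool implements revving. The SHA-256 hash, the 8-character digest and the helper names `revved_name` and `rewrite_references` are assumptions for illustration.

```python
import hashlib
from pathlib import PurePosixPath


def revved_name(filename: str, contents: bytes, digest_len: int = 8) -> str:
    """Return a content-addressed name, e.g. 'static/main.js' -> 'static/main.<hash>.js'.

    The hash is derived from the file contents, so any change produces a new name.
    """
    path = PurePosixPath(filename)
    digest = hashlib.sha256(contents).hexdigest()[:digest_len]
    return str(path.with_name(f"{path.stem}.{digest}{path.suffix}"))


def rewrite_references(html: str, renames: dict[str, str]) -> str:
    """Point every reference in the HTML at the revved name.

    Deliberately naive plain-text substitution; a real build tool would not
    rely on string replacement.
    """
    for old, new in renames.items():
        html = html.replace(old, new)
    return html
```

Because the name changes whenever the contents change, a file served under a given name never changes. That is what makes it safe to cache forever.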

With this setup, the dynamic files are not cached, while all the other assets can safely be kept forever. Configuring a CDN this way can greatly improve the speed your visitors experience when browsing your site.

Speeding up your site with a CDN

Let's first discuss the advantages of using a CDN for asset distribution. First, it provides proxy caching, which helps when multiple users access the site from locations close to each other. Once a user has requested a cacheable resource, the CDN does not need to go to the origin for the next request and can return the resource from its cache instead.

Second, a CDN provides edge locations that are closer to the users. This means a faster TCP handshake and TLS negotiation, as both happen with less latency. Another benefit is that the CDN can reuse its connection to the origin. Even when it needs to fetch the content from there, the user's handshake only has to reach the edge, not the origin. This reduces the perceived latency significantly.
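A back-of-the-envelope model shows why the edge helps. The sketch below assumes one round trip for the TCP handshake, two for a full TLS 1.2 handshake and one for the request itself. It ignores server processing time and bandwidth. The function name and the RTT figures used with it are hypothetical.

```python
def time_to_first_byte_ms(
    client_rtt_ms: float,
    handshake_round_trips: int = 3,
    upstream_rtt_ms: float = 0.0,
) -> float:
    """Rough estimate of connection setup plus one request, in milliseconds.

    handshake_round_trips: 1 for TCP plus 2 for a full TLS 1.2 handshake
    (a TLS 1.3 handshake would need only 1).
    upstream_rtt_ms: extra round trip from the edge to the origin over an
    already-open connection; 0 when the edge answers from its cache.
    """
    setup = handshake_round_trips * client_rtt_ms
    request = client_rtt_ms + upstream_rtt_ms
    return setup + request
```

Take a hypothetical 100 ms round trip to the origin. A direct request then costs about 400 ms (`time_to_first_byte_ms(100)`). Through an edge 10 ms away that reuses a warm connection to the origin 90 ms further on, a cache miss costs about 130 ms and a cache hit about 40 ms.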

Also, a CDN can provide HTTP/2 support even if the origin cannot. This is the case with S3 buckets, which are accessible only via HTTP/1.1.

And lastly, while not strictly an advantage of the CDN, properly set HTTP cache headers mean the client does not even need to download the static files a second time.

Caching dynamic assets

The index.html is considered a dynamic asset, and as a rule of thumb, it should not be cached at all. But in some circumstances, a short cache time can provide great benefits with only a negligible risk of serving a stale version.

In this case, the index.html changes only after deployments, and it is the same for all users. Caching, therefore, is a tradeoff between freshness after deployment and cache performance.

With a cache time of just 1 second, you can cap the load on your origin no matter how many visitors you have. There is a limited number of edge caches (11, at the time of writing), so your origin receives at most around 11 requests per second for the index.html. The cost is that, for up to one second after each deployment, visitors may still get the previous version.
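The origin-load cap can be checked with a small simulation. The Python sketch below models each edge cache as holding a single object (the index.html) for `ttl` seconds. The `TtlCache` class and the explicit clock values are illustrative assumptions, not a model of how CloudFront is implemented.

```python
from typing import Callable


class TtlCache:
    """One edge cache holding a single object for `ttl` seconds."""

    def __init__(self, ttl: float, fetch_from_origin: Callable[[], bytes]):
        self.ttl = ttl
        self.fetch_from_origin = fetch_from_origin
        self.expires_at = float("-inf")  # empty cache: the first request always misses
        self.body = b""

    def get(self, now: float) -> bytes:
        # Go to the origin only when the cached copy has expired.
        if now >= self.expires_at:
            self.body = self.fetch_from_origin()
            self.expires_at = now + self.ttl
        return self.body


def max_origin_requests_per_second(edge_caches: int, ttl: float) -> float:
    """Each cache refreshes the object at most once per TTL."""
    return edge_caches / ttl
```

Sending 10,000 requests within one second across 11 such caches produces only 11 origin fetches, which matches the 11 requests/second bound. The TTL is also the longest time a cache can keep serving the previous index.html after a deployment.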

Results

The naive approach of hosting the files directly from S3 yields these results measured by Chrome Audits:

With an optimized setup utilizing a CDN, the same test yields much better results:

This improvement was measured only on a simulated first load. It does not account for client-side caching for returning visitors or the other advantages of using a CDN.

Setting up and tearing down

Deploy the CloudFormation template to the us-east-1 region. It will take ~20 minutes to finish, as creating a CloudFront distribution is an unusually slow process.
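If you prefer to script the deployment, here is a minimal Python sketch using a boto3-style CloudFormation client. The client is passed in so the function can be exercised without AWS. The `CAPABILITY_IAM` value assumes the template creates an IAM role without a custom name. The stack name, template file name and output keys are hypothetical.

```python
def deploy_stack(cf, stack_name: str, template_body: str) -> dict[str, str]:
    """Create the stack, wait until it is complete and return its outputs.

    `cf` is a CloudFormation client, e.g. boto3.client("cloudformation", region_name="us-east-1").
    """
    cf.create_stack(
        StackName=stack_name,
        TemplateBody=template_body,
        # Assumption: the template creates an (unnamed) IAM role for the Lambda@Edge function.
        Capabilities=["CAPABILITY_IAM"],
    )
    # Blocks until the stack reaches CREATE_COMPLETE; the distribution makes this slow.
    cf.get_waiter("stack_create_complete").wait(StackName=stack_name)
    stack = cf.describe_stacks(StackName=stack_name)["Stacks"][0]
    # Flatten the outputs (e.g. the URL and the bucket name) into a dict.
    return {o["OutputKey"]: o["OutputValue"] for o in stack.get("Outputs", [])}
```

With a real client, call it as `deploy_stack(boto3.client("cloudformation", region_name="us-east-1"), "spa-cdn", Path("template.yml").read_text())`. The default `stack_create_complete` waiter polls every 30 seconds for up to 120 attempts, which leaves enough room for the ~20-minute creation.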

After the stack is deployed, it outputs the URL and an S3 bucket. Whatever you put into the bucket will be served through the CDN, and all files except for the index.html will be cached on both the proxy and the client.

To deploy a site to an S3 bucket, use this script:

aws s3 sync build s3://<bucket> --delete --exclude '*.map'

Deleting the stack

When you want to delete the stack, you need to keep two things in mind:

First, the bucket has to be empty. Unfortunately, CloudFormation has no built-in way to empty a bucket before deleting it, so you need to do it manually:

aws s3 rm s3://<bucket> --recursive

Second, you need to delete the stack in two stages. The stack uses Lambda@Edge to add the cache headers, and the deletion of the function's replicas takes a few hours (!) to propagate. Issue a delete to the stack, wait, and then delete it again.
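The two-stage deletion can also be scripted. The sketch below assumes a boto3-style CloudFormation client, an already emptied bucket, and a three-hour pause between the attempts. Both the pause length and the `delete_in_two_stages` helper are assumptions.

```python
import time
from typing import Callable


def delete_in_two_stages(
    cf,
    stack_name: str,
    pause_seconds: float = 3 * 60 * 60,  # assumption: "a few hours"
    sleep: Callable[[float], None] = time.sleep,
    retry_on: type[BaseException] = Exception,
) -> int:
    """Delete the stack, retrying once after a long pause. Returns the stage that succeeded."""
    waiter = cf.get_waiter("stack_delete_complete")

    # Stage 1: usually fails on the Lambda@Edge function while its replicas still exist.
    cf.delete_stack(StackName=stack_name)
    try:
        waiter.wait(StackName=stack_name)
        return 1
    except retry_on:
        sleep(pause_seconds)

    # Stage 2: by now the replicas should be gone; any error here is re-raised.
    cf.delete_stack(StackName=stack_name)
    waiter.wait(StackName=stack_name)
    return 2
```

With boto3, pass `retry_on=botocore.exceptions.WaiterError`. The `stack_delete_complete` waiter raises this error when the stack ends up in `DELETE_FAILED`.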

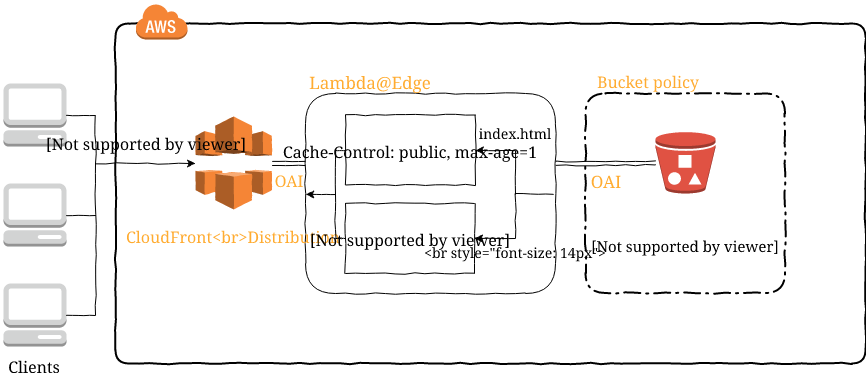

Solution overview

The solution is built on a CloudFront distribution and a Lambda@Edge function that adds the cache headers. This way, there is no need to add any metadata to the uploaded files; everything is handled based on the filenames alone.

Bucket

The stack creates a bucket that holds the files. An Origin Access Identity (OAI) is configured, and the bucket allows access only to the OAI, so users cannot access the contents directly. Even though the information in the bucket is public, it is a best practice to minimize the attack surface of your deployments.
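A bucket policy along these lines grants read access to the OAI only. The Python sketch below builds the policy document. The bucket name and OAI ID are placeholders, and the template may express the principal differently, for example through the OAI's canonical user ID.

```python
def oai_read_only_policy(bucket_name: str, oai_id: str) -> dict:
    """Build an S3 bucket policy that allows only the given OAI to read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # IAM-style principal ARN that represents a CloudFront OAI.
                "Principal": {
                    "AWS": f"arn:aws:iam::cloudfront:user/CloudFront Origin Access Identity {oai_id}"
                },
                # Read-only access to objects: no listing, no writes.
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }
```

Since no statement grants public access, requests that do not come through the distribution are denied.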

CloudFront Distribution

The stack sets up a distribution configured with the bucket and the OAI. It uses HTTP/2, compression, a redirect to HTTPS, and optimal cache header forwarding.

One interesting problem I faced is that the global S3 endpoint for a bucket, <bucket>.s3.amazonaws.com, takes a few hours (!) to come online, and until then it returns a redirect. A better approach is to use the regional endpoint, <bucket>.s3.<region>.amazonaws.com, which comes online immediately. Because of this, the template uses the regional endpoint.
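The two endpoints differ only in the hostname. The hypothetical helper below builds either form for use as the distribution's origin domain name.

```python
def s3_origin_domain(bucket_name: str, region: str, regional: bool = True) -> str:
    """Domain name to use as the CloudFront origin for an S3 bucket."""
    if regional:
        # Regional endpoint: usable right after the bucket is created.
        return f"{bucket_name}.s3.{region}.amazonaws.com"
    # Global endpoint: may answer with a redirect for a while after creation.
    return f"{bucket_name}.s3.amazonaws.com"
```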

Lambda@Edge

The function is invoked at the edge for the origin-response event. This ensures that its result is cached together with the response, so the function does not run for every single request. This practice lowers the cost to virtually nothing.
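The template's own function is not reproduced here. An origin-response handler of this kind can be sketched in Python, one of the runtimes Lambda@Edge supports. The header values are assumptions:

- Revved assets get a one-year `immutable` lifetime.
- The index.html is kept by the CDN for one second (`s-maxage=1`) but not by browsers (`max-age=0`).
- Error responses are marked `no-cache` so that a missing file is not cached for a year.

```python
# Assumed Cache-Control values; adjust them to your own deployment.
IMMUTABLE = "public, max-age=31536000, immutable"  # revved assets: one year
SHORT_LIVED = "public, max-age=0, s-maxage=1"  # index.html: 1 s on the CDN only
NO_CACHE = "no-cache"  # error responses


def cache_control_for(uri: str, status: str) -> str:
    """Pick a Cache-Control value from the request URI and response status."""
    if uri == "/" or uri.endswith("/index.html"):
        return SHORT_LIVED
    if status != "200":
        # Keep errors, such as a missing file, from being cached for a year.
        return NO_CACHE
    return IMMUTABLE


def handler(event, context):
    """Lambda@Edge origin-response handler that sets the Cache-Control header."""
    cf = event["Records"][0]["cf"]
    request, response = cf["request"], cf["response"]
    # Header maps use lowercase keys, each holding a list of key/value pairs.
    response["headers"]["cache-control"] = [
        {"key": "Cache-Control", "value": cache_control_for(request["uri"], response["status"])}
    ]
    return response
```

Because the handler runs on origin-response, CloudFront stores the modified headers with the cached object, so the function runs only on cache misses.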

As I've learned, Lambda@Edge requires the function to be in the us-east-1 region and won't work in any other one. Because of this, you need to deploy the stack in that region.

Also, the distribution requires a specific, numbered version of the function, which the template takes care of.
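Both constraints can be expressed as a quick sanity check on the function ARN that the distribution references. In CloudFormation, such a numbered version typically comes from an `AWS::Lambda::Version` resource. The helper below is hypothetical and not part of the template. It assumes the standard `aws` partition and rejects `$LATEST`, aliases, and functions outside us-east-1.

```python
def check_edge_function_arn(arn: str) -> None:
    """Raise ValueError unless `arn` is a numbered Lambda version in us-east-1."""
    # Expected shape: arn:aws:lambda:<region>:<account>:function:<name>:<version>
    parts = arn.split(":")
    if len(parts) != 8 or parts[2] != "lambda" or parts[5] != "function":
        raise ValueError(f"not a version-qualified Lambda function ARN: {arn}")
    region, version = parts[3], parts[7]
    if region != "us-east-1":
        raise ValueError(f"Lambda@Edge functions must be in us-east-1, got {region}")
    if not version.isdigit():
        raise ValueError(f"use a numbered version, not {version!r}")
```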

Conclusion

While SPA speed is usually more influenced by client-side processing and API speed, asset delivery is also a factor. Fortunately, with just a little preparation, you can speed it up significantly. With a template like the one detailed in this post at hand, you can easily set up your delivery CDN and pare down the delay your customers experience.