How to use the AWS SQS CLI to receive messages

Print messages to the console. How hard can it be?

Background

I started using SQS to see events from CloudWatch as they come. My requirements were straightforward: whenever there is a message, print it to the console. Even an event-driven architecture was not required as I'm perfectly fine with polling during development.

Well, I was expecting a simple command to set up the queue then another one to fetch the messages. But, as usual, it's a bit more complicated than that. Even for this simple task of printing messages to the console as they come, I needed to do some bash scripting.

This is an overview of the challenges and lessons I've learned during the process. If you're just starting with SQS it should help you hit the ground running.

Basic operations

The first task is to set up a queue and start sending messages.

Standard queues

SQS queue operations use the queue URL to identify the queue. This stands in contrast to other AWS services as they usually use the ARN (Amazon Resource Name).
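If a queue already exists, both identifiers can be looked up from the CLI. The sketch below assumes the queue has already been created; `queue_url` and `queue_arn` are hypothetical helper names, and it uses the CLI's `--query` and `--output text` options rather than jq.

```shell
# Hypothetical helpers; the names are illustrative, not part of the AWS CLI.

# Print the URL of an existing queue, given its name.
queue_url() {
  aws sqs get-queue-url --queue-name "$1" --query 'QueueUrl' --output text
}

# Print the ARN of a queue, given its URL.
queue_arn() {
  aws sqs get-queue-attributes --queue-url "$1" \
    --attribute-names QueueArn --query 'Attributes.QueueArn' --output text
}

# Usage:
#   QUEUE_URL=$(queue_url test_queue)
#   queue_arn "$QUEUE_URL"
```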

To create a new queue and store its URL, use:

QUEUE_URL=$(aws sqs create-queue --queue-name test_queue | jq -r '.QueueUrl')

To delete it:

aws sqs delete-queue --queue-url $QUEUE_URL

To send a message:

aws sqs send-message --queue-url $QUEUE_URL --message-body "hi"

aws sqs send-message --queue-url $QUEUE_URL --message-body '{"key": ["value1", "value2"]}'

To get a message currently in the queue:

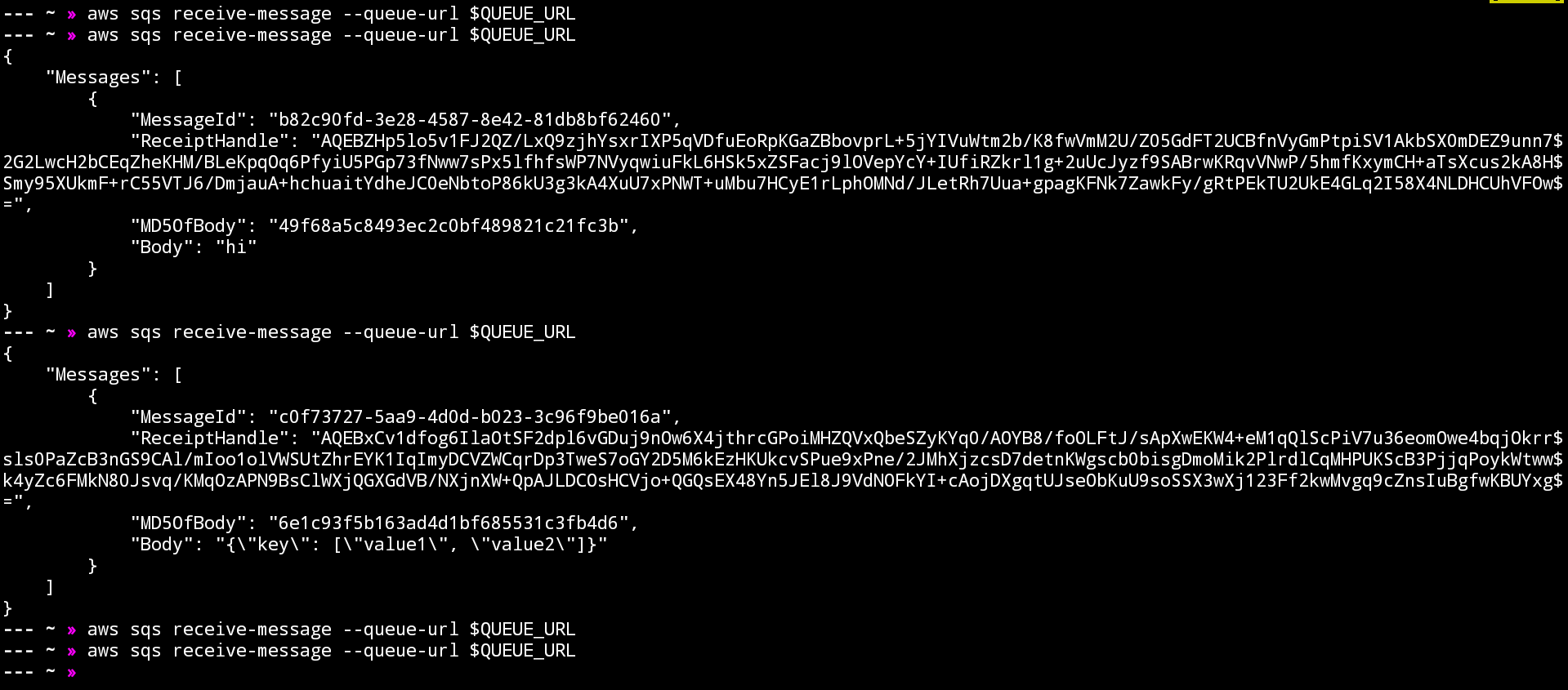

aws sqs receive-message --queue-url $QUEUE_URL

If you run this command a few times, you'll eventually get both messages.

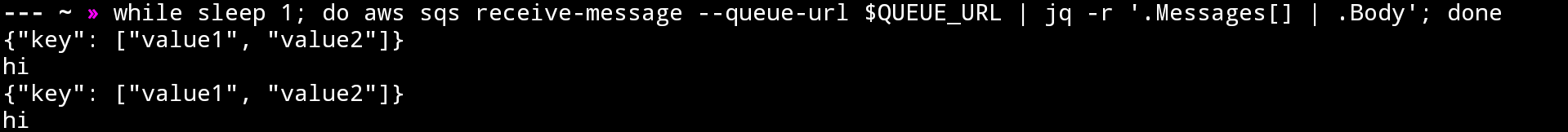

Let's make a polling loop to get the messages every second!

while sleep 1; do aws sqs receive-message --queue-url $QUEUE_URL; done

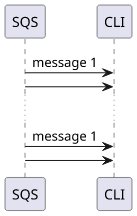

The messages repeat ad infinitum.

It turns out that receiving a message does not delete it, and worse, there is no CLI switch to do it automatically. This means you need a separate call to delete-message with the ReceiptHandle parameter.
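The repeats come from the queue's visibility timeout: a received message is hidden from further receive calls for that period (30 seconds by default) and becomes visible again if it has not been deleted in the meantime. A minimal sketch for inspecting that setting, where `visibility_timeout` is a hypothetical helper name:

```shell
# Hypothetical helper: print a queue's visibility timeout in seconds.
# Usage: visibility_timeout "$QUEUE_URL"
visibility_timeout() {
  aws sqs get-queue-attributes --queue-url "$1" \
    --attribute-names VisibilityTimeout \
    --query 'Attributes.VisibilityTimeout' --output text
}
```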

FIFO queues

If you use FIFO queues you'll notice a somewhat different behavior but that is also the result of the same mechanism. But first, let's see how to set up a FIFO queue!

To create a FIFO queue you need to change three things compared to a standard queue. First, you need to pass the "FifoQueue": "true" attribute. Second, the name must end with .fifo. The third is optional: if you specify the "ContentBasedDeduplication": "true" attribute, you don't need to specify a Message Deduplication ID when you send messages.

QUEUE_URL=$(aws sqs create-queue \
  --queue-name test_queue.fifo \
  --attributes '{"FifoQueue": "true", "ContentBasedDeduplication": "true"}' \
  | jq -r '.QueueUrl')

To send messages to a FIFO queue, you need to specify the message-group-id parameter. FIFO ordering is guaranteed only within a group, so you can think of it as multiple FIFOs inside a single queue. If you don't want to multiplex multiple streams inside a single queue, you can use a constant value for the message group. That way, all the messages will be ordered.

aws sqs send-message --queue-url $QUEUE_URL --message-group-id "1" --message-body "hi"

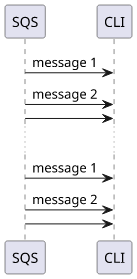

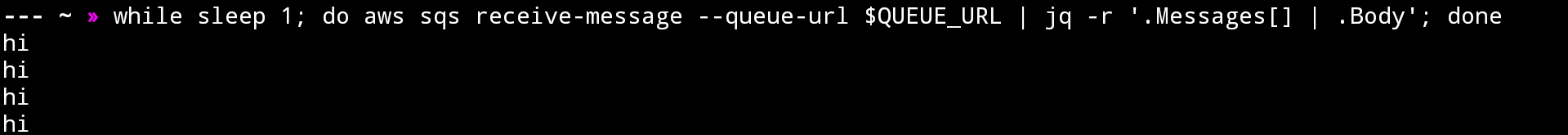

aws sqs send-message --queue-url $QUEUE_URL --message-group-id "1" --message-body '{"key": ["value1", "value2"]}'

Now if you poll the queue, you'll see that only the first message comes back again and again:

This is because (surprise) FIFO ordering! As long as you don't delete the earlier message, subsequent ones won't be delivered.
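For a FIFO queue created without ContentBasedDeduplication, each send needs an explicit deduplication ID. Here is a sketch of such a call, assuming the caller supplies the ID; `send_fifo` is a hypothetical helper name, not an AWS CLI command.

```shell
# Hypothetical helper: send one message to a FIFO queue with an explicit
# deduplication ID.
# Usage: send_fifo QUEUE_URL GROUP_ID DEDUP_ID BODY
send_fifo() {
  aws sqs send-message --queue-url "$1" \
    --message-group-id "$2" \
    --message-deduplication-id "$3" \
    --message-body "$4"
}

# Example:
#   send_fifo "$QUEUE_URL" "1" "event-0001" "hi"
```

Messages sent with the same deduplication ID within the five-minute deduplication interval are treated as duplicates, so the ID should change whenever the message is meant to be delivered again.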

Getting messages only once

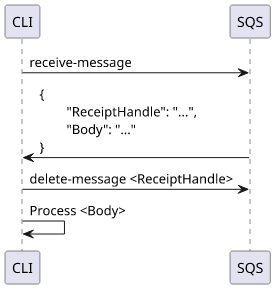

To delete the messages after receiving them, call delete-message with the ReceiptHandle parameter:

aws sqs delete-message --queue-url $QUEUE_URL --receipt-handle <...>

But here comes the problem. The receive-message call returns both the ReceiptHandle and the Body, and you need the former for the delete-message call and the latter as the output.

Fortunately, by using a shell variable and a subshell to scope it, it is possible to do both:

(MSG=$(aws sqs receive-message --queue-url $QUEUE_URL); \
  [ ! -z "$MSG" ] && echo "$MSG" | jq -r '.Messages[] | .ReceiptHandle' | \
  (xargs -I {} aws sqs delete-message --queue-url $QUEUE_URL --receipt-handle {}) && \
  echo "$MSG" | jq -r '.Messages[] | .Body')

This command outputs the body of the message while taking care of deleting it from the queue. You can use this script instead of a simple receive-message.

How does it work

First, it gets a message and stores it in the MSG variable:

MSG=$(aws sqs receive-message --queue-url $QUEUE_URL)

Then it checks that the variable is not empty, as the SQS CLI returns no output when there are no messages and a JSON object with a Messages array otherwise. Then it extracts the ReceiptHandle:

[ ! -z "$MSG" ] && echo "$MSG" | jq -r '.Messages[] | .ReceiptHandle'

Then it uses the ReceiptHandles to delete the messages:

(xargs -I {} aws sqs delete-message --queue-url $QUEUE_URL --receipt-handle {})

Finally, it extracts the Body from the message and outputs it:

echo "$MSG" | jq -r '.Messages[] | .Body')

To watch for messages, wrap it in a while loop:

while sleep 1; do \
  (MSG=$(aws sqs receive-message --queue-url $QUEUE_URL); \
  [ ! -z "$MSG" ] && echo "$MSG" | jq -r '.Messages[] | .ReceiptHandle' | \
  (xargs -I {} aws sqs delete-message --queue-url $QUEUE_URL --receipt-handle {}) && \
  echo "$MSG" | jq -r '.Messages[] | .Body') \
; done

Using this script, you can see the messages in near real time.
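A variation on the same idea, offered as a sketch rather than a replacement: receive-message also accepts --wait-time-seconds (long polling, up to 20 seconds) and --max-number-of-messages (up to 10), so the request itself can wait for messages instead of a sleep. The function name `drain_once` is a hypothetical helper, and jq is assumed to be installed as in the rest of the article.

```shell
# Hypothetical helper: receive up to 10 messages with long polling,
# print each Body, then delete each message by its receipt handle.
# Usage: drain_once "$QUEUE_URL"
drain_once() {
  local queue_url=$1 msg
  # The request stays open until a message arrives or 20 seconds pass.
  msg=$(aws sqs receive-message --queue-url "$queue_url" \
    --wait-time-seconds 20 --max-number-of-messages 10) || return 1
  # Nothing to do when the response is empty.
  [ -n "$msg" ] || return 0
  # Print the bodies first.
  printf '%s\n' "$msg" | jq -r '.Messages[]? | .Body'
  # Then delete every received message.
  printf '%s\n' "$msg" | jq -r '.Messages[]? | .ReceiptHandle' |
    while IFS= read -r handle; do
      aws sqs delete-message --queue-url "$queue_url" \
        --receipt-handle "$handle" </dev/null
    done
}

# Watch the queue until interrupted:
#   while drain_once "$QUEUE_URL"; do :; done
```

With long polling the loop makes far fewer empty requests than polling once per second, at the cost of each call blocking for up to 20 seconds when the queue is idle.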

Working with JSON body

When working with other AWS services in particular, it's likely that you'll work predominantly with JSON messages. The JSON ends up in the Body as an escaped string:

{
  "Messages": [
    {
      "MessageId": "...",
      "ReceiptHandle": "...",
      "MD5OfBody": "...",
      "Body": "{\"key\": [\"value1\", \"value2\"]}"
    }
  ]
}

If you use jq, you can use the fromjson function to parse the string as JSON:

| jq -r '.Messages[] | .Body | fromjson | .key'

Or you can pipe it to another jq:

| jq -r '.Messages[] | .Body' | jq '.key'

Conclusion

SQS is an easy tool to see how events are unfolding during development without relying on an email subscription to an SNS topic or a Lambda function and its logs. Once you know the basics, a queue is easy to set up and with some scripting, it can be made terminal-friendly.