How to use S3 PUT signed URLs

Using upload URLs is a convenient way to reduce the bandwidth load on your backend. Learn how to use PUT URLs.

PUT signed URLs

In traditional applications, when a client needs to upload a file, the upload has to go through your backend. After a migration to the cloud, that usually means your backend then uploads the same data to S3, doubling the bandwidth requirements. Signed upload URLs solve this problem. The clients upload data directly to S3 in a controlled and secure way, relieving your backend.

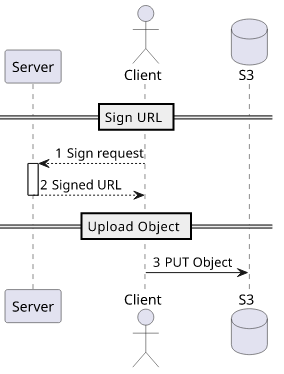

All upload URLs work in 2 steps:

- The backend signs a URL

- The client uses the URL to upload directly to S3

This way the backend controls who can upload what, but it does not need to handle the data itself.

With the JS SDK and an Express server, signing a PUT URL looks like this: validate the request first, then call the getSignedUrl function:

    app.get("/sign_put", (req, res) => {
      const contentType = req.query.contentType;

      // Validate the content type (also rejects a missing or non-string value)
      if (typeof contentType !== "string" || !contentType.startsWith("image/")) {
        throw new Error("must be image/");
      }

      const userid = getUserid(); // some kind of auth
      // check if the user is authorized to upload!

      const url = s3.getSignedUrl("putObject", {
        Bucket: process.env.BUCKETNAME, // make it configurable
        Key: `${userid}-${getRandomFilename()}`, // random with user id
        ContentType: contentType,
        // can not set restrictions to the length of the content
      });

      res.json({url});
    });

As with other forms of signed URLs, signing means the signer does the operation: S3 evaluates the upload against the permissions of the credentials that signed the URL. This means that the backend itself needs permission to perform the upload.

Also, this operation is entirely local. There is no call to AWS to generate the signed URL and there are no checks whether the keys used for signing grant access to the operation.
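To illustrate why no network call is needed, here is a simplified sketch of the kind of computation behind a presigned PUT URL (Signature Version 4 query signing), using only Node's crypto module. It is not the SDK's implementation: the function name presignPut, its parameters, and the credentials are made up for illustration, and real code should call the SDK.

```javascript
const crypto = require("crypto");

const hmac = (key, data) => crypto.createHmac("sha256", key).update(data, "utf8").digest();
const sha256Hex = (data) => crypto.createHash("sha256").update(data, "utf8").digest("hex");

// RFC 3986 percent-encoding (encodeURIComponent leaves !'()* unescaped)
const encode = (s) =>
  encodeURIComponent(s).replace(/[!'()*]/g, (c) => "%" + c.charCodeAt(0).toString(16).toUpperCase());

// Sketch of SigV4 query-string signing for a PUT to a virtual-hosted bucket URL.
// Everything is computed locally from the inputs; nothing talks to AWS.
function presignPut({accessKeyId, secretAccessKey, region, bucket, key, contentType, expiresSeconds, now}) {
  const amzDate = now.toISOString().replace(/[-:]|\.\d{3}/g, ""); // e.g. 20240101T000000Z
  const dateStamp = amzDate.slice(0, 8);
  const scope = `${dateStamp}/${region}/s3/aws4_request`;
  const host = `${bucket}.s3.${region}.amazonaws.com`;
  const path = "/" + key.split("/").map(encode).join("/");

  // Query parameters, listed in the sorted order the canonical request requires
  const query = [
    ["X-Amz-Algorithm", "AWS4-HMAC-SHA256"],
    ["X-Amz-Credential", `${accessKeyId}/${scope}`],
    ["X-Amz-Date", amzDate],
    ["X-Amz-Expires", String(expiresSeconds)],
    ["X-Amz-SignedHeaders", "content-type;host"],
  ].map(([k, v]) => `${encode(k)}=${encode(v)}`).join("&");

  const canonicalRequest = [
    "PUT",
    path,
    query,
    `content-type:${contentType}\nhost:${host}\n`,
    "content-type;host",
    "UNSIGNED-PAYLOAD",
  ].join("\n");

  const stringToSign = ["AWS4-HMAC-SHA256", amzDate, scope, sha256Hex(canonicalRequest)].join("\n");

  // Derive the signing key from the secret step by step, then sign
  const kDate = hmac("AWS4" + secretAccessKey, dateStamp);
  const kRegion = hmac(kDate, region);
  const kService = hmac(kRegion, "s3");
  const kSigning = hmac(kService, "aws4_request");
  const signature = hmac(kSigning, stringToSign).toString("hex");

  return `https://${host}${path}?${query}&X-Amz-Signature=${signature}`;
}

// Usage with made-up credentials: a URL is produced even though the keys are fake
console.log(presignPut({
  accessKeyId: "AKIDEXAMPLE",
  secretAccessKey: "not-a-real-secret",
  region: "us-east-1",
  bucket: "my-upload-bucket",
  key: "user42-abcdef",
  contentType: "image/png",
  expiresSeconds: 900,
  now: new Date(),
}));
```

Since the signature is just a chain of HMACs over the request description, a URL signed with invalid or under-privileged keys is generated without any error. The problem only surfaces when a client tries to use it.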

Parameters

Bucket

The bucket to upload to. Don't forget to add a policy that grants upload access to the bucket.
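As a rough sketch, a policy for the identity that signs the URLs could look like the document built below. The bucket name is a placeholder, and whether you attach this to an IAM user or a role depends on how your backend gets its credentials.

```javascript
// Hypothetical bucket name; replace with your own
const bucketName = "my-upload-bucket";

// IAM policy document allowing uploads (PutObject) to any key in the bucket
const uploadPolicy = {
  Version: "2012-10-17",
  Statement: [
    {
      Effect: "Allow",
      Action: "s3:PutObject",
      Resource: `arn:aws:s3:::${bucketName}/*`,
    },
  ],
};

// Print the JSON so it can be pasted into a policy
console.log(JSON.stringify(uploadPolicy, null, 2));
```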

    const url = s3.getSignedUrl("putObject", {
      Bucket: ...,
    });

CORS

As the client sends requests directly to the bucket endpoint, the bucket needs CORS (Cross-Origin Resource Sharing) set up:

    aws s3api put-bucket-cors \
      --bucket "$BUCKETNAME" \
      --cors-configuration '{"CORSRules": [{"AllowedOrigins": ["*"], "AllowedMethods": ["PUT"], "AllowedHeaders": ["*"]}]}'

Without it, a browser client will not be able to issue a PUT to the bucket.

This only applies to browsers. Other clients, such as curl, don't enforce the same-origin policy.
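If uploads only ever come from your own frontend, a narrower rule than "*" may be preferable. The sketch below builds such a configuration for a made-up origin (https://app.example.com is an assumption, not something from your setup) and prints the JSON that can be passed as the --cors-configuration argument.

```javascript
// Hypothetical frontend origin; use the origin your app is actually served from
const allowedOrigin = "https://app.example.com";

const corsConfiguration = {
  CORSRules: [
    {
      AllowedOrigins: [allowedOrigin], // a single origin instead of "*"
      AllowedMethods: ["PUT"],
      AllowedHeaders: ["*"],
    },
  ],
};

// Prints the JSON to pass as the --cors-configuration argument
console.log(JSON.stringify(corsConfiguration));
```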

Key

This is the name (key) under which the uploaded object will be stored. Do not let the client influence it. Generate a sufficiently random string and use that for the key.

    const getRandomFilename = () => require("crypto").randomBytes(16).toString("hex");

    const url = s3.getSignedUrl("putObject", {
      // ...
      Key: getRandomFilename(),
    });

ContentType

This sets the Content-Type of the uploaded object.

    const url = s3.getSignedUrl("putObject", {
      // ...
      ContentType: contentType,
    });

There are 2 scenarios here. The first is when you fix the content type, for example to image/jpeg:

    const url = s3.getSignedUrl("putObject", {
      // ...
      ContentType: "image/jpeg",
    });

But what if the client wants to upload a png? In that case, you need to get the intended content type from the client during signing and validate it:

    const contentType = req.query.contentType;

    // Also rejects a missing or non-string value
    if (typeof contentType !== "string" || !contentType.startsWith("image/")) {
      throw new Error("invalid content type");
    }

Do not allow the client to fully specify the content type. Either make it constant or validate it before signing the request.
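A prefix check accepts every image subtype, including image/svg+xml, which can carry embedded scripts. A stricter variant is an explicit allowlist. The list and the helper name below are assumptions for illustration; adjust them to what your application actually needs.

```javascript
// Content types this endpoint accepts (an assumed list, adjust to your needs)
const ALLOWED_CONTENT_TYPES = new Set(["image/jpeg", "image/png", "image/webp"]);

// Returns true only for a string that exactly matches an allowed type
const isAllowedContentType = (contentType) =>
  typeof contentType === "string" && ALLOWED_CONTENT_TYPES.has(contentType);

console.log(isAllowedContentType("image/png"));     // true
console.log(isAllowedContentType("image/svg+xml")); // false
console.log(isAllowedContentType(undefined));       // false
```

The check would run in the signing handler before getSignedUrl is called, in place of the startsWith test.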

Content length

Unfortunately, there is no way to put limits on the file size with PUT URLs. There is a Content-Length setting, but the JS SDK does not support it.

This opens up an attack where a user requests a signed URL and then uploads huge files. Since you'll pay for both the network traffic and the storage, this can drain your budget.

The only thing you can do is track who uploaded what (for example, by including the user id in the key) so that you can at least identify the abuser and stop issuing URLs to them.
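One way to make keys traceable is sketched below. The key layout (user id, a slash, then random hex) and the helper names buildKey and userIdFromKey are assumptions, not a required convention. The user id is restricted to characters that cannot collide with the separator.

```javascript
const crypto = require("crypto");

// Builds a key of the form "<userid>/<random hex>"; the layout is an assumption
const buildKey = (userid) => {
  if (!/^[A-Za-z0-9_-]+$/.test(userid)) {
    throw new Error("unexpected characters in user id");
  }
  return `${userid}/${crypto.randomBytes(16).toString("hex")}`;
};

// Recovers the user id from a key produced by buildKey
const userIdFromKey = (key) => key.slice(0, key.indexOf("/"));

const key = buildKey("user42");
console.log(key);                // user42/<32 hex characters>
console.log(userIdFromKey(key)); // user42
```

With such a layout, an unexpectedly large object found in the bucket can be attributed to a user by looking at its key alone.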

Metadata

You can add arbitrary key-value pairs to the object which will be stored in the object metadata. This is a great place to keep track of who uploaded the object for later investigation.

    const url = s3.getSignedUrl("putObject", {
      // ...
      Metadata: {
        "userid": userid, // tag with userid
      },
    });

Authorization

The server must check if a user is allowed to upload files. Anyone in possession of a signed URL can upload with it, and AWS performs no further checks on who is making the request.

Do not let anonymous users get signed URLs.
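In an Express application this check can live in a small middleware placed in front of the signing route. The sketch below assumes that an earlier authentication middleware sets req.user; that property and the name requireUser are illustrative, not part of Express itself.

```javascript
// Express-style middleware: only authenticated requests reach the signing handler.
// req.user is assumed to be set by an earlier authentication middleware.
const requireUser = (req, res, next) => {
  if (!req.user) {
    res.status(401).json({error: "authentication required"});
    return;
  }
  next();
};

// Usage with the signing route (not executed here):
// app.get("/sign_put", requireUser, (req, res) => { /* sign the URL */ });
```

Any finer-grained rule, such as upload quotas per user, would go into the same place, before the URL is signed.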

Frontend-side

On the frontend, just fetch the signed URL and issue a PUT request with the file you want to upload:

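    // Encode the type: values such as image/svg+xml contain characters that are special in a query string
    const {url} = await (await fetch(`/sign_put?contentType=${encodeURIComponent(file.type)}`)).json();

    await fetch(url, {
      method: "PUT",
      body: file,
    });

Security considerations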

As with all features, the fewer things clients can set the better. The Key should be set by the server with sufficient randomness. That prevents enumeration attacks in case you don't protect downloading files by other means.

The content type should be locked down as much as possible. For an endpoint where users can upload avatars, image/* is plenty.

Also, make sure you can track each object back to who uploaded it. In the case of PUT URLs, that usually means including the user id in the object key.

Conclusion

Signed upload URLs are a cloud-native way of handling file uploads that can scale indefinitely. But always validate the parameters before signing, as skipping that check can easily lead to security problems.