How to maintain database consistency in DynamoDB

How to use conditions in write transactions to guarantee a consistent state

Transactions in DynamoDB

DynamoDB supports transactions with full ACID (Atomicity, Consistency, Isolation, Durability) properties. ACID is the gold standard that all database engines aim for. But if you look behind the headlines, transactions in DynamoDB are nothing like transactions in SQL databases. DynamoDB is still a key-value database with all the limitations it comes with.

Transactions in DynamoDB are either read-only or write-only. That means you can't atomically read some data, make some decision based on that, and write the changes. The read in the start and the write in the end are two separate atomic operations and the latter can not be sure that the database state hasn't changed in the middle.

Conditions

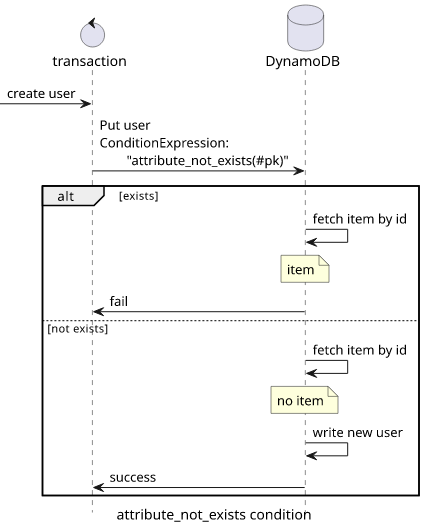

To solve this, DynamoDB supports conditions for the write operations. These are expectations on the database state that must be true for the write operation to be effective. If a precondition fails, the whole transaction is cancelled. Write-only transactions with carefully considered conditions are powerful constructs that you can use to ensure database consistency.

Let's see an example how conditions work!

This transaction item adds an item to a table but only if there is no item with that key:

Put: {

TableName: GROUP_TABLE,

ConditionExpression: "attribute_not_exists(#pk)",

ExpressionAttributeNames: {

"#pk": "ID",

},

Item: {

ID: {S: id},

num_users: {N: "0"},

}

},Internally, DynamoDB retrieves the element first by its key. Then it runs the ConditionExpression. In this table, the ID is the hash key so it

exists for all items. If the attribute does not exist then the item is not in the database.

Read and set

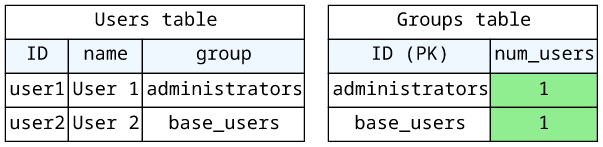

In practice, most write transactions rely on previously read data. For example, if a user can belong to a group, you might need to first find the group and then insert the user. Or you might want to keep track of how many users are in the group. When you delete a user, you need to find its membership first.

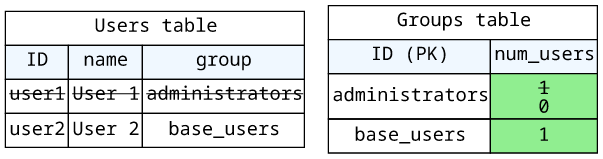

The problem with DynamoDB is that the read operation (finding out what group the user belongs to) and the write transaction (deleting the user and decreasing the counter) are two separate atomic operations. If a different transaction changed the items between the two calls that could easily put the database to an inconsistent state.

Let's see some code!

The num_users in the Groups table keeps track of the number of users in that group. To delete a user using its ID, we first need to know what group the

user belongs to:

const currentUser = unmarshall((await client.send(new GetItemCommand({

TableName: USER_TABLE,

Key: {

ID: {S: id}

}

}))).Item);The write transaction needs to do 2 things:

- delete the user

- decrease the

num_usersfor the group

Without caring about consistency, the transaction looks like this:

// broken, only for demonstration

TransactItems: [

{

Delete: {

TableName: USER_TABLE,

Key: {

ID: {S: id}

},

},

},

{

// decrease num_users by 1

Update: {

TableName: GROUP_TABLE,

UpdateExpression: "ADD #num_users :minusone",

Key: {

ID: {S: currentUser.group},

},

ExpressionAttributeNames: {

"#num_users": "num_users",

},

ExpressionAttributeValues: {

":minusone": {N: "-1"},

}

},

}

]

The above code works fine as long as it's the only thing that changes the database state. It correctly reads the current user object and it atomically deletes

and changes the two items. But it's a broken implementation. What happens if the user is deleted between the read and the write? Or a user is moved to a new

group? In that case, the original group's num_users will be decreased and it won't accurately reflect the number of users.

Database consistency

Consistency means the data adheres to constraints (formal and informal) and if one information is stored in multiple times then they all agree. If

the num_users does not accurately reflect the number of user items then the database is in an inconsistent state.

Databases maintain consistency through transactions. Every transaction should move the database from a consistent state to another consistent state. And since it starts in a consistent state, if all transactions follow this property then it will always stay consistent. But if even one transaction succeeds that moves the database to an inconsistent state, all further guarantees are nullified. Because of this, it is extremely important to make sure that no transaction can break the consistency.

How to make sure every transaction ends up in a consistent state?

Databases have a varying set of constraints that you can use and they will automatically enforce. For example, since DynamoDB is a key-value store, it guarantees that at most one item can have a given key. SQL databases usually support unique constraints, so they can guarantee that a given column's value is unique in the table. If a transaction tries to add a duplicate value, it will fail. This is great, as you can define the constraints when you create the table and the database engine will do the rest. Unfortunately, DynamoDB offers a lot fewer options for this.

Maintaining consistency through conditions

The primary tool to enforce consistency in DynamoDB is with conditions. The idea here is that the write transaction can check that the database is in the state it expects it to be and only then do the changes.

Let's add the conditions to the user delete example above!

First, to delete a user it needs to exist. If a separate process deletes the user then the second delete should fail. Moreover, the group should also exist.

But it's still not enough. If the user is moved to a different group then both the user and the group exist but the database still ends up in an inconsistent state.

So second, we need to check if the user's group is the one that was returned by the read operation.

Here's the transaction, implementing both checks:

TransactItems: [

{

Delete: {

TableName: USER_TABLE,

// user exists and in the expected group

ConditionExpression: "attribute_exists(#pk) AND #group = :group",

ExpressionAttributeNames: {

"#pk": "ID",

"#group": "group",

},

ExpressionAttributeValues: {

":group": {S: currentUser.group},

},

Key: {

ID: {S: id}

},

},

},

{

// decrease num_users by 1

Update: {

TableName: GROUP_TABLE,

UpdateExpression: "ADD #num_users :minusone",

// group exists and has users

ConditionExpression: "attribute_exists(#pk) AND #num_users > :zero",

Key: {

ID: {S: currentUser.group},

},

ExpressionAttributeNames: {

"#pk": "ID",

"#num_users": "num_users",

},

ExpressionAttributeValues: {

":minusone": {N: "-1"},

":zero": {N: "0"}

}

},

}

]The get-and-set-with-check pattern

In practice, most data change follows this pattern:

- The code reads the database state

- A write transaction is sent with the necessary changes

- In it, conditions make sure the database is in an expected state

The tricky part is the third point. It needs a lot of planning to get it right, and it's usually not apparent in a development environment that something is missing.

Examples

Keeping accurate counts

This comes up often in DynamoDB data modelling. Since there is no efficient COUNT(*) operation in DynamoDB, the only thing you can do is to make sure all

that all the individual transactions keep a counter up-to-date.

Foreign keys

A related example is enforcing foreign keys. To prevent deleting a referenced item, you need to keep an accurate counter that keeps track of how many items are referencing it. And for that, you need to make sure all transactions that change an item keeps the counter up-to-date.

Enforcing state in referenced elements

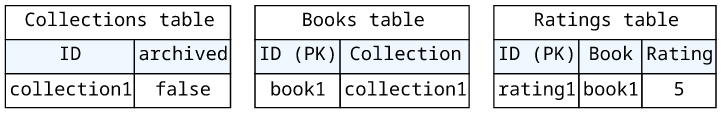

Another common scenario is when the read part needs to fetch multiple elements to check a condition in a distant item. Let's say users can rate books in a collection, but only if the collection is not archived.

To add a rating to a book (with a rating and a book ID), the read part needs to fetch 2 items:

- The book for the rating

- The collection for the book

Then the write part needs to check these things:

- The book exists and is in the expected collection

- The collection exists and is not archived

If all of these hold then the database is not modified in a way that would make this transaction move the database to an inconsistent state.

Notice that it's not enough to just check the end of the chain (the collection). This is because the book might be added to a different collection that might be archived before the write transaction comes in.

Conclusion

The primary tool to guarantee data consistency is the condition expression for transaction elements. Since DynamoDB does not support complex constraints on the tables, it falls to the developer to make sure that every single transaction verifies the database state before making any changes.