How to implement file uploads to an AppSync API

Upload files to a serverless AppSync API using S3 signed URLs

File uploads to a serverless API

Uploading files directly to a serverless API only works for small files. Serverless APIs enforce a "small and quick" model for requests and limit both the size and the duration of each request. Small files can be sent as a size-limited string argument, but that is not a generic solution: it puts an upper limit on file size, which makes it unsuitable for larger files such as videos or even high-resolution images.

The serverless-friendly solution is to use signed URLs. It requires some additional code, but the idea is simple: the client requests an upload URL from the API and uses it to send the file directly to object storage. Once the file is uploaded, the client calls the API again, which can then update a database or do any additional processing.

In this article, we'll see how to implement this with an AppSync-based serverless API. We'll use S3 to store the files and DynamoDB to store references to them.

Generate the signed URL

First, the schema needs a query that returns the information for the upload:

type Query {
  getUploadURL: AWSJSON!
}

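Notice that the return type is AWSJSON rather than a plain URL string. A presigned POST consists of a URL plus several form fields (the object key, the policy, the signature, and so on) that the client must send together with the file.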

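On the client side, request the upload data. Here, query stands for whatever helper the client uses to send a GraphQL request to the AppSync endpoint, and JSON.parse turns the AWSJSON string back into an object: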

const uploadData = JSON.parse((await query(`
  query MyQuery {
    getUploadURL
  }
`, {})).getUploadURL);
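Because the field is AWSJSON, the client only receives a string, and a malformed response would otherwise surface later as a confusing "undefined" error. A minimal sketch of a defensive parser, assuming the payload has the url and fields shape produced by createPresignedPost; the name parseUploadData is hypothetical:

```javascript
// Hypothetical helper: parse the AWSJSON string returned by getUploadURL
// and check that it has the shape a presigned POST needs.
function parseUploadData(raw) {
  const data = JSON.parse(raw);
  const hasUrl = typeof data.url === "string";
  const hasFields = data.fields !== null && typeof data.fields === "object";
  if (!hasUrl || !hasFields) {
    throw new Error("Unexpected getUploadURL response");
  }
  return data; // {url, fields}
}
```

It would replace the bare JSON.parse call: const uploadData = parseUploadData((await query(...)).getUploadURL);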

On the backend, the AppSync JavaScript resolver for this field invokes a Lambda function:

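import {util} from "@aws-appsync/utils";

export function request(ctx) {
  return {
    version: "2018-05-29",
    operation: "Invoke", // one synchronous invocation (BatchInvoke would send an array)
    payload: {
      type: "upload",
      userid: ctx.identity.sub, // ID of the signed-in user
    },
  };
}

export function response(ctx) {
  if (ctx.error) {
    util.error(ctx.error.message, ctx.error.type);
  }
  return ctx.result;
}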

The Lambda function then creates the presigned POST:

import {S3Client} from "@aws-sdk/client-s3";
import {createPresignedPost} from "@aws-sdk/s3-presigned-post";
import crypto from "node:crypto";

// created once and reused across invocations
const client = new S3Client();

export const handler = async ({userid}) => {
  const key = crypto.randomUUID();
  const data = await createPresignedPost(client, {
    Bucket: process.env.Bucket,
    Key: "staging/" + key,
    Fields: {
      "x-amz-meta-userid": userid,
      "x-amz-meta-key": key,
    },
    Conditions: [
      ["content-length-range", 0, 10000000], // file size: 0 to 10 MB
      ["starts-with", "$Content-Type", "image/"], // only images
      ["eq", "$x-amz-meta-userid", userid], // the client sees this value but cannot change it
      ["eq", "$x-amz-meta-key", key],
    ],
  });
  // data is {url, fields}; return it unwrapped so the client can read
  // uploadData.url and uploadData.fields directly
  return data;
};

There are several subtle details here. First, the backend defines the entire object key. This is a best practice: the fewer parts the client can influence, the fewer problems it can cause.

Second, the key starts with a staging/ prefix. This is another best practice: the client might never call the mutation defined later, which leaves the uploaded image unused. A common prefix for these "uploaded but not processed" files lets an S3 lifecycle rule clean them up.
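The lifecycle rule itself is not part of the original setup. A minimal sketch of the input for the AWS SDK v3 PutBucketLifecycleConfigurationCommand, assuming a one-day expiry is acceptable; the rule ID, the function name stagingCleanupConfig, and the default of one day are all illustrative assumptions:

```javascript
// Builds the input for PutBucketLifecycleConfigurationCommand
// (@aws-sdk/client-s3). Objects under staging/ that the addImage
// mutation never moved out expire after `days` days.
// Rule ID and the 1-day default are assumptions, not requirements.
function stagingCleanupConfig(bucket, days = 1) {
  return {
    Bucket: bucket,
    LifecycleConfiguration: {
      Rules: [
        {
          ID: "expire-unprocessed-uploads",
          Status: "Enabled",
          Filter: {Prefix: "staging/"},
          Expiration: {Days: days},
        },
      ],
    },
  };
}
```

Note that this API replaces the bucket's whole lifecycle configuration, so any existing rules would have to be included in Rules. In practice, the same rule is often declared in the infrastructure code that creates the bucket.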

Finally, the Conditions restrict what can be uploaded. The content-length-range condition limits the file size to 10 MB, the starts-with condition on $Content-Type only accepts images, and the eq conditions on the $x-amz-meta- fields make the metadata mandatory and pin it to the values chosen by the backend.
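S3 enforces these conditions only when the POST arrives, so a rejected file shows up as a failed upload. A sketch of an optional client-side pre-check that mirrors the two file conditions, assuming the same 10,000,000-byte limit and image/ prefix as the Lambda above; checkUpload is a hypothetical name, and it is a convenience only, since the signed policy remains the real enforcement:

```javascript
// Mirrors the presigned-POST conditions on the client to give an early,
// readable error. The limits are copied from the Lambda's Conditions
// and have to be kept in sync by hand.
const MAX_BYTES = 10000000;
const TYPE_PREFIX = "image/";

function checkUpload({size, type}) {
  const problems = [];
  if (size > MAX_BYTES) {
    problems.push(`file is ${size} bytes, limit is ${MAX_BYTES}`);
  }
  if (!type.startsWith(TYPE_PREFIX)) {
    problems.push(`content type "${type}" is not an image`);
  }
  return problems; // an empty array means the file should pass the policy
}
```

A browser File object can be passed directly, since it has both size and type properties.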

Once the client has the upload data, it sends the file to S3 in a multipart POST request:

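const formData = new FormData();
// content type, checked by the starts-with condition
formData.append("Content-Type", file.type);
// the presigned fields: key, policy, signature, and metadata
Object.entries(uploadData.fields).forEach(([k, v]) => {
  formData.append(k, v);
});
// the file itself must be the last field: S3 ignores anything after it
formData.append("file", file);
// send it
const postRes = await fetch(uploadData.url, {
  method: "POST",
  body: formData,
});
if (!postRes.ok) {
  // S3 describes the rejected condition in an XML error body
  throw new Error(`Upload failed: ${postRes.status} ${await postRes.text()}`);
}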
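The file has to be the last field of the form, a rule that is easy to break when the code is refactored. A sketch that packages the form building into one function, assuming a runtime with global FormData and Blob (browsers, or Node.js 18 and later); buildUploadForm is a hypothetical name:

```javascript
// Builds the multipart body for the presigned POST.
// S3 ignores form fields that come after the file, so "file" is appended last.
function buildUploadForm(uploadData, file) {
  const formData = new FormData();
  formData.append("Content-Type", file.type); // checked by the starts-with condition
  for (const [k, v] of Object.entries(uploadData.fields)) {
    formData.append(k, v); // key, policy, signature and x-amz-meta-* fields
  }
  formData.append("file", file);
  return formData;
}
```

The upload then becomes fetch(uploadData.url, {method: "POST", body: buildUploadForm(uploadData, file)}).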

Processing the upload

After the file is uploaded to S3, the backend usually needs to process it in some way, such as adding a database entry that points to the new content. In this example, it adds an item to a DynamoDB table.

Why require a separate call from the client instead of an event-driven approach on the backend, such as S3 Event Notifications? The key reason is latency. As the S3 documentation puts it: "Typically, event notifications are delivered in seconds but can sometimes take a minute or longer." With a synchronous mutation, the client knows the image is registered as soon as the call returns.

The schema needs a mutation for the operation:

type Mutation {
  addImage(key: ID!): Image!
}

Then the client calls it with the key of the uploaded object:

const addedImage = (await query(`
  mutation AddImage($key: ID!) {
    addImage(key: $key) {
      key
      url
      public
    }
  }
`, {key: uploadData.fields["x-amz-meta-key"]})).addImage;

On the backend, the resolver invokes a Lambda function that performs several steps.

First, it checks that the user who uploaded the object is the same as the one calling this mutation:

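import {S3Client, HeadObjectCommand, CopyObjectCommand, DeleteObjectCommand} from "@aws-sdk/client-s3";
import {DynamoDBClient, PutItemCommand, GetItemCommand} from "@aws-sdk/client-dynamodb";
import {unmarshall} from "@aws-sdk/util-dynamodb";

export const handler = async ({key, userid}) => {
  const {Bucket, ImageTable} = process.env;
  const s3Client = new S3Client();
  const ddbClient = new DynamoDBClient();
  // S3 returns user metadata without the x-amz-meta- prefix
  const obj = await s3Client.send(new HeadObjectCommand({
    Bucket,
    Key: `staging/${key}`,
  }));
  if (obj.Metadata.userid !== userid) {
    console.error(`User differs from the uploading user. User: ${userid}, uploading user: ${obj.Metadata.userid}`);
    throw new Error("Forbidden");
  }
  // ...
};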

Then it moves the object out of the staging area. S3 has no rename operation, so the move is a copy followed by a delete:

export const handler = async ({key, userid}) => {
  // ...
  await s3Client.send(new CopyObjectCommand({
    Bucket,
    Key: key,
    CopySource: encodeURI(`${Bucket}/staging/${key}`),
  }));
  await s3Client.send(new DeleteObjectCommand({
    Bucket,
    Key: `staging/${key}`,
  }));
  // ...
};
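The CopySource value is built with encodeURI, which works for the UUID keys used here. If keys could ever contain characters such as "#" or "?", which encodeURI leaves unencoded, encoding each path segment separately is safer. A sketch, with copySourceFor as a hypothetical helper:

```javascript
// Builds the CopySource value ("bucket/key") for CopyObjectCommand.
// Each path segment is percent-encoded on its own so "/" stays a
// separator while characters like "#" and "?" are escaped.
function copySourceFor(bucket, key) {
  const encodedKey = key.split("/").map((segment) => encodeURIComponent(segment)).join("/");
  return `${bucket}/${encodedKey}`;
}
```

In the handler, it would be used as CopySource: copySourceFor(Bucket, `staging/${key}`).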

Finally, it writes the item to the database and reads it back to return it:

export const handler = async ({key, userid}) => {
  // ...
  const isPublic = false;
  await ddbClient.send(new PutItemCommand({
    TableName: ImageTable,
    Item: {
      key: {S: key},
      userid: {S: userid},
      public: {BOOL: isPublic},
      "userid#public": {S: userid + "#" + isPublic},
      added: {N: Date.now().toString()},
    },
  }));
  // a strongly consistent read is guaranteed to see the item just written
  const res = await ddbClient.send(new GetItemCommand({
    TableName: ImageTable,
    Key: {
      key: {S: key},
    },
    ConsistentRead: true,
  }));
  return unmarshall(res.Item);
};
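The composite "userid#public" attribute suggests an index for listing one user's public or private images, though the index itself is not shown here. A sketch of the matching QueryCommand input for @aws-sdk/client-dynamodb, assuming a global secondary index named userid-public-index (hypothetical) whose partition key is "userid#public"; listImagesQuery is also a hypothetical name:

```javascript
// Builds QueryCommand input that lists a user's images by visibility.
// Assumes a GSI (hypothetical name) with "userid#public" as partition key.
function listImagesQuery(tableName, userid, isPublic) {
  return {
    TableName: tableName,
    IndexName: "userid-public-index", // hypothetical index name
    // "#" cannot appear in a bare attribute name inside an expression,
    // so the name is supplied through ExpressionAttributeNames.
    KeyConditionExpression: "#pk = :pk",
    ExpressionAttributeNames: {"#pk": "userid#public"},
    // same format as the handler writes: userid + "#" + isPublic
    ExpressionAttributeValues: {":pk": {S: `${userid}#${isPublic}`}},
  };
}
```

The result would be sent with ddbClient.send(new QueryCommand(listImagesQuery(ImageTable, userid, true))), and each returned item unmarshalled as in the handler above.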

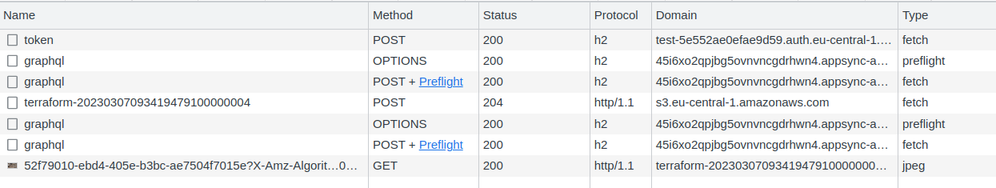

The full flow as seen by the client:

Conclusion

Implementing file uploads properly is not easy: it requires a multi-step exchange between the frontend and the backend. But signed URLs provide a robust and reliable way to handle large files that the API could never accept directly.