How to cache ssm.getParameter calls in a Lambda function

Lower costs by staying within the standard SSM Parameter Store throughput limit

SSM Parameter Store

Storing secrets in the Systems Manager Parameter Store decouples the application logic from the secrets. The person, or process, doing deployments does not need to know the value of the secret, only the name of the parameter. This allows securely storing things like access keys to third-party systems, database passwords, and encryption keys. With the correct permissions, the Lambda function can read the value of the parameter by making a getParameter call to the SSM service.

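```javascript
import {SSMClient, GetParameterCommand} from "@aws-sdk/client-ssm";

// reading a secret value from the SSM Parameter Store
// (send() returns a Promise, and the value is inside the response's Parameter object)
const {Parameter} = await new SSMClient().send(new GetParameterCommand({
	Name: process.env.PARAMETER,
	WithDecryption: true,
}));
const secretValue = Parameter.Value;
```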

Environment variables are a similar construct that allows passing arbitrary values to a function. But a separate storage for secrets allows better decoupling: with environment variables, everybody who can deploy the function needs to know the values by definition, which might not be acceptable.

```javascript
// a secret is passed as an environment variable
const secretValue = process.env.SECRET_VALUE;
```

But while environment variables scale with the function, SSM does not. It has a maximum throughput, defined in transactions per second (TPS), which is only 40 by default. Since Lambda can handle traffic several orders of magnitude higher, this can become a bottleneck.

AWS reacted like a good salesman, adding an option to increase the limit to 1000 TPS at a higher cost. This should be enough for almost all use cases.

But why pay for something that you can solve yourself?

How to add parameters

To add a parameter, go to the AWS Systems Manager service and select the Parameter Store item under Application Management. This lists all the parameters defined in the region, and you can add new ones here.

The Parameter Store is a simple key-value store: each parameter has a name and an associated value. The SecureString type is a String encrypted with KMS. For these parameters, the WithDecryption flag of the getParameter call controls whether the response contains the decrypted value or only the ciphertext.

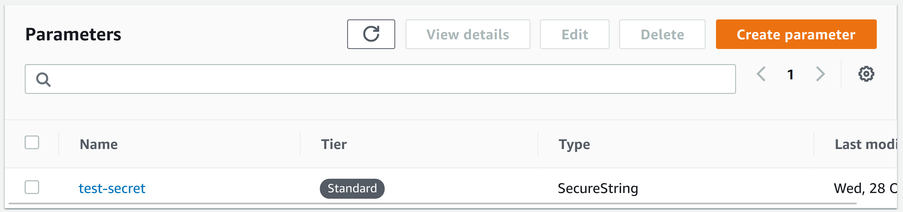

Here's a secret with the name test-secret that is a SecureString:

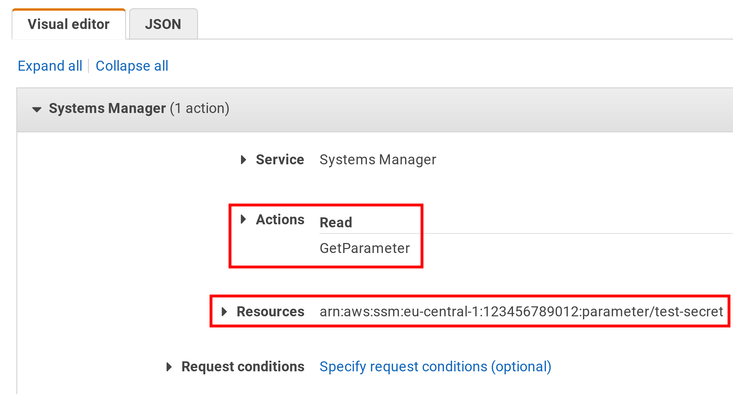

By default, the Lambda function cannot read this value. Let's add a policy that grants access:

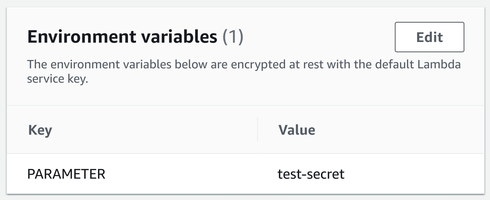
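The policy in the screenshot can also be expressed as a JSON document. The sketch below builds one as a plain JavaScript object. The helper `buildReadParameterPolicy`, the region, the account ID, and the optional `kmsKeyArn` are hypothetical placeholders.

The sketch rests on two assumptions. First, the parameter ARN follows the `arn:aws:ssm:<region>:<account>:parameter/<name>` form. Second, the role needs an explicit `kms:Decrypt` grant only when the SecureString uses a customer managed KMS key instead of the default `aws/ssm` key.

```javascript
// Hypothetical helper: builds an IAM policy document that allows reading one
// parameter. Region, account ID, and names are placeholders.
const buildReadParameterPolicy = ({region, accountId, parameterName, kmsKeyArn}) => {
	// parameter ARNs drop the leading "/" of hierarchical names such as /prod/db
	const name = parameterName.replace(/^\//, "");
	const statements = [{
		Effect: "Allow",
		Action: "ssm:GetParameter",
		Resource: `arn:aws:ssm:${region}:${accountId}:parameter/${name}`,
	}];
	// assumption: only a customer managed KMS key needs an explicit kms:Decrypt grant
	if (kmsKeyArn !== undefined) {
		statements.push({Effect: "Allow", Action: "kms:Decrypt", Resource: kmsKeyArn});
	}
	return {Version: "2012-10-17", Statement: statements};
};

const policy = buildReadParameterPolicy({
	region: "eu-central-1",
	accountId: "123456789012",
	parameterName: "test-secret",
});
console.log(JSON.stringify(policy, null, 2));
```

Scoping the `Resource` to the single parameter, rather than using `*`, keeps the function from reading other secrets in the account.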

Finally, the function needs to know the name of the parameter. Passing it in instead of hardcoding it allows deploying identical environments. An environment variable, such as PARAMETER, is a good place to store it.
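A missing environment variable would otherwise show up only as a confusing SSM error. A small guard can fail fast instead. The helper below is a hypothetical sketch that reads the `PARAMETER` variable used in the code examples. It takes the environment as an argument, defaulting to `process.env`, so it can be exercised without a deployment.

```javascript
// Hypothetical helper: read the parameter name from the environment and fail
// fast with a clear message if the deployment did not set it
const getParameterName = (env = process.env) => {
	const name = env.PARAMETER;
	if (name === undefined || name === "") {
		throw new Error("The PARAMETER environment variable is not set");
	}
	return name;
};
```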

This is all the function needs to access the secret. Whenever the value is needed, just read the name from the environment, then issue a getParameter call:

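```javascript
import {SSMClient, GetParameterCommand} from "@aws-sdk/client-ssm";

export const handler = async (event) => {
	// the parameter name comes from the environment, the value from SSM
	const {Parameter} = await new SSMClient().send(new GetParameterCommand({
		Name: process.env.PARAMETER,
		WithDecryption: true,
	}));
	const secretValue = Parameter.Value;
};
```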
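The getParameter call resolves to a response object, and the secret itself is nested in `Parameter.Value`. Using the whole response as the secret is an easy mistake. The sketch below pulls the value out and fails loudly when it is missing. The helper name `extractParameterValue` and the example values are hypothetical; the `Parameter` envelope with `Name`, `Type`, `Value`, and `Version` fields follows the SSM GetParameter response.

```javascript
// Hypothetical helper: GetParameterCommand resolves to an envelope object,
// and the parameter's value is at Parameter.Value
const extractParameterValue = (response) => {
	const value = response?.Parameter?.Value;
	if (value === undefined) {
		throw new Error("The response does not contain a parameter value");
	}
	return value;
};

// Made-up response for a SecureString read with WithDecryption: true
const exampleResponse = {
	Parameter: {Name: "test-secret", Type: "SecureString", Value: "my-secret-value", Version: 1},
};
console.log(extractParameterValue(exampleResponse)); // my-secret-value
```

With `WithDecryption: false`, the same field would hold the ciphertext of a SecureString instead of the plaintext.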

Caching SSM calls

The above code issues an ssm.getParameter call every time the function runs. This limits the scalability of the function to the maximum TPS of the SSM service, which is 40 by default. Let's see how we could do better and issue far fewer requests to the service!

Lambda reuses function instances. The first request starts the code in a new instance, and subsequent calls may reach the same running instance. This enables per-instance storage, as all the variables declared outside the handler function are still accessible during the next call.

```javascript
const variable = ""; // <- shared between calls

export const handler = async (event) => {
	const localVariable = ""; // <- per-request
};
```
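The sketch below shows the difference between the two scopes. It simulates two invocations on the same instance by calling the handler twice in one process. The counter outside the handler keeps its value, while `receivedAt` is recreated on every call. On a real deployment, a newly started instance would begin again from zero. `invocationCount` and `receivedAt` are illustrative names.

```javascript
// Module-level state: initialized once per instance, kept between invocations
let invocationCount = 0;

// In Lambda this would be exported; here it is a plain function so that
// calling it twice simulates two invocations on the same instance
const handler = async (event) => {
	// per-request state: recreated on every call
	const receivedAt = Date.now();
	invocationCount += 1;
	return {invocationCount, receivedAt};
};
```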

This allows an easy caching mechanism: initialize an object that is shared between the calls, and use it in the handler function to interface with SSM. The cache object can then store the last retrieved value and decide when to refresh it.

Here's a caching function that implements this:

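```javascript
import {SSMClient, GetParameterCommand} from "@aws-sdk/client-ssm";

const ssmClient = new SSMClient(); // one client, reused across calls

const cacheSsmGetParameter = (params, cacheTime) => {
	let lastRefreshed = undefined;
	let lastResult = undefined;
	// chain of pending calls: each one waits for the previous one to finish
	let queue = Promise.resolve();

	return () => {
		const res = queue.then(async () => {
			const currentTime = new Date().getTime();
			// fetch if there is no value yet or the cached one is older than cacheTime
			if (lastResult === undefined || lastRefreshed + cacheTime < currentTime) {
				lastResult = await ssmClient.send(new GetParameterCommand(params));
				lastRefreshed = currentTime;
			}
			return lastResult;
		});
		// a failed call must not block the ones queued after it
		queue = res.catch(() => {});
		return res;
	};
};
```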

This builds on the async function serializer pattern: while getting the parameter returns a Promise, only one call runs at a time. This ensures that when the value needs to be fetched from SSM, the fetch happens only once, even if multiple calls are waiting for the result.
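Stripped of the caching logic, the serializer pattern fits in a few lines. The sketch below is a generic version. Each call chains onto the previous one's Promise, and the `.catch` keeps one rejected call from blocking the rest of the queue. `serialize` and `slowDouble` are illustrative names.

```javascript
// Async function serializer: calls to the returned function run one at a time,
// each waiting for the previous one to settle
const serialize = (fn) => {
	let queue = Promise.resolve();
	return (...args) => {
		const res = queue.then(() => fn(...args));
		// a rejected call must not block the ones queued after it
		queue = res.catch(() => {});
		return res;
	};
};

// Usage: overlapping calls, but at most one body runs at any moment
let running = 0;
let maxRunning = 0;
const slowDouble = serialize(async (x) => {
	running += 1;
	maxRunning = Math.max(maxRunning, running);
	await new Promise((resolve) => setTimeout(resolve, 10));
	running -= 1;
	return x * 2;
});
```

Calling `slowDouble` three times without awaiting still runs the bodies one after another. In the caching function, this is what lets the later calls find the freshly fetched value instead of starting their own SSM requests.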

The function returns another function that fetches the parameter's value:

```javascript
const cacheSsmGetParameter = (params, cacheTime) => {
	// ...
};

const getParam = cacheSsmGetParameter({
	Name: process.env.PARAMETER,
	WithDecryption: true,
}, 30 * 1000); // 30 seconds

export const handler = async (event) => {
	const param = await getParam();
	return param;
};
```
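The caching logic can be observed locally without an AWS account if the SSM call and the clock are passed in as parameters. The sketch below is a hypothetical dependency-injected variant named `cacheAsync`. It uses the same expiry rule and queue as `cacheSsmGetParameter`, with `fetchValue` standing in for the SSM request and `now` for the clock.

```javascript
// Hypothetical, dependency-injected variant of cacheSsmGetParameter:
// fetchValue stands in for the SSM call and now() for the clock
const cacheAsync = (fetchValue, cacheTime, now = () => Date.now()) => {
	let lastRefreshed = undefined;
	let lastResult = undefined;
	let queue = Promise.resolve();

	return () => {
		const res = queue.then(async () => {
			const currentTime = now();
			// same expiry rule as the SSM version
			if (lastResult === undefined || lastRefreshed + cacheTime < currentTime) {
				lastResult = await fetchValue();
				lastRefreshed = currentTime;
			}
			return lastResult;
		});
		queue = res.catch(() => {});
		return res;
	};
};

// A fake clock and a counting fetcher instead of SSM
let fakeTime = 0;
let fetchCount = 0;
const getValue = cacheAsync(async () => `value-${++fetchCount}`, 30 * 1000, () => fakeTime);
```

With the 30-second cache time, three overlapping calls to `getValue` share a single fetch. Moving `fakeTime` past 30,000 ms makes the next call refresh the value.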

Cache time

Cache time is a tradeoff between freshness and efficiency. The longer a value stays in the cache, the longer it takes to pick up changes, but the fewer calls it makes to SSM.

Also, don't forget that this caching is per instance. While the Lambda service reuses instances whenever possible, it also liberally starts new ones, and those come with separate memory. This makes the cache time calculation depend on the number of instances as well as the number of invocations per second.

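Let's say a huge application uses 1000 instances at a given time, which should be a reasonable upper limit. If each instance refreshes its value once per cache period, the fleet sends 1000 / cache time requests per second. Staying within the 40 TPS standard throughput of SSM therefore needs a cache time of at least 25 seconds (1000 / 40).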
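The same back-of-the-envelope arithmetic can be written as two small functions. They compute an average rate, assuming each instance refreshes about once per cache period. Many instances starting at the same moment can briefly exceed it. The function names are illustrative.

```javascript
// Average SSM request rate when every instance refreshes once per cache period
const ssmRequestsPerSecond = (instances, cacheTimeSeconds) => instances / cacheTimeSeconds;

// Smallest cache time that keeps that average under a TPS limit
const minCacheTimeSeconds = (instances, tpsLimit) => instances / tpsLimit;

console.log(minCacheTimeSeconds(1000, 40)); // 25
console.log(ssmRequestsPerSecond(1000, 30).toFixed(1)); // 33.3
console.log(ssmRequestsPerSecond(1000, 300).toFixed(1)); // 3.3
```

With 1000 instances, a 30-second cache stays just under the 40 TPS limit. A 5-minute cache leaves a wide margin.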

Based on this back-of-the-envelope calculation, a cache time between 30 seconds and 5 minutes should be a good value.