How to auto-disable leaked IAM credentials

Attach the AWSDenyAll policy when a user makes a request that is denied

I'm building a personal backup solution based on AWS S3. Part of that is giving access to a local process running on my laptop and phone to read and write the contents of a bucket. The way to do it is to create an IAM user, generate an access key for it, and store it locally.

But then I started thinking: how can I best secure these credentials?

In this article I'll describe a mechanism that automatically disables an IAM user when it makes a request that is denied. You'll learn in which cases this is a good solution and what the alternatives are.

What does not work

First, let's talk about why I chose an IAM user and why I'm concerned about its keys getting leaked.

Protecting client secrets locally

The first line of defense is to protect the keys locally. This involves several steps, such as making the script owned by root and not editable by the user, and using systemd credentials to store the keys encrypted:

# encrypting credentials
systemd-creds encrypt --name=aws_secret_access_key plaintext.txt aws_secret_access_key

# using in a unit file
LoadCredentialEncrypted=aws_secret_access_key:/etc/restic_configs/%i/aws_secret_access_key

But no matter how much security is put in place on the device itself, it does not change the fundamental truth: the key is stored on the device in a recoverable way. A breach that gives an attacker root access also lets them steal the keys.
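For reference, systemd exposes loaded credentials to the service as files in the directory named by the CREDENTIALS_DIRECTORY environment variable. The following is a minimal sketch of how a process could read the secret, assuming the backup process were a Node.js script; the helper name readSystemdCredential is hypothetical and not part of the original setup.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Hypothetical helper: reads a credential that systemd made available to the unit.
// systemd sets CREDENTIALS_DIRECTORY for units that use LoadCredential= or
// LoadCredentialEncrypted=; each credential is a file named after its ID.
function readSystemdCredential(name, env = process.env) {
  const dir = env.CREDENTIALS_DIRECTORY;
  if (!dir) {
    throw new Error("CREDENTIALS_DIRECTORY is not set; was the process started by systemd?");
  }
  // Trim the trailing newline that a plaintext source file usually ends with.
  return fs.readFileSync(path.join(dir, name), "utf8").trim();
}
```

Inside the unit, readSystemdCredential("aws_secret_access_key") would return the decrypted value. This only moves the secret out of plain files on disk; the point of the paragraph above still applies.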

Temporary credentials

The root of the problem is that the access key is permanent: if somebody can steal it, it's gone for good. So, why not use temporary credentials? After all, using roles instead of users is a best practice.

For example, suppose a Lambda function needs to read objects from an S3 bucket. Permission-wise, this is a role attached to the Lambda function and granted the necessary permissions. The Lambda runtime then automatically fetches the short-term keys and makes them available to the function:

// Look, no credentials!
import {S3Client, GetObjectCommand} from "@aws-sdk/client-s3";

const object = await new S3Client().send(new GetObjectCommand({
  Bucket,
  Key,
}));

So why can't something similar work in my case?

Short-term credentials still require a trust anchor: something that allows the issuance of short-term credentials. In the case of the Lambda function, this trust comes from configuring the role with the function ("passing the role").

But my laptop is outside the AWS cloud, so there is no inherent trust anchor. That's why it needs some secret stored on-device.

What about Roles Anywhere?

Roles Anywhere is a newer feature of AWS that uses certificates to issue short-term AWS credentials. A certificate can be short-term, so why won't it work here?

This only adds another layer but does not change the big picture: something permanent needs to be stored on the device, and it does not matter whether that is the AWS key itself or a certificate that can be used to get these keys.

Two types of IAM users

I found that there are two types of IAM users: one used by people, and one used by machines. For example, a new developer joins the team and you want to give them access to some resources. The easy way is to create an IAM user, attach some policies, then allow console access. Of course, configuring AWS SSO is a more scalable way, but most teams starting out with AWS usually start with a single account and with IAM users.

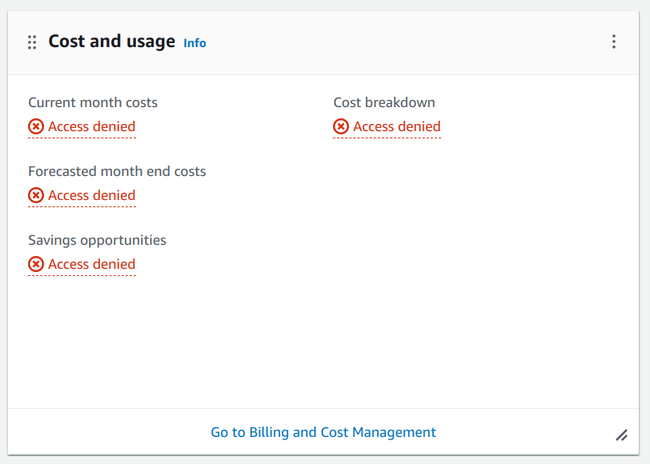

Requests sent by an actual person are inherently messy. Clicking around the console or trying out different commands with the CLI inevitably touches resources outside the allowed set.

Just opening the management console fetches data from a rather wide set of services.

As a result, denied requests are normal for humans.

The other type of IAM user is a machine user, such as in my case. I write the code that interacts with AWS and I define what the code is doing. If I define that it only reads an object from S3 then it won't go around and fetch other things:

import {S3Client, GetObjectCommand} from "@aws-sdk/client-s3";

const object = await new S3Client().send(new GetObjectCommand({
  Bucket: process.env.BUCKET,
  Key,
}));

That means if I configure the policies correctly for the exact use-case then under normal circumstances no requests will be denied.

This is the root of the idea behind this solution: when a machine user makes a request that is denied, something is wrong, and most likely the credentials have been stolen. For damage control, an automation can then disable the user's access.

GetCallerIdentity

There is a very special action in IAM: sts:GetCallerIdentity. It returns information about the current user, such as its account ID and its ARN:

{
  "UserId": "AIDA3A...",
  "Account": "7572...",
  "Arn": "arn:aws:iam::7572...:user/testing-User-SdzLVYqjlzW4"
}

It is special because it cannot be denied, and it is commonly used to check whether the AWS CLI is configured properly.

Since it prints out information about the account, it is usually the first thing somebody would run after discovering an AWS credential. That makes it a good indicator of a breach, in addition to access denied errors. And, fortunately, it is logged in CloudTrail:

{
  "eventVersion": "1.08",
  "userIdentity": {
    "type": "IAMUser",
    "..."
  },
  "eventTime": "2024-08-08T10:50:33Z",
  "eventSource": "sts.amazonaws.com",
  "eventName": "GetCallerIdentity",
  "..."
}

Architecture

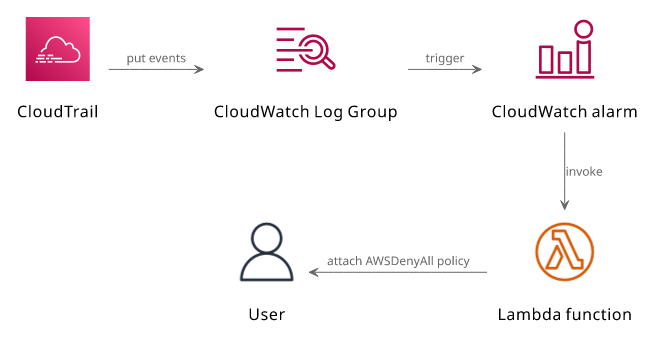

So, how to build auto-remediation?

The core of the solution is CloudTrail. It records the requests made to the AWS account and writes them to S3 and CloudWatch Logs.

Once the logs are in CloudWatch, the next step is to add a metric filter that tracks denied requests made by the user. Since each log event is a JSON document, writing a filter is easy.

With a metric that tracks the number of denied requests, an alarm can trigger a Lambda function. This is the auto-remediation part: the function can attach a policy to the user that denies all future requests.

One downside is latency, as CloudTrail events are not delivered instantly. In practice, the user is disabled within a few minutes of the offending request.
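To make the flow concrete, here is a small local simulation of the pipeline: events pass through a filter, matching events become datapoints, and an alarm decides whether remediation should run. It is only a sketch that never calls AWS. The function names, the one-minute period, and the threshold of zero are illustrative assumptions.

```javascript
// Local simulation: events -> metric filter -> alarm -> remediation decision.
// All names are hypothetical; this models the idea, not the AWS services.

const PERIOD_MS = 60 * 1000; // assumed alarm period of one minute

// Counts matching events per period, like a metric filter emitting 1 per match.
function toMetric(events, matches) {
  const buckets = new Map();
  for (const event of events) {
    if (!matches(event)) continue;
    const bucket = Math.floor(Date.parse(event.eventTime) / PERIOD_MS);
    buckets.set(bucket, (buckets.get(bucket) ?? 0) + 1);
  }
  return buckets;
}

// The alarm fires if any period has a value above the threshold.
// Periods without datapoints are simply absent, so they never breach.
function alarmFires(buckets, threshold = 0) {
  return [...buckets.values()].some((count) => count > threshold);
}

const isDenied = (event) => event.errorCode === "AccessDenied";

const events = [
  {eventTime: "2024-07-25T10:50:00Z", eventName: "ListBuckets"},
  {eventTime: "2024-07-25T10:52:35Z", eventName: "ListFunctions20150331", errorCode: "AccessDenied"},
];

const shouldDisableUser = alarmFires(toMetric(events, isDenied));
console.log(shouldDisableUser); // true: a single denied request is enough
```

The simulation ignores delivery delay. In the real setup the decision can only be made after CloudTrail has delivered the event.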

Implementation

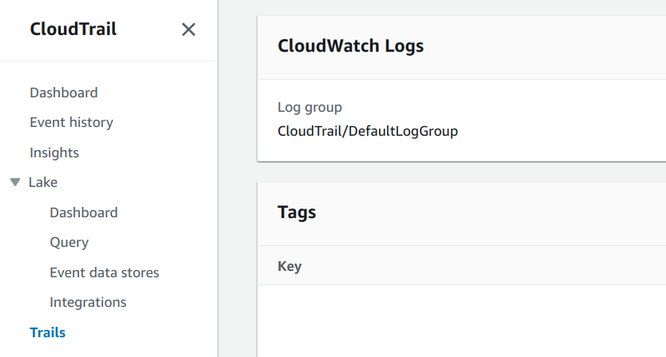

An initial requirement is that a trail is configured with a CloudWatch Logs log group.

As this is configured separately, it is a parameter for the CloudFormation stack:

Parameters:
  CloudTrailLogGroup:
    Type: String
    Description: "The CloudWatch log group where CloudTrail writes the logs"

Next, a metric filter writes a metric when access denied errors are encountered for this user:

LoginMetricFilter:
  Type: AWS::Logs::MetricFilter
  Properties:
    LogGroupName: !Ref CloudTrailLogGroup
    FilterPattern: !Sub '{($.errorCode = "AccessDenied" || $.eventName = "GetCallerIdentity") && $.userIdentity.arn = "${User.Arn}"}'
    MetricTransformations:
      - MetricValue: 1
        MetricNamespace: !Sub "${AWS::StackName}"
        MetricName: !Sub "AccessDenieds-${User}"

Note the FilterPattern: it matches events whose errorCode is AccessDenied or whose eventName is GetCallerIdentity, and in both cases the userIdentity.arn needs to match the user's ARN.
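The same condition can be written as a plain JavaScript predicate, which is handy for checking sample CloudTrail events locally before deploying the filter. This is a sketch of the logic only, not of how CloudWatch evaluates patterns; matchesFilter and the placeholder ARNs are made up for illustration.

```javascript
// Hypothetical local equivalent of the FilterPattern above.
function matchesFilter(event, userArn) {
  const suspicious =
    event.errorCode === "AccessDenied" || event.eventName === "GetCallerIdentity";
  return suspicious && event.userIdentity?.arn === userArn;
}

const userArn = "arn:aws:iam::111122223333:user/backup-user"; // placeholder ARN

const denied = {eventName: "ListFunctions20150331", errorCode: "AccessDenied", userIdentity: {arn: userArn}};
const whoAmI = {eventName: "GetCallerIdentity", userIdentity: {arn: userArn}};
const normal = {eventName: "ListBuckets", userIdentity: {arn: userArn}};
const otherUser = {eventName: "GetCallerIdentity", userIdentity: {arn: "arn:aws:iam::111122223333:user/someone-else"}};

console.log([denied, whoAmI, normal, otherUser].map((e) => matchesFilter(e, userArn)));
// [ true, true, false, false ]
```

Like the pattern it mirrors, the sketch compares errorCode exactly, so an event carrying a different error code would not be counted.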

Here's an example event:

{
  "eventVersion": "1.08",
  "userIdentity": {
    "type": "IAMUser",
    "principalId": "AIDAUB3O2IQ5EEQKVGBG2",
    "arn": "arn:aws:iam::278868411450:user/test-User-s4G9yXBxXESF",
    "accountId": "278868411450",
    "accessKeyId": "AKIAUB3O2IQ5MQXZAOHI",
    "userName": "test-User-s4G9yXBxXESF"
  },
  "eventTime": "2024-07-25T10:52:35Z",
  "eventSource": "lambda.amazonaws.com",
  "eventName": "ListFunctions20150331",
  "awsRegion": "eu-central-1",
  "sourceIPAddress": "81.183.220.118",
  "userAgent": "aws-cli/2.17.14 md/awscrt#0.20.11 ua/2.0 os/linux#6.9.10-arch1-1 md/arch#x86_64 lang/python#3.12.4 md/pyimpl#CPython cfg/retry-mode#standard md/installer#source md/distrib#arch md/prompt#off md/command#lambda.list-functions",
  "errorCode": "AccessDenied",
  "errorMessage": "User: arn:aws:iam::278868411450:user/test-User-s4G9yXBxXESF is not authorized to perform: lambda:ListFunctions on resource: * because no identity-based policy allows the lambda:ListFunctions action",
  "requestParameters": null,
  "responseElements": null,
  "requestID": "66e4c7f5-7156-4216-b688-236ffd132e58",
  "eventID": "3f882e4b-cb95-4fdd-acb2-236344b89b9c",
  "readOnly": true,
  "eventType": "AwsApiCall",
  "managementEvent": true,
  "recipientAccountId": "278868411450",
  "eventCategory": "Management",
  "tlsDetails": {
    "tlsVersion": "TLSv1.3",
    "cipherSuite": "TLS_AES_128_GCM_SHA256",
    "clientProvidedHostHeader": "lambda.eu-central-1.amazonaws.com"
  }
}

Then an alarm is configured to trigger when this metric goes above 0:

AccessDeniedAlarm:
  Type: AWS::CloudWatch::Alarm
  Properties:
    ComparisonOperator: GreaterThanThreshold
    EvaluationPeriods: 1
    Threshold: 0
    Namespace: !Sub "${AWS::StackName}"
    MetricName: !Sub "AccessDenieds-${User}"
    Statistic: Maximum
    Period: 60
    TreatMissingData: notBreaching
    ActionsEnabled: true
    AlarmActions:
      - !GetAtt LambdaFunction.Arn

Lambda

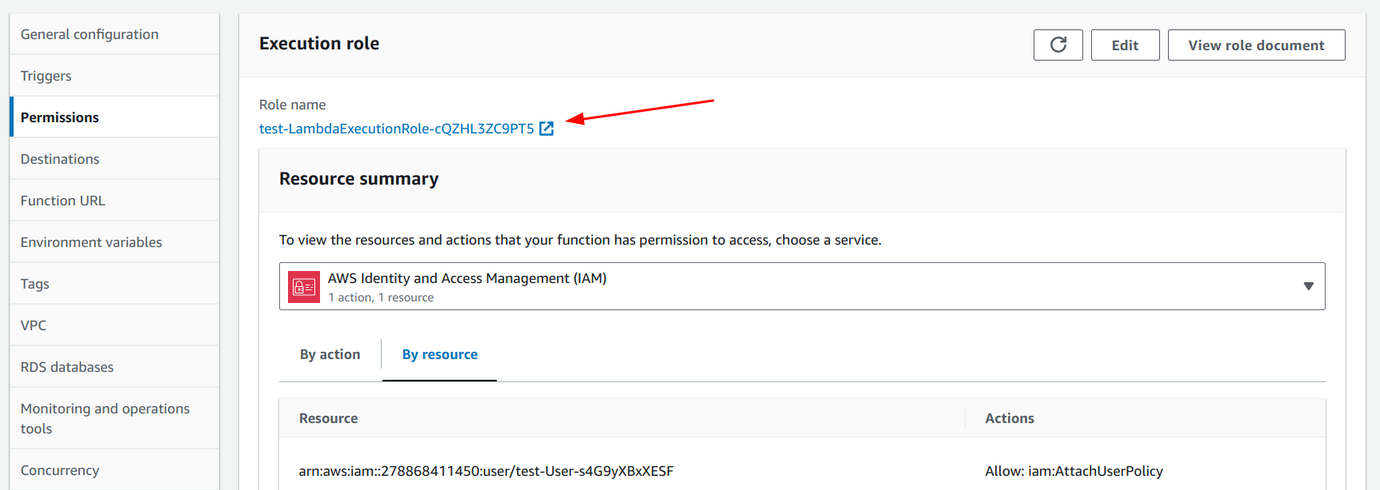

The Lambda function needs several resources.

First, a log group:

LambdaLogGroup:
  Type: AWS::Logs::LogGroup
  Properties:
    RetentionInDays: 365

Then a role that allows logging and attaching the AWSDenyAll policy to the user:

LambdaExecutionRole:
  Type: AWS::IAM::Role
  Properties:
    AssumeRolePolicyDocument:
      Version: '2012-10-17'
      Statement:
        - Effect: Allow
          Principal:
            Service:
              - lambda.amazonaws.com
          Action:
            - sts:AssumeRole
    Policies:
      - PolicyName: allow-logs
        PolicyDocument:
          Version: '2012-10-17'
          Statement:
            - Effect: Allow
              Action:
                - 'logs:PutLogEvents'
                - 'logs:CreateLogStream'
              Resource: !Sub "${LambdaLogGroup.Arn}:*"
            - Effect: Allow
              Action:
                - 'iam:AttachUserPolicy'
              Resource: !GetAtt User.Arn
              Condition:
                StringEquals:
                  "iam:PolicyARN": "arn:aws:iam::aws:policy/AWSDenyAll"

Then the function itself:

LambdaFunction:
  Type: AWS::Lambda::Function
  Properties:
    Runtime: nodejs20.x
    Role: !GetAtt LambdaExecutionRole.Arn
    Handler: index.handler
    ReservedConcurrentExecutions: 1
    Timeout: 30
    Code:
      ZipFile: |
        const {IAMClient, AttachUserPolicyCommand} = require("@aws-sdk/client-iam");

        exports.handler = async (event) => {
          const res = await new IAMClient().send(new AttachUserPolicyCommand({
            UserName: process.env.UserName,
            PolicyArn: "arn:aws:iam::aws:policy/AWSDenyAll",
          }));
          console.log(res);
        };
    LoggingConfig:
      LogGroup: !Ref LambdaLogGroup
      LogFormat: JSON
    Environment:
      Variables:
        UserName: !Ref User

Finally, a permission for the alarm to call this function:

InvokeLambdaPermission:
  Type: AWS::Lambda::Permission
  Properties:
    FunctionName: !GetAtt LambdaFunction.Arn
    Action: "lambda:InvokeFunction"
    Principal: "lambda.alarms.cloudwatch.amazonaws.com"
    SourceArn: !GetAtt AccessDeniedAlarm.Arn

Testing

Let's see how it works in action!

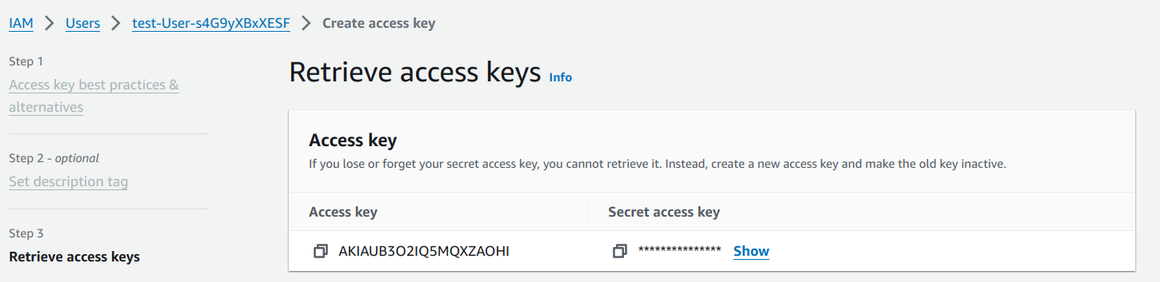

Create an access key for the user for testing:

The user can list S3 buckets:

$ aws s3 ls

2020-06-22 21:11:15 cf-templates-c2cqm2z6vtrr-eu-central-1

2019-06-29 09:40:07 cf-templates-c2cqm2z6vtrr-us-east-1But listing Lambda functions is denied:

$ aws lambda list-functions

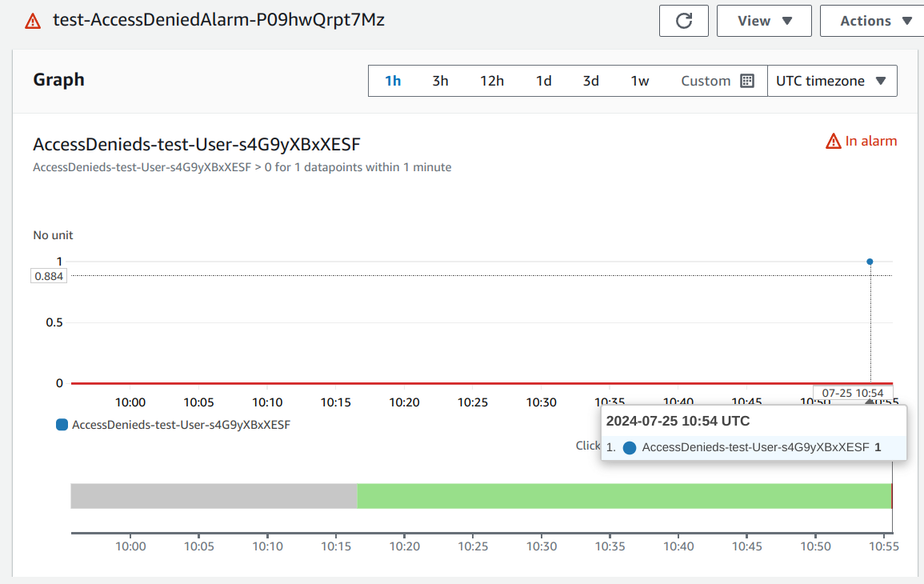

An error occurred (AccessDeniedException) when calling the ListFunctions operation: User: arn:aws:iam::278868411450:user/test-User-s4G9yXBxXESF is not authorized to perform: lambda:ListFunctions on resource: * because no identity-based policy allows the lambda:ListFunctions actionThis triggers the alarm in a few minutes:

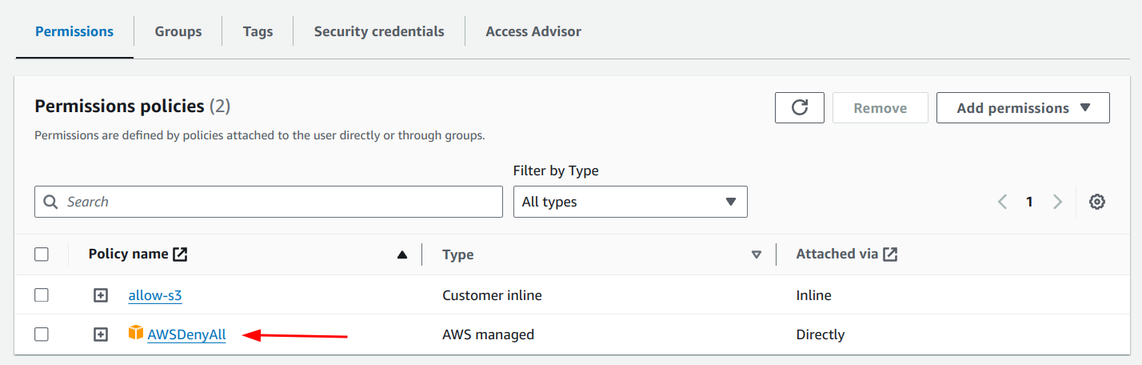

Now the AWSDenyAll policy is attached automatically to the user:

And even the S3 action is denied:

$ aws s3 ls
An error occurred (AccessDenied) when calling the ListBuckets operation: Access Denied
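The remediation step can also be exercised without deploying anything by injecting a stub in place of the IAM client. The sketch below assumes a small refactoring of the handler into a factory; makeHandler and the stub are hypothetical and not part of the stack above.

```javascript
const DENY_ALL = "arn:aws:iam::aws:policy/AWSDenyAll";

// Builds a handler around an injected attachUserPolicy function so the logic
// can run without AWS. In Lambda, that function would wrap
// new IAMClient().send(new AttachUserPolicyCommand(input)).
function makeHandler(attachUserPolicy, userName) {
  return async () => {
    const input = {UserName: userName, PolicyArn: DENY_ALL};
    await attachUserPolicy(input);
    return input;
  };
}

// Stub that records calls instead of talking to IAM.
const calls = [];
const handler = makeHandler(async (input) => { calls.push(input); }, "test-user");

handler().then((input) => {
  // Logs how many times the stub was called and which policy was requested.
  console.log(calls.length, input.PolicyArn);
});
```

A local run like this only checks that the handler requests the right policy for the right user. The permissions and the alarm wiring still need the end-to-end test shown above.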