How CloudFront speeds up content delivery

How edge locations and a dedicated network make for faster connections

Let's look at a typical web-based architecture! Usually, the app is hosted in an AWS region, running on EC2 servers or on Lambda functions behind API Gateway. When visitors browse the page, their connections go all the way to this region.

For visitors close to the AWS region that hosts the app, latency is low and the connection is established quickly. The packets travel only a short distance, which takes little time.

But for visitors far from the region, potentially on a different continent, latency is much higher. The result is a significantly longer wait, especially for the first page.
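Here are some rough numbers. Light in optical fiber travels at about two thirds of its vacuum speed, roughly 200,000 km/s, so distance alone puts a floor on the roundtrip time. The sketch below computes that floor. The distances are hypothetical round figures. Real paths are longer than a straight line, and routing and queuing add delay on top of this minimum.

```python
# Approximate speed of light in optical fiber (about 2/3 of c), in km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on the roundtrip time over fiber: there and back, no queuing or detours."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Hypothetical one-way distances from a visitor to the AWS region hosting the app.
for label, km in [("nearby visitor", 500), ("visitor on another continent", 9000)]:
    print(f"{label}: at least {min_rtt_ms(km):.0f} ms per roundtrip")
```

This prints a floor of 5 ms per roundtrip for the nearby visitor and 90 ms for the distant one. Every roundtrip the browser needs before the page loads pays that cost again.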

Let's see how adding CloudFront to the mix speeds up connections for this second group of visitors!

Physical setup

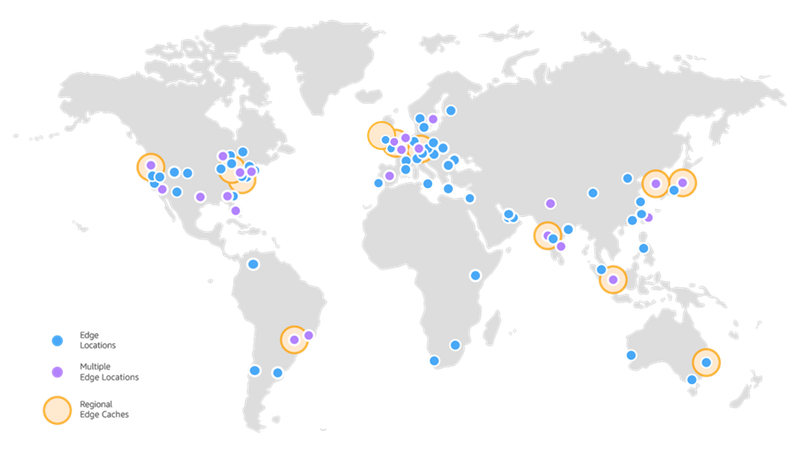

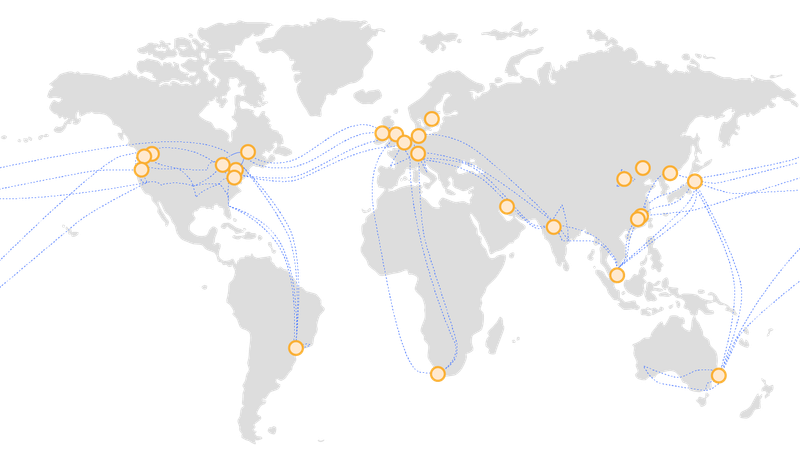

CloudFront uses the edge network of AWS, consisting of more than 200 data centers all over the world.

(Image taken from https://aws.amazon.com/cloudfront/features/)

When visitors connect to a CloudFront distribution, they connect to the edge location closest to them. This means that no matter where your webapp is hosted, the connection is made to a nearby server.
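The idea can be modeled as "pick the edge with the lowest latency for this visitor". This is only a simplification. CloudFront does its routing through DNS and takes more than one measurement into account. The edge names and latencies below are hypothetical.

```python
# Hypothetical roundtrip times (ms) from one visitor to a few edge locations.
edge_rtt_ms = {"edge-frankfurt": 12.0, "edge-virginia": 95.0, "edge-tokyo": 240.0}


def pick_edge(rtts: dict[str, float]) -> str:
    """Return the name of the edge location with the lowest roundtrip time."""
    return min(rtts, key=rtts.get)


print(pick_edge(edge_rtt_ms))  # edge-frankfurt
```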

Shorter handshakes

Having data centers closer to users is nice, but the data still lives in the central region, so packets still need to travel all the way there. How, then, does adding a new box to the architecture lower latency for faraway visitors?

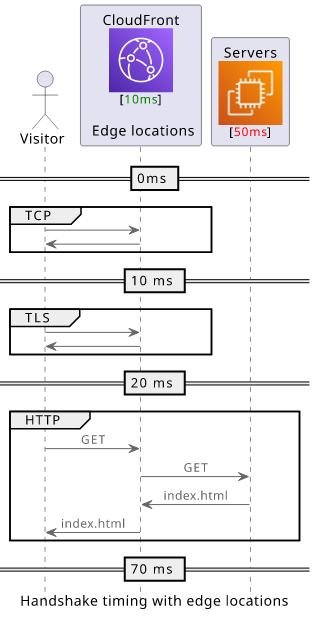

The answer is that it makes handshakes shorter.

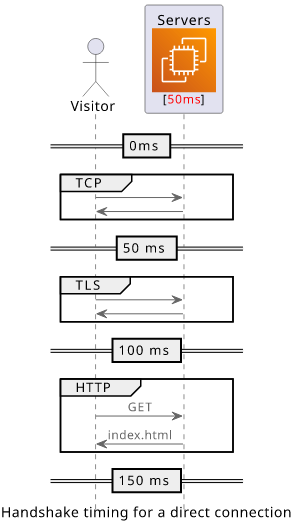

When a visitor goes to your site, the browser needs to establish a connection before it can send the HTTP request. Not counting DNS resolution, the TCP handshake takes one roundtrip and the TLS handshake takes at least one more. TLS 1.3 needs one roundtrip for TLS, while versions preceding TLS 1.3 need two.
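The counting rule above fits in a small helper. This is a sketch of full handshakes only. It ignores optimizations such as TCP Fast Open, TLS session resumption, TLS 1.3 0-RTT, and HTTP/3 over QUIC, all of which change the count.

```python
def handshake_roundtrips(tls_version: str) -> int:
    """Roundtrips needed before the HTTP request can be sent (DNS not counted).

    TCP needs 1 roundtrip. A full TLS 1.3 handshake adds 1 more,
    while TLS 1.2 and earlier add 2.
    """
    tcp = 1
    tls = 1 if tls_version == "1.3" else 2
    return tcp + tls


print(handshake_roundtrips("1.2"))  # 3
print(handshake_roundtrips("1.3"))  # 2
```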

When the server is closer, each roundtrip is shorter. Because the handshake needs several roundtrips, every millisecond saved per roundtrip is multiplied. Only the HTTP request and response travel the full distance. The handshake roundtrips before them (three with TLS versions before 1.3) go only to the edge location.
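Below is a back-of-the-envelope comparison of the time until the first response byte arrives. It rests on several assumptions. The visitor-to-edge-to-origin path is taken to be about as long as the direct path. The edge is assumed to already hold a warm origin connection. Server processing time, DNS, and TCP slow start are ignored. The RTT values are hypothetical.

```python
def ttfb_direct_ms(rtt_origin: float, handshakes: int) -> float:
    # Every handshake roundtrip and the HTTP roundtrip travel the full distance.
    return (handshakes + 1) * rtt_origin


def ttfb_via_edge_ms(rtt_edge: float, rtt_origin: float, handshakes: int) -> float:
    # Handshakes stop at the nearby edge; only the HTTP exchange travels to the
    # origin, assuming the edge already has an open origin connection.
    return handshakes * rtt_edge + rtt_origin


# Hypothetical: visitor is 150 ms (RTT) from the origin region and 10 ms from
# the nearest edge, using TLS 1.2 (3 handshake roundtrips).
print(ttfb_direct_ms(150, 3))        # 600
print(ttfb_via_edge_ms(10, 150, 3))  # 180
```

In this model, the saving grows with the number of handshake roundtrips and with the gap between the edge RTT and the origin RTT.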

The edge server needs its own connection to the origin server (called the origin connection), but this connection can be reused across visitor connections. If there is a steady stream of traffic, no visitor needs to wait for the long-distance handshakes.

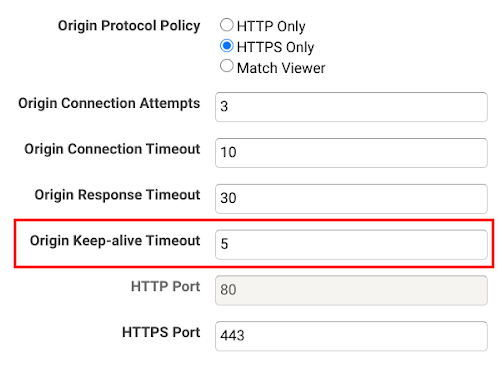

For a custom origin, you can configure how long this connection stays open using the Origin Keep-alive Timeout setting:

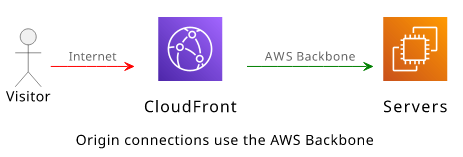
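A minimal sketch shows how the timeout interacts with traffic patterns. It assumes a single pooled origin connection that the edge closes after a period with no requests, and it counts how many requests have to pay for a fresh long-distance handshake. The function name and the arrival times are hypothetical. A real edge location runs many servers and connections.

```python
def count_cold_origin_connections(arrivals_s: list[float], keepalive_timeout_s: float) -> int:
    """Count requests that must open a new origin connection.

    Simplified model: one pooled origin connection, closed after
    `keepalive_timeout_s` seconds without any request.
    """
    cold = 0
    last_used = None
    for t in sorted(arrivals_s):
        if last_used is None or t - last_used > keepalive_timeout_s:
            cold += 1  # no open connection: full TCP + TLS handshake to the origin
        last_used = t  # each request keeps the connection alive
    return cold


# Hypothetical request arrival times (seconds) at one edge location.
arrivals = [0, 2, 4, 20, 21, 50]
print(count_cold_origin_connections(arrivals, 5))   # 3
print(count_cold_origin_connections(arrivals, 30))  # 1
```

The bursty traffic keeps the connection warm only when the gaps between requests fit within the timeout. A longer timeout turns two of the three cold requests into warm ones.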

Backbone network

Apart from reusing the origin connection between users, edge locations also have an advantage in connection speed and predictability. They use the AWS backbone network, a dedicated fiber-optic network that connects AWS data centers all over the world.

(Image taken from https://aws.amazon.com/about-aws/global-infrastructure/global_network/)

When an edge location forwards the request to the origin server it uses the backbone network instead of the public internet. Packets travel roughly the same distance, but on a congestion-free connection.

The public internet is an oversubscribed network where congestion happens: when a link is overloaded, packets can be delayed or lost. On a congestion-free network this cannot happen, at least during normal operation. The result is less variability in latency, also called jitter.
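There are several ways to put a number on jitter. A simple one is the mean absolute difference between consecutive latency samples, which the sketch below uses (standard deviation is another common choice). The samples are made up to contrast a congested path with a dedicated one over roughly the same distance.

```python
from statistics import mean


def jitter_ms(samples: list[float]) -> float:
    """Mean absolute difference between consecutive latency samples."""
    diffs = [abs(b - a) for a, b in zip(samples, samples[1:])]
    return mean(diffs)


# Hypothetical RTT samples (ms) for the same request, repeated five times.
public_internet = [80.0, 95.0, 140.0, 82.0, 120.0]
backbone = [80.0, 81.0, 80.0, 82.0, 81.0]

print(jitter_ms(public_internet))  # 39.0
print(jitter_ms(backbone))         # 1.25
```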

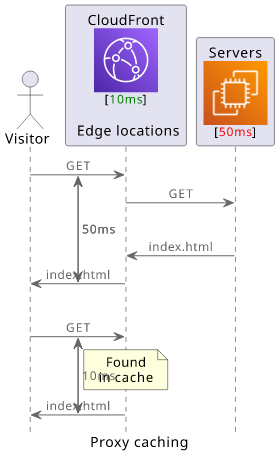

Proxy caching

Apart from bringing connections closer to users and using a dedicated network, CloudFront can also cache content at the edge. This is called edge caching or proxy caching.

Once a user has requested a file, the edge location can, depending on the cache behavior configuration, serve later requests for the same file from its local cache instead of contacting the origin. For these cache hits, the distance to the origin no longer affects latency at all.
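Here is a toy model of the hit-or-miss decision: a cache that serves a stored copy until a fixed TTL expires. The class and its parameters are hypothetical. In CloudFront, how long an object stays cached is determined by the cache behavior settings and the origin's headers, not by a single constant like this.

```python
import time
from typing import Callable


class EdgeCache:
    """Toy edge cache: serves a stored copy until its TTL expires.

    `fetch_from_origin` stands in for the long trip to the origin.
    """

    def __init__(self, fetch_from_origin: Callable[[str], str], ttl_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.fetch = fetch_from_origin
        self.ttl_s = ttl_s
        self.clock = clock
        self.entries: dict[str, tuple[str, float]] = {}  # path -> (body, expires_at)
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> str:
        now = self.clock()
        entry = self.entries.get(path)
        if entry is not None and now < entry[1]:
            self.hits += 1  # served locally: no trip to the origin
            return entry[0]
        self.misses += 1  # not cached or expired: go to the origin
        body = self.fetch(path)
        self.entries[path] = (body, now + self.ttl_s)
        return body


origin_calls = []


def origin(path: str) -> str:
    origin_calls.append(path)  # record every request that reaches the origin
    return f"contents of {path}"


cache = EdgeCache(origin, ttl_s=60)
for _ in range(3):
    cache.get("/logo.png")
print(cache.hits, cache.misses)  # 2 1
```

Only the first request travels to the origin. The next two are answered from the edge.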

While caching is powerful, it can result in stale content or even security vulnerabilities.
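The stale-content problem follows directly from how a cache works. The self-contained sketch below uses a hypothetical 5-minute TTL and an explicit clock. Once the edge has a copy, an update at the origin stays invisible until the TTL runs out. The sketch covers only staleness, not the security side. CloudFront also offers invalidations to remove objects from edge caches before they expire.

```python
# Toy origin whose content changes over time.
origin = {"/index.html": "v1"}

cache = {}  # path -> (body, expires_at)
TTL_S = 300  # hypothetical TTL of 5 minutes


def edge_get(path: str, now: float) -> str:
    body, expires_at = cache.get(path, (None, 0.0))
    if body is not None and now < expires_at:
        return body  # cache hit: the origin is not consulted
    body = origin[path]
    cache[path] = (body, now + TTL_S)
    return body


print(edge_get("/index.html", now=0))    # v1 (miss, cached until t=300)
origin["/index.html"] = "v2"             # the origin is updated
print(edge_get("/index.html", now=10))   # v1: stale copy served from the cache
print(edge_get("/index.html", now=301))  # v2: TTL expired, fetched again
```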

Conclusion

Using CloudFront adds a global network of data centers to your content delivery pipeline. This brings connections closer to visitors, no matter how far they are from the central servers. This setup shortens the TCP and TLS handshakes. Combined with reusing the edge-to-origin connection across users, it significantly reduces the response time for the first request.

Edge locations connect to AWS regions via the AWS backbone network, which offers a dedicated, congestion-free connection. This results in more predictable latency.

Finally, edge locations can also cache content close to users. This can completely eliminate the distance effect for some content.