AWS: How to limit Lambda and API Gateway scalability

You don't need a cloud-scale environment for development

Background

When you develop a new serverless function or an API, you should limit its scalability. You don't need the whole cloud to power a function that you call by hand once every few seconds. On the other hand, there are plenty of scary stories about a runaway Lambda function generating a bill large enough to deter anyone from trying out cloud-scale computing.

During development, limit the resources available to you. Software engineering is mostly about trying things out, and you want to do that without fear of such large bills. For the production environment you can lift these limits; hopefully, your codebase is tested well enough by then that such mayhem is unlikely.

The two services mainly related to serverless computing are Lambda and API Gateway. You can place restraints on both of them, and you should.

Limits

The two services use entirely different models for limiting executions, and their pricing structures are so different you wouldn't think they were designed by the same company.

This yields different means of imposing limits on their scalability. Let's see their pricing model and a back-of-the-envelope calculation for the worst case scenario.

Lambda limits

Lambda uses the notion of concurrent executions, which is the number of function instances processing requests at the same time. The default limit is 1000, and it is an account-wide restriction (applied per region).

As this is a limit on concurrency, the exact number of calls depends on the length of each execution. For example, an average execution time of 100 ms means the upper limit is 10k requests/sec.

But since the pricing model is also based on the execution time, this puts a limit on the costs.

To calculate the total cost for Lambda, we need to consider the memory allocated to the function. At the time of writing, this ranges from 128 MB up to 3008 MB.
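The relationship between the concurrency limit and throughput can be sketched in a few lines. This is only an illustration of the arithmetic above; the function name is made up for this example.

```python
def max_requests_per_second(concurrency: int, avg_duration_ms: float) -> float:
    """Upper bound on throughput when every in-flight request occupies one slot."""
    if concurrency < 0 or avg_duration_ms <= 0:
        raise ValueError("concurrency must be >= 0 and duration must be > 0")
    # Each slot can finish 1000 / avg_duration_ms requests per second.
    return concurrency * 1000 / avg_duration_ms


# Default account limit with 100 ms executions
print(max_requests_per_second(1000, 100))  # 10000.0
```

Longer executions lower the request rate, but the number of billed seconds per hour stays the same, which is why the concurrency limit also caps the cost.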

Let's see the impact on the bottom line if all hell broke loose!

The current pricing is $0.00001667 / GB-sec. With all 1000 concurrent executions busy for a full hour, the duration cost at the two extremes of memory allocation is then:

- 0.128 * $0.00001667 * 1000 * 60 * 60 = $7.68 / h

- 3.008 * $0.00001667 * 1000 * 60 * 60 = $180.52 / h

There is another pricing factor that depends on the number of executions, but it usually does not play a significant role.

We can then assume that if something goes wrong, the function will consume between $7 and $180 an hour.
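The same back-of-the-envelope calculation as a small script, in case you want to plug in your own numbers. It uses the price and the memory figures quoted above and ignores the per-request charge; the function name is an assumption of this sketch.

```python
PRICE_PER_GB_SECOND = 0.00001667  # price quoted in the text; check current pricing


def lambda_worst_case_hourly_cost(
    memory_gb: float,
    concurrency: int = 1000,
    price_per_gb_second: float = PRICE_PER_GB_SECOND,
) -> float:
    """Duration cost if `concurrency` executions run non-stop for one hour."""
    seconds_per_hour = 60 * 60
    return memory_gb * price_per_gb_second * concurrency * seconds_per_hour


for memory_gb in (0.128, 3.008):
    cost = lambda_worst_case_hourly_cost(memory_gb)
    print(f"{memory_gb} GB: ${cost:.2f} / h")
# 0.128 GB: $7.68 / h
# 3.008 GB: $180.52 / h
```

Lowering the `concurrency` argument shows how much a cap helps: with 10 concurrent executions, the largest memory setting costs about $1.81 an hour.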

API Gateway limits

API Gateway, on the other hand, puts limits on the number of requests, irrespective of their length. It uses an algorithm called the token bucket algorithm.

There are two parameters influencing the throttling. The first is the steady-state limit, which determines how many requests per second can be served if they keep coming in for a long time. The other is the burst limit, which is the size of the bucket: how many requests can be served in a short spike before the steady-state rate takes over. The default is 10000/sec for the steady-state limit and 5000 for bursting.

For the pricing, the steady-state limit is more important. The current price is $3.50 for every million requests. The worst-case cost for an hour is then:
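To make the two parameters concrete, here is a minimal token bucket sketch. It is a generic textbook version, not API Gateway's actual implementation, and the class and method names are made up for this example. Time is passed in explicitly so the behavior is easy to follow.

```python
class TokenBucket:
    """Generic token bucket: `rate` tokens are added per second, up to `burst`."""

    def __init__(self, rate: float, burst: int) -> None:
        self.rate = rate              # steady-state limit (tokens per second)
        self.capacity = burst         # burst limit (bucket size)
        self.tokens = float(burst)    # the bucket starts full
        self.last = 0.0               # timestamp of the previous request

    def allow(self, now: float) -> bool:
        """Return True if a request arriving at time `now` (seconds) is served."""
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # throttled


bucket = TokenBucket(rate=2, burst=5)
# Ten requests arrive at once: only the burst allowance is served.
print(sum(bucket.allow(0.0) for _ in range(10)))  # 5
# One second later, two tokens have been refilled.
print(sum(bucket.allow(1.0) for _ in range(3)))   # 2
```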

- 10000 * 60 * 60 * $3.5 / 1000000 = $126 / h
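The same calculation as a reusable snippet, using the price quoted above. The function name is an assumption of this sketch.

```python
PRICE_PER_MILLION_REQUESTS = 3.50  # price quoted in the text; check current pricing


def api_gateway_worst_case_hourly_cost(
    rate_limit_per_second: float,
    price_per_million: float = PRICE_PER_MILLION_REQUESTS,
) -> float:
    """Request cost if the steady-state limit is saturated for one hour."""
    requests_per_hour = rate_limit_per_second * 60 * 60
    return requests_per_hour * price_per_million / 1_000_000


print(f"${api_gateway_worst_case_hourly_cost(10000):.2f} / h")  # $126.00 / h
```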

Limiting

Just as the pricing is entirely different for the two services, the options for limiting their scalability bear no resemblance to each other.

Limiting Lambda

Lambda uses the notion of reserved concurrency. In my mind, when something is "reserved" that means a guaranteed minimum. When you reserve a table at a restaurant, you can still use other ones on the condition that they are unoccupied.

But in AWS terms, "reserved" also means "maximum". If you configure a function to use reserved concurrency then it will have access only to that pool.

Reservations are carved out of the account-wide concurrency pool. If you reserve concurrency for a lot of functions, less remains for the ones without a reservation. But for safeguarding development, you should be fine.

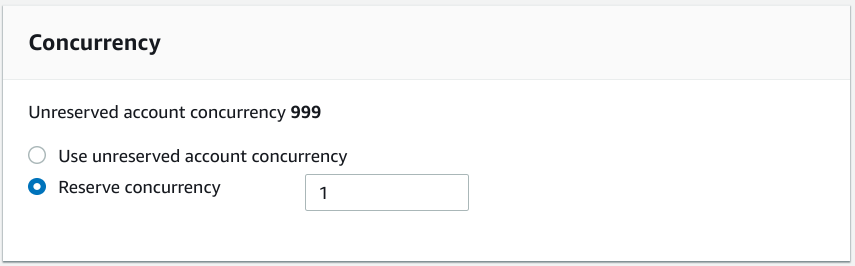

On the Console, under the function settings, you'll find the Concurrency settings:

If you use CloudFormation, look for the ReservedConcurrentExecutions. This sets the same parameter.

    Resources:
      LambdaFunction:
        Type: AWS::Lambda::Function
        Properties:
          ...
          ReservedConcurrentExecutions: 1

What is a good value to set it to?

Setting it to 1 can be too low even for development. But I believe anything more than 10 would be overkill.
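If you prefer to set the cap from a script, the boto3 Lambda client offers `put_function_concurrency`, which sets the same parameter. Below is a minimal sketch; the helper name, the function name `my-dev-function`, and the stand-in client are assumptions made for illustration. The stand-in only records calls so the example runs without AWS credentials.

```python
def cap_concurrency(lambda_client, function_name: str, limit: int) -> None:
    """Set the reserved concurrency (which also acts as the maximum) for a function."""
    if limit < 0:
        raise ValueError("limit must be non-negative")
    lambda_client.put_function_concurrency(
        FunctionName=function_name,
        ReservedConcurrentExecutions=limit,
    )


class RecordingClient:
    """Stand-in for a boto3 Lambda client; it only records the calls it receives."""

    def __init__(self) -> None:
        self.calls = []

    def put_function_concurrency(self, **kwargs) -> None:
        self.calls.append(kwargs)


client = RecordingClient()
cap_concurrency(client, "my-dev-function", 5)  # hypothetical function name
print(client.calls)

# Against a real account (requires boto3 and credentials):
#   import boto3
#   cap_concurrency(boto3.client("lambda"), "my-dev-function", 5)
```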

Limiting API Gateway

For API Gateway you can set the two kinds of limits for a Stage. If you want more granular control, you can also override them for individual methods.

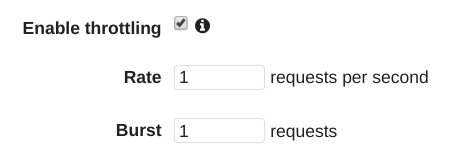

On the Console, select the Stage and enable throttling:

If you use CloudFormation, look for the MethodSettings for the Stage, and define the ThrottlingBurstLimit/ThrottlingRateLimit:

    Resources:
      Limiting:
        Type: AWS::ApiGateway::Stage
        Properties:
          ...
          MethodSettings:
            - ResourcePath: "/*"
              HttpMethod: "*"
              ThrottlingBurstLimit: 1
              ThrottlingRateLimit: 1

What would be good values for development?

Set the steady-state limit to some longer-term maximum; even 1/sec should be enough. On the other hand, raise the burst limit into the thousands. With this setup, it is unlikely you'll ever be throttled during normal use, but if a runaway process hits the API too hard, it only depletes the burst allowance and incurs negligible cost.
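A quick estimate shows how little a runaway client can cost with such settings. The sketch assumes a steady-state limit of 1/sec and a burst limit of 2000 (one possible value "in the thousands"), a bucket that starts full, and the $3.50 per million requests quoted earlier. The function name is made up for this example.

```python
PRICE_PER_MILLION_REQUESTS = 3.50  # price quoted in the text


def max_requests_served(rate_per_second: float, burst: int, seconds: int) -> float:
    """Upper bound on served requests: a full bucket plus everything refilled."""
    return burst + rate_per_second * seconds


served = max_requests_served(rate_per_second=1, burst=2000, seconds=60 * 60)
cost = served * PRICE_PER_MILLION_REQUESTS / 1_000_000
print(f"{served} requests, ${cost:.4f} / h")  # 5600 requests, $0.0196 / h
```

Compare that with the $126 an hour under the default limits.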

Conclusion

Wielding the full power of the cloud, you can easily find yourself in a costly situation. Fortunately, it is not hard to do something about it, and I suggest starting every new project with caps on resource usage. This makes sure you won't be the next one appealing to AWS support to reduce the damage.