Automatically schedule emails on MailChimp with RSS and AWS Lambda

How to create an infinite newsletter

Automatic newsletter

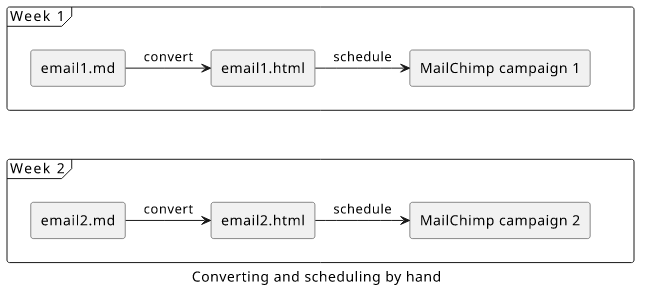

For quite some time now, I've been writing the JS Tips newsletter, which is a WebDev-related tip sent out every week. Initially, I scheduled the tips manually, creating an email campaign on MailChimp every week. The emails are in Markdown, so I needed to generate the HTML, insert it into a new mail, fill in the title and optionally the preview text, and finally set the time. It didn't take a lot of time, but it's tedious to do it by hand. No wonder I wanted to automate it, both the Markdown -> HTML conversion and the scheduling.

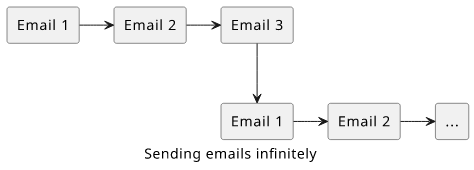

So I had a bunch of emails, and I wanted an automated solution that sends them out to the audience one at a time on a weekly basis. Then, when all of the emails are sent, it starts over from the first one, effectively creating an infinite loop.

This way, people who sign up start getting emails from that point on, and eventually they get all the content.

Content considerations

A solution like this is perfect for non-time-sensitive and non-linear content, which can be a lot of things. A few examples are tips to do something, language features of a programming language, independent lessons for regex features, phrases for learning a foreign language or even just short, independent stories. Anything that does not need to be consumed in a serialized form.

What kind of content is not a good fit?

A serialized newsletter, where one email builds upon the previous one, such as a lesson series. For this, you can use MailChimp Automations.

Another type that is not a good fit is time-sensitive emails, like news. You don't want to send old content to subscribers.

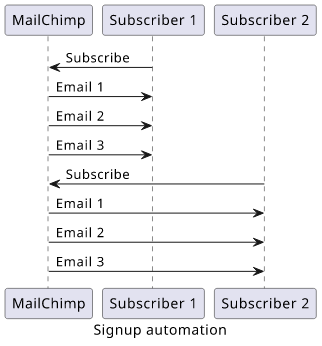

Alternative: Signup Automation

The solution I'll describe below uses RSS, which is independent of MailChimp. An alternative would be MailChimp's signup Automation, where each recipient gets a predefined series of emails after signing up.

Signup Automation lets you define a series of emails to send to new subscribers. You can then add all your emails and you can be sure recipients will get them in order.

The upside is ordering: you can have linear content where each email builds on the previous one.

But there are two downsides. First, it doesn't run forever: no matter how many emails you add, the automation eventually runs out. With a rotating series, people forget about a specific email by the time it comes around again, especially if you have a lot of them. You could argue that repeated emails are just noise, but people can unsubscribe when they stop getting value out of them, and your newsletter never dries up.

The second and bigger downside is that subscribers are out of sync, which makes keeping the content up to date more difficult. If you have 100 emails in your newsletter and the subscribers signed up at different times, you need to regularly check all of them to make sure everything is still relevant. With synchronized sending, you only need to make sure the next one is up to date.

Let's dive into the solution!

RSS

The solution is based on an RSS feed and MailChimp's "blog updates" automation. Using the MailChimp API to automatically schedule emails would also work, but I prefer solutions that are less vendor-dependent.

rss.xml

RSS is a machine-readable format for content updates, especially suited to notifying systems about blog updates. While it is less used by end users now, as exemplified by the shutdown of Google Reader, it is widely supported by blog engines such as WordPress, Jekyll, Ghost, and Medium, to name a few.

It's usually implemented as a file called rss.xml with some information about the blog itself and its latest content.

On the other side, MailChimp can send emails about blog updates, which makes it easy to automate a newsletter. It uses the blog's RSS feed to know when a new article is published.

RSS is in XML format with a defined structure:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<rss
	xmlns:dc="http://purl.org/dc/elements/1.1/"
	xmlns:content="http://purl.org/rss/1.0/modules/content/"
	xmlns:atom="http://www.w3.org/2005/Atom"
	version="2.0"
>
	<channel>
		<title><![CDATA[...blog title]]></title>
		<item>
			<title><![CDATA[...post title]]></title>
			<description><![CDATA[
				...post contents
			]]></description>
			<guid isPermaLink="false">...guid</guid>
			<pubDate>Wed, 25 Dec 2019 00:00:00 GMT</pubDate>
		</item>
	</channel>
</rss>
```

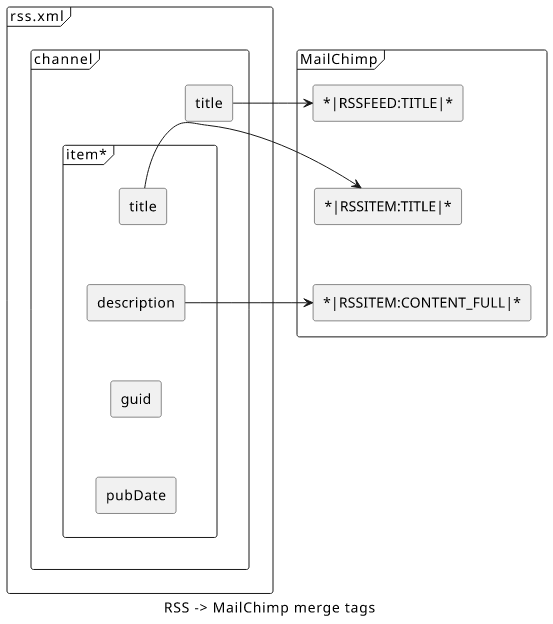

It has the blog's title (channel/title), the blog posts (channel/item, one per post), the individual posts' titles (channel/item/title), the contents (channel/item/description), the publication date (channel/item/pubDate), and a guid (channel/item/guid). The guid is the post's identifier: since everything else can change, it is what makes sure consumers of the RSS channel do not get duplicated content.

There are other properties, but these are the important ones for this solution.

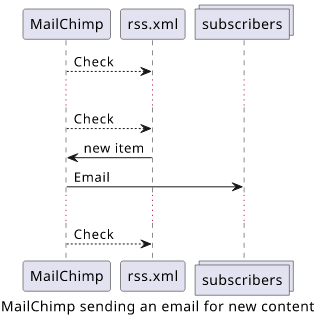

MailChimp RSS support

MailChimp periodically (usually once per day) fetches the rss.xml file and checks whether it contains any new entries. It knows when it last fetched the feed, and it compares the pubDate and guid properties of the items.
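MailChimp doesn't spell out its exact logic, so here is only a rough, hypothetical sketch of how a feed consumer can use these two fields: an item counts as new if it was published after the last check and its guid hasn't been seen before. The `findNewItems` function, the guid strings, and the dates are illustrative, not MailChimp's API.

```javascript
// Hypothetical sketch of a feed consumer's "is this new?" check.
// Not MailChimp's actual implementation.
function findNewItems(items, lastCheck, seenGuids) {
	return items.filter((item) =>
		// published after the previous check...
		item.pubDate > lastCheck &&
		// ...and not delivered before under the same guid
		!seenGuids.has(item.guid)
	);
}

// Illustrative state: yesterday's item was already sent
const seen = new Set(["2019-12-15"]);
const lastCheck = new Date("2019-12-15T12:00:00Z");
const items = [
	{guid: "2019-12-16", pubDate: new Date("2019-12-16T00:00:00Z"), title: "Email 2"},
];

console.log(findNewItems(items, lastCheck, seen).map((item) => item.title)); // [ 'Email 2' ]
```

This is also why the Lambda function changes both the pubDate and the guid every time a new email is due: repeating old content with a fresh guid and date makes it look new to the consumer.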

When MailChimp finds a new entry, it compiles an email based on the template you define on MailChimp's side. In the template, you can use placeholders, called merge tags, that are replaced with the corresponding data from the rss.xml before the email is sent.

There are several such merge tags, but we'll use only a few:

- `*|RSSFEED:TITLE|*` is the blog's title, which is channel/title
- `*|RSSITEM:TITLE|*` is the item's title, which is channel/item/title
- `*|RSSITEM:CONTENT_FULL|*` is the item's body, which is channel/item/description
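To preview what a subject line or body will look like, the substitution can be mimicked locally. This is a hypothetical helper (`renderMergeTags` is a made-up name), not something MailChimp provides; it only handles the three merge tags above and leaves any other tag untouched.

```javascript
// Hypothetical preview helper: substitutes the three merge tags used here.
// MailChimp does the real substitution server-side; this only mimics it.
function renderMergeTags(template, {feedTitle, itemTitle, itemContent}) {
	const values = {
		"RSSFEED:TITLE": feedTitle,
		"RSSITEM:TITLE": itemTitle,
		"RSSITEM:CONTENT_FULL": itemContent,
	};
	// merge tags look like *|NAME|*; tags not listed above are kept as-is
	return template.replace(/\*\|([A-Z_:]+)\|\*/g, (tag, name) =>
		name in values ? values[name] : tag
	);
}

console.log(renderMergeTags("[*|RSSFEED:TITLE|*] *|RSSITEM:TITLE|*", {
	feedTitle: "My newsletter",
	itemTitle: "First email",
	itemContent: "<h1>This is the first item</h1>",
}));
// prints "[My newsletter] First email"
```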

Generating emails with RSS

RSS was designed with blogs in mind, but who says an rss.xml can't be auto-generated?

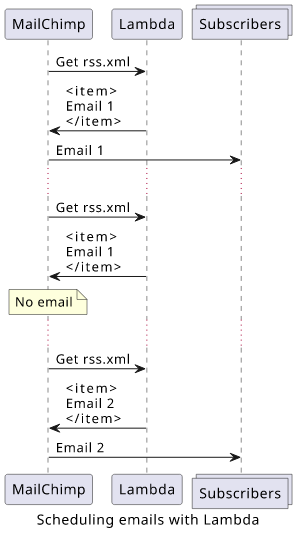

The idea is this: generate an rss.xml with Lambda that contains a single item with the content you want to send out. Then configure MailChimp to regularly check this feed and send out an email to the audience whenever this item changes.

For example, if you have 3 emails you want to send out, one every day, repeating infinitely, then on the first day the rss.xml contains one item with the content of the first email, and a publication date and guid of that day. On the second day, the single item's content is the second email, and the publication date and guid are the date of that day. The same goes for the third day with the third email. Then on the fourth day, the item's content is the first email again, but the publication date and guid are those of the current day.

| | description | pubDate | title |
|---|---|---|---|
| Day 1 | Email 1 | Day 1 | Email 1 |
| Day 2 | Email 2 | Day 2 | Email 2 |
| Day 3 | Email 3 | Day 3 | Email 3 |
| Day 4 | Email 1 | Day 4 | Email 1 |
| Day 5 | Email 2 | Day 5 | Email 2 |
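The rotation in the table boils down to a modulo operation on the number of days since the start. A minimal sketch, assuming day 0 is the first day of the newsletter and emails are numbered from 1 (`emailForDay` is an illustrative name):

```javascript
// Which email goes out on a given day (0-based day index, 1-based email number)
function emailForDay(dayIndex, emailCount) {
	// ((a % n) + n) % n keeps the result non-negative even for days before
	// the start date, where plain % would return a negative number
	return ((dayIndex % emailCount) + emailCount) % emailCount + 1;
}

for (let day = 0; day < 5; day++) {
	console.log(`Day ${day + 1}: Email ${emailForDay(day, 3)}`);
}
// Day 1: Email 1, Day 2: Email 2, Day 3: Email 3, Day 4: Email 1, Day 5: Email 2
```

The extra `+ emailCount` only matters for days before the start date, where JavaScript's `%` returns a negative remainder.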

MailChimp sees a new blog entry whenever Lambda returns a new item, inserts the content and the title using merge tags, and sends out an email to the audience, fully automated.

With this solution, you can have a rotating email newsletter, sending emails out without any manual work.

Lambda implementation

First, let's see what a Lambda implementation looks like! You can find the whole code in this GitHub repo.

This solution is a Lambda function behind a public API Gateway endpoint, all managed by Terraform. Since the API Gateway and the Terraform config follow a fairly standard structure, I'll focus on the Lambda function in this article.

Directory structure

The main.tf is the primary entry point for Terraform and it defines all resources and configurations.

The Lambda implementation is in src/index.js, while the dependencies are defined in src/package.json and src/package-lock.json.

The contents of the emails are in the src/content directory, in files such as 1.html, 2.html, and 10.html.

Npm libraries

The rss package offers a concise API to generate the rss.xml. I've found that even when a case seems simple enough to just concatenate strings, it's usually a good idea to use a library that handles the escaping.
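As an illustration of what can go wrong, a title containing `&` or `<` produces malformed XML when it is concatenated as-is. The hypothetical `escapeXml` helper below shows the minimum a hand-rolled generator would need; the rss package takes care of this for you.

```javascript
// Hypothetical helper: escapes the five characters that are special in XML
function escapeXml(text) {
	return text
		.replace(/&/g, "&amp;") // must run first, or it would re-escape the others
		.replace(/</g, "&lt;")
		.replace(/>/g, "&gt;")
		.replace(/"/g, "&quot;")
		.replace(/'/g, "&apos;");
}

const title = "Tips & tricks: <template> tags";
// Naive concatenation: not well-formed XML
console.log(`<title>${title}</title>`);
// Escaped: <title>Tips &amp; tricks: &lt;template&gt; tags</title>
console.log(`<title>${escapeXml(title)}</title>`);
```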

Also, there is gray-matter to parse the title of the email out of the front-matter.

Using front-matter to define metadata allows a self-contained file:

```html
---
title: First email
---
<style>
	.s {
		color: red;
	}
</style>
<h1>This is the first item</h1>
<p>
	<div class="s">
		This should be red
	</div>
</p>
```
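To show what gray-matter extracts from such a file, here is a deliberately tiny stand-in that only understands flat `key: value` lines between the two `---` markers. It is a sketch for illustration (`parseFrontMatter` is a made-up name); real YAML with quotes, lists, or nesting needs gray-matter itself. Like gray-matter, it returns an object with `data` and `content`.

```javascript
// Minimal front-matter parser: flat "key: value" lines only
function parseFrontMatter(source) {
	const match = /^---\r?\n([\s\S]*?)\r?\n---\r?\n?/.exec(source);
	if (!match) {
		// no front-matter: everything is content
		return {data: {}, content: source};
	}
	const data = {};
	for (const line of match[1].split(/\r?\n/)) {
		const separator = line.indexOf(":");
		if (separator > 0) {
			data[line.slice(0, separator).trim()] = line.slice(separator + 1).trim();
		}
	}
	// the content is whatever follows the closing "---"
	return {data, content: source.slice(match[0].length)};
}

const file = "---\ntitle: First email\n---\n<h1>This is the first item</h1>\n";
const {data, content} = parseFrontMatter(file);
console.log(data.title); // First email
console.log(content);    // <h1>This is the first item</h1>
```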

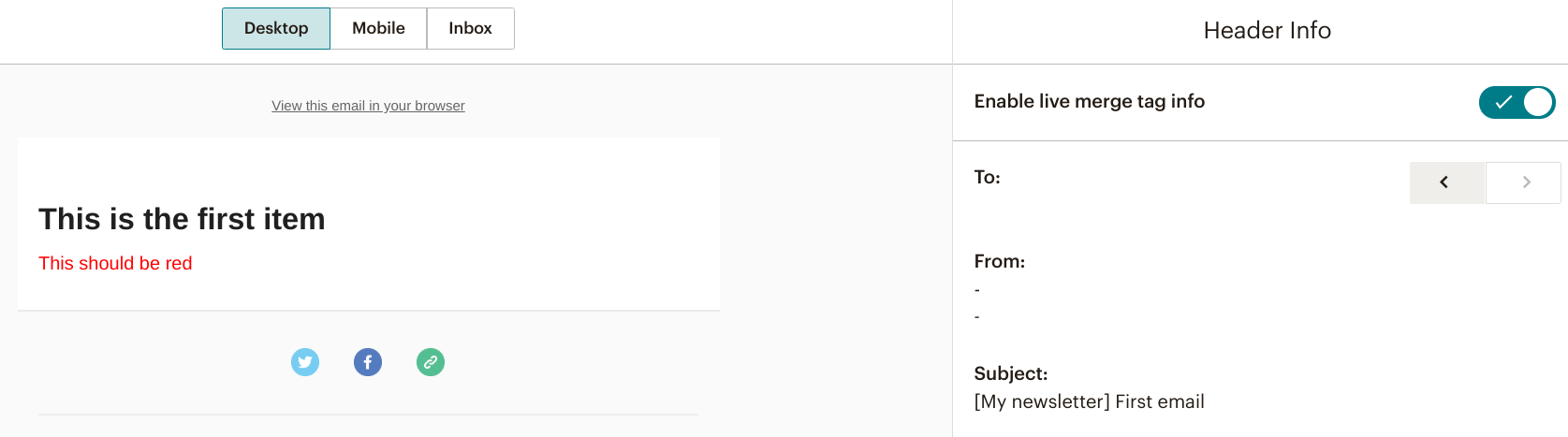

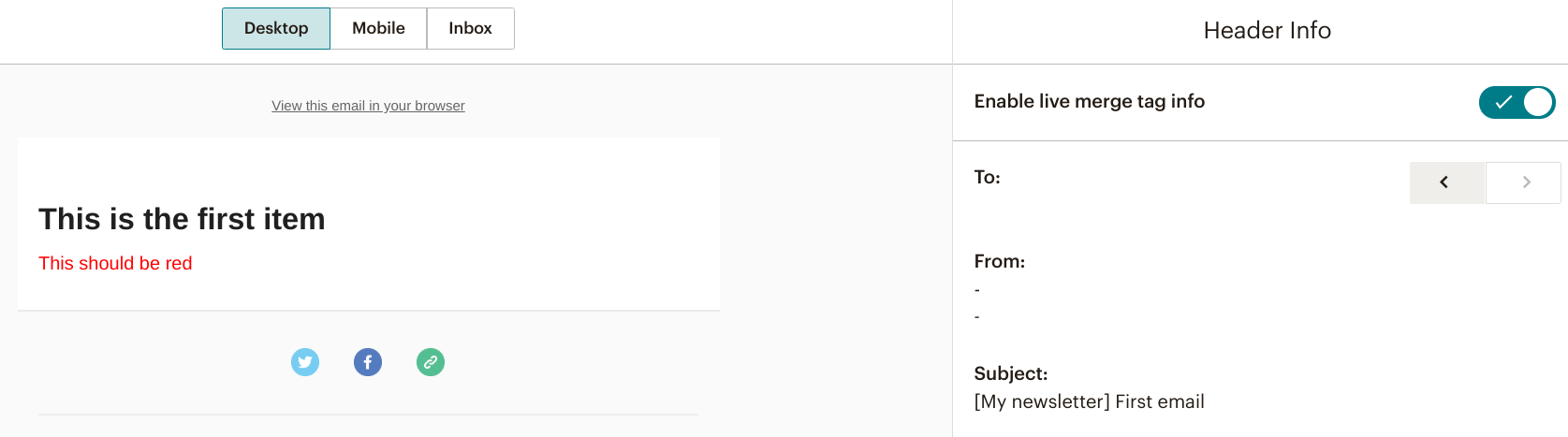

MailChimp renders this file as follows (note the subject line too):

Two things to note here. First, the title from the front-matter at the top becomes part of the email's subject.

Also note the <style> block that defines CSS rules. I've found that style blocks might not be supported by email clients, so it's better to convert them to inline style="..." attributes. The inline-css library does this.

Finally, moment provides a nice API for date manipulation.

While in this example the emails are in HTML, it's quite easy to add Markdown support too.

Lambda boilerplate

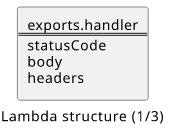

Let's move on to the actual implementation!

The first step is to interface with API Gateway by returning the result object it expects.

```javascript
exports.handler = async (event, context) => {
	const feed = await getRSS();
	return {
		statusCode: 200,
		body: feed,
		headers: {
			"Content-Type": "application/rss+xml",
		},
	};
};
```

This calls the getRSS() function and adds the required properties to the result.

Separating the parts specific to API Gateway allows a separate entry point to call the main logic. This helps during development, as you can call it without deploying:

```javascript
(async () => {
	console.log(await getRSS());
})();
```

Just don't forget to either move it to a separate file or remove it before deployment.

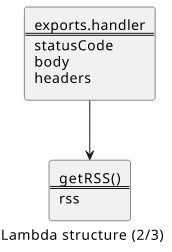

RSS channel

The getRSS function returns the XML as a string.

```javascript
const RSS = require("rss");

const getRSS = async () => {
	const {content, date, title} = await getContent();

	const feed = new RSS({
		title: "My newsletter",
	});

	// a single item: the email that is current right now
	feed.item({
		title,
		guid: date.toString(),
		date,
		description: content,
	});

	return feed.xml();
};
```

The current content, date, and title are returned from the getContent() call, then this function creates the RSS feed structure. The feed's title will be the value of the *|RSSFEED:TITLE|* merge tag, which can be used to build part of the email subject.

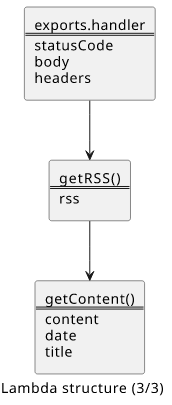

Item

The bulk of the magic is in the getContent() implementation which generates the current item.

Let's build it piece-by-piece!

```javascript
const getContent = async () => {
	// ...
	return {
		// ...
	};
};
```

First, calculate the number of days since the newsletter started:

```javascript
const startDate = moment("2019-12-15");
const currentDate = moment().startOf("day");
const difference = currentDate.diff(startDate, "days");

// ...

return {
	date: currentDate.toDate(),
	// ...
};
```

This defines a newsletter with a new email every day, but the implementation is free to define a different schedule.

To send an email every week, calculate the difference in weeks, and don't forget to take this into account for the publication date too:

```javascript
const difference = currentDate.diff(startDate, "days");
const differenceWeeks = Math.floor(difference / 7);

// use differenceWeeks to calculate the current item index
// ...

return {
	// move the publication date back to the first day of the current week
	date: currentDate.subtract(difference % 7, "days").toDate(),
	// ...
};
```
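The same weekly logic can be sketched without moment, which also makes the arithmetic easy to check. This version works with whole UTC days; `weeklySchedule`, the start date, and the email count are illustrative. For example, 9 days after the start it is week 1, so the pubDate goes back 2 days to the first day of that week:

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

function weeklySchedule(startDate, now, emailCount) {
	// whole UTC days since 1970, so times of day don't matter
	const startDay = Math.floor(startDate.getTime() / DAY_MS);
	const today = Math.floor(now.getTime() / DAY_MS);
	const difference = today - startDay;
	const differenceWeeks = Math.floor(difference / 7);
	return {
		// 0-based index of this week's email, rotating through the list
		index: ((differenceWeeks % emailCount) + emailCount) % emailCount,
		// first day of the current week: pubDate (and guid) change once a week
		// even though MailChimp checks the feed every day
		date: new Date((today - (difference - differenceWeeks * 7)) * DAY_MS),
	};
}

const {index, date} = weeklySchedule(
	new Date("2019-12-15T00:00:00Z"),
	new Date("2019-12-24T10:00:00Z"),
	3,
);
console.log(index, date.toISOString()); // 1 2019-12-22T00:00:00.000Z
```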

The next step is to read the corresponding file.

As the emails are in the content folder and their names define the ordering (1.html, 2.html, ...), make sure to use a numeric-aware ordering when sorting the filenames so that 10 comes after 2. Fortunately, JavaScript has built-in support for this with the comparator function new Intl.Collator(undefined, {numeric: true}).compare.
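A quick comparison with the default sort shows why this matters (the filenames are examples):

```javascript
// The default sort compares strings character by character, so "10" sorts
// before "2"; a numeric collator compares runs of digits as numbers
const files = ["10.html", "2.html", "1.html"];

console.log([...files].sort());
// [ '1.html', '10.html', '2.html' ]
console.log([...files].sort(new Intl.Collator(undefined, {numeric: true}).compare));
// [ '1.html', '2.html', '10.html' ]
```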

To get the filename indexed by the difference in days:

```javascript
const files = (await util.promisify(fs.readdir)("content"))
	.sort(new Intl.Collator(undefined, {numeric: true}).compare);
const currentFile = files[difference % files.length];
```

Then with the filename, read the title and the contents:

```javascript
const {content, data: {title}} =
	matter(await util.promisify(fs.readFile)(`content/${currentFile}`, "utf8"));

return {
	title,
	// ...
};
```

Finally, run inline-css in case any <style> blocks are defined:

```javascript
return {
	title,
	content: await inlineCss(content, {url: " "}),
	date: currentDate.toDate(),
};
```

The whole implementation looks like this:

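```javascript
const fs = require("fs");
const util = require("util");
const moment = require("moment");
const matter = require("gray-matter");
const inlineCss = require("inline-css");

const getContent = async () => {
	// days elapsed since the newsletter started
	const startDate = moment("2019-12-15");
	const currentDate = moment().startOf("day");
	const difference = currentDate.diff(startDate, "days");

	// numeric-aware sort so that 10.html comes after 2.html
	const files = (await util.promisify(fs.readdir)("content"))
		.sort(new Intl.Collator(undefined, {numeric: true}).compare);
	// rotate through the files, starting over after the last one
	const currentFile = files[difference % files.length];

	// split the front-matter (title) from the HTML body
	const {content, data: {title}} =
		matter(await util.promisify(fs.readFile)(`content/${currentFile}`, "utf8"));

	return {
		title,
		// move <style> rules into inline style="..." attributes
		content: await inlineCss(content, {url: " "}),
		date: currentDate.toDate(),
	};
};
```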

Deploy

To deploy the function to AWS, you need to configure an API Gateway and do the usual chores of providing an HTTP API for a function. This is out of scope for this article, as there is nothing special here. See the implementation in the GitHub project.

The sample code uses an external data source to automatically fetch the npm dependencies, so you don't need to run npm install manually. Of course, you need npm installed on the machine, as well as Terraform.

To deploy the sample project, run:

- `terraform init`
- `terraform apply`
- The output URL is the rss.xml you'll need to configure in MailChimp

MailChimp configuration

Now that there is a generated rss.xml, let's move on to the MailChimp side!

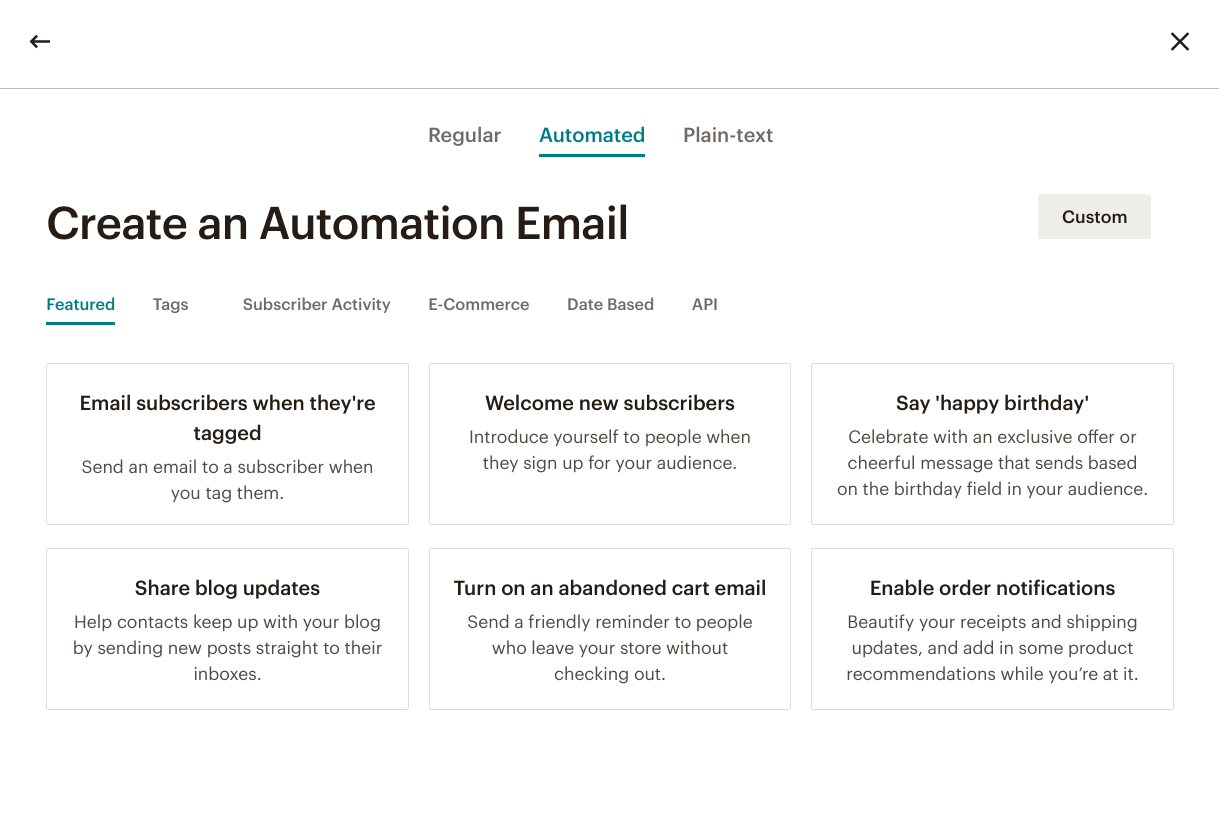

In your MailChimp account, go to Campaigns and create a new campaign:

Select Email:

Under the Automated tab, select "Share blog updates":

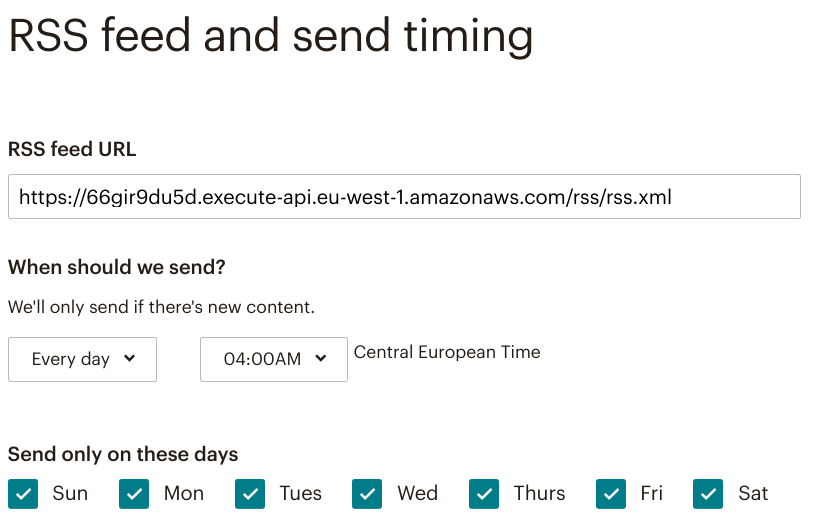

The important input is the "RSS feed URL", which is the URL output by Terraform. For the schedule, select "Every day" even if you run a weekly newsletter. This controls how often MailChimp checks for updates, which should be as often as possible; the actual cadence of the newsletter is controlled by the Lambda function.

After the RSS settings, configure the campaign just like you would any other campaign and use merge tags for dynamic data.

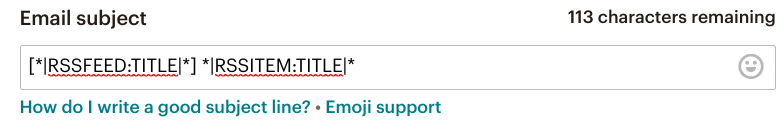

For the subject line, I suggest [*|RSSFEED:TITLE|*] *|RSSITEM:TITLE|*, which generates [Newsletter title] Email title.

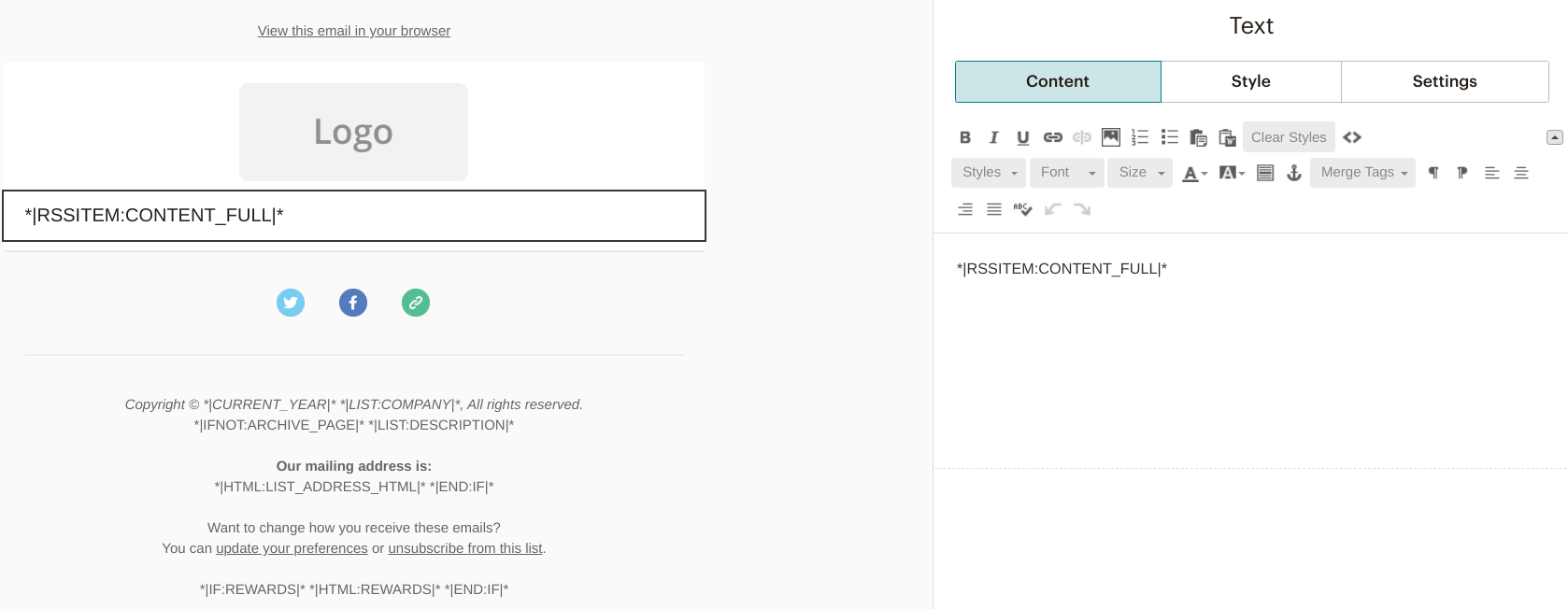

For the email contents, use the *|RSSITEM:CONTENT_FULL|* merge tag to insert the contents of the email:

Also, check the preview to make sure everything looks good:

Then start the campaign and offer visitors a place to subscribe. They will get the emails from the time they subscribe.

Testing

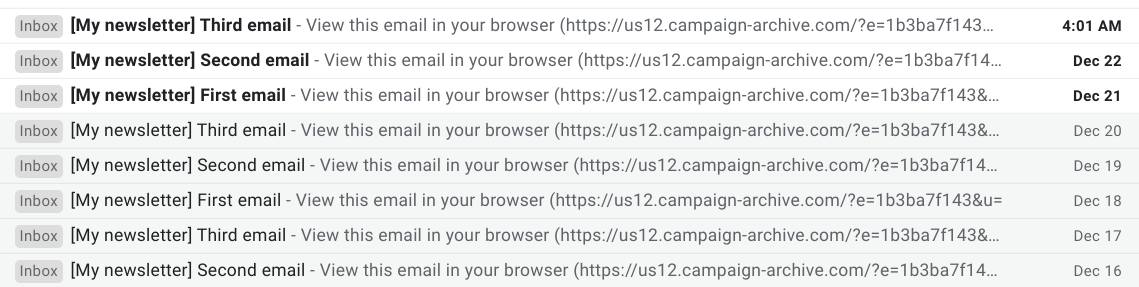

I let the sample project run for a while, and I got the emails like clockwork.

As you can see, it starts over from the first one when it reaches the last email.

Cost

Lambda is billed for usage, and as MailChimp checks the feed once per day per campaign, it will run for a few seconds a day. This is a minuscule amount.

The same goes for the bandwidth cost.

As for MailChimp, you pay for the audience and the emails sent; an RSS-based sending solution brings no additional costs.

Bottom line: practically free.

Extras

Checking emails before sending

Since the same emails are sent out over and over again, they might require some updates. Links might die, new software versions might be released, or the content might go out of date. That's why I like to get the next email before it goes out to everybody else. This way I have the chance to fix any problems, and subscribers only get up-to-date content.

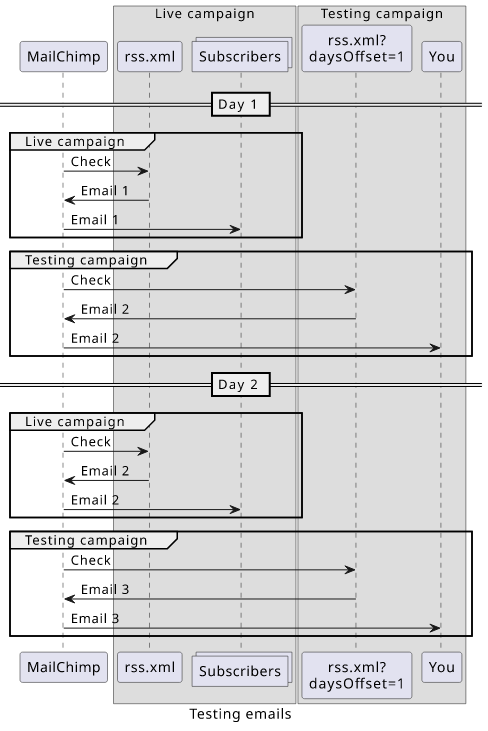

To solve this, I could've used a separate function and duplicated everything, but I opted for a query parameter that shifts the epoch (the start date) by a number of days. Then I added a new MailChimp campaign with a segment that has only me as the recipient.

The <rss url> that Terraform outputs is the real feed that everybody gets. But <rss url>?daysOffset=3 is another feed that sends out each email 3 days earlier. This is the feed for the testing campaign.

To add a parameter to Lambda, get it from the queryStringParameters:

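```javascript
const daysOffset = (() => {
	const params = event.queryStringParameters;
	// check the parameter itself: other query parameters alone would give NaN
	if (params && params.daysOffset !== undefined) {
		return Number(params.daysOffset);
	} else {
		// no parameter: this is the public feed
		return 0;
	}
})();
```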

Then when calculating the time of the first email, subtract this many days:

```javascript
const epoch = moment("2017-04-13").subtract(daysOffset, "days");
```
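How the epoch shift plays out can be checked with a small calculation. This sketch uses plain UTC dates instead of moment, and `currentIndex`, the dates, and the file count are illustrative: shifting the epoch back by 3 days makes today's item in the preview feed the same as the real feed's item 3 days from now.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

// 0-based index of the current file, with the epoch moved back by daysOffset
function currentIndex(epoch, now, fileCount, daysOffset = 0) {
	const epochDay = Math.floor(epoch.getTime() / DAY_MS) - daysOffset;
	const today = Math.floor(now.getTime() / DAY_MS);
	const difference = today - epochDay;
	return ((difference % fileCount) + fileCount) % fileCount;
}

const epoch = new Date("2019-12-15T00:00:00Z");
const today = new Date("2019-12-20T08:00:00Z");
const inThreeDays = new Date("2019-12-23T08:00:00Z");

// The ?daysOffset=3 feed shows today what the real feed shows in 3 days
console.log(currentIndex(epoch, today, 10, 3) === currentIndex(epoch, inThreeDays, 10)); // true
```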

With this solution, you are not constrained to a single testing feed. You can use <rss url>?daysOffset=1 along with <rss url>?daysOffset=3 and <rss url>?daysOffset=5.

On the MailChimp side, create a new campaign for each testing email. Make sure to filter the audience so that only you will get these emails.

Optionally, give each one a distinctive title, such as "[*|RSSFEED:TITLE|* -1] *|RSSITEM:TITLE|*" and "[*|RSSFEED:TITLE|* -3] *|RSSITEM:TITLE|*", so that it's immediately obvious in how many days the email will go live.

You can have any number of such testing campaigns.

Let's talk about the security implications of adding this parameter!

While the RSS URL does not show up in the emails that go to the audience, it is not a secret either. With the daysOffset parameter, anybody who gets hold of the URL can fetch all the emails at once.

Because of this, it's a good idea to specify a limit to it, just like for any user-provided value:

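```javascript
// Number.isInteger also rejects NaN, e.g. for ?daysOffset=abc
if (!Number.isInteger(daysOffset) || daysOffset > 10 || daysOffset < 0) {
	throw new Error("Invalid daysOffset");
}
```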

Image support

I did not need to include images in my email series, but it should be possible. Since the content is generated by the Lambda function, over which you have full control, it shouldn't be hard to include images.

I would make sure that they are defined as data URIs, so instead of <img src="image.jpg">, it is <img src="data:image/jpeg;base64,...">.

This way there is no need to add another endpoint just to return the images.
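Encoding a file as a data URI takes only a few lines with Node's `Buffer`. A sketch with a hypothetical `toDataUri` helper; the MIME type has to match the actual image format:

```javascript
// Builds a data URI from raw bytes and a MIME type
function toDataUri(buffer, mimeType) {
	return `data:${mimeType};base64,${buffer.toString("base64")}`;
}

// In the Lambda function, the bytes would come from reading the image file,
// e.g. with fs.readFileSync; a tiny buffer keeps this example self-contained
const bytes = Buffer.from("hi");
console.log(`<img src="${toDataUri(bytes, "image/jpeg")}">`);
// <img src="data:image/jpeg;base64,aGk=">
```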

Adding and removing emails

The solution described above is entirely stateless. It does not record when a particular email goes out and also does not keep track of future emails. This makes it easier to deploy the solution, and you don't need to worry about losing a database if you make changes.

But what happens if you add a new email to the series?

In this case, the previous cycles will be longer, and that will result in a jump backward in the queue. For example, if you have sent out your email series twice, adding an email will result in a jump back 2 emails.

The same happens when removing an email, just in reverse: subscribers experience a jump forward in the queue. This might be the less problematic case, as they don't know which emails they did not get.

How to add or remove emails then?

Make sure you also change the epoch value: set it to the date when the imaginary first email of the modified series would have gone out, so that the email going out today keeps its position.
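The rebasing can be sketched as a small calculation. This assumes daily sending and plain UTC dates instead of moment, and `indexFor` and `rebaseEpoch` are illustrative names: the new epoch is placed so that the current cycle counts as the first cycle of the modified series, which keeps today's email at the same position.

```javascript
const DAY_MS = 24 * 60 * 60 * 1000;

function dayNumber(date) {
	return Math.floor(date.getTime() / DAY_MS); // whole UTC days since 1970
}

// 0-based position of today's email in a series of `count` emails
function indexFor(epoch, now, count) {
	const difference = dayNumber(now) - dayNumber(epoch);
	return ((difference % count) + count) % count;
}

function rebaseEpoch(epoch, now, oldCount) {
	// position inside the current cycle of the old series
	const position = indexFor(epoch, now, oldCount);
	// the imaginary first email of the modified series went out that many days ago
	return new Date((dayNumber(now) - position) * DAY_MS);
}

const epoch = new Date("2019-12-15T00:00:00Z");
const now = new Date("2019-12-24T09:00:00Z"); // 9 days later
console.log(indexFor(epoch, now, 4));    // 1: today's email with 4 emails
console.log(indexFor(epoch, now, 5));    // 4: a 5th email without rebasing moves the position
const newEpoch = rebaseEpoch(epoch, now, 4);
console.log(newEpoch.toISOString());     // 2019-12-23T00:00:00.000Z
console.log(indexFor(newEpoch, now, 5)); // 1: same position as before
```

This only keeps the position stable if that position still exists in the modified series, which may not be the case after removing emails.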

Conclusion

This is an effective and user-friendly way to send content to an arbitrary email list. It requires a bit of planning, especially when you need to add or remove parts of the sequence, but then you can practically forget about it.

You can also add bells and whistles, like Markdown support, images, even dynamic content.